The contemporary discourse on Artificial Intelligence is defined by a tension between two divergent perspectives on risk. One narrative, which often captures considerable public and media attention, centers on future-oriented, large-scale existential threats. This perspective is articulated in cautionary statements from prominent organizations, such as the Future of Life Institute, which asks:

“Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization?”

Similarly, the Center for AI Safety states:

"Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

In stark contrast to these speculative, high-impact scenarios, a second narrative focuses on the concrete and immediate harms already manifesting from deployed AI systems. These present-day risks include well-documented issues such as:

- algorithmic discrimination, where systems perpetuate and amplify societal biases;

- pervasive privacy violations stemming from the mass collection and analysis of personal data;

- the substantial environmental footprint associated with the energy-intensive processes of training and operating large-scale AI models.

It is this landscape of tangible and emerging harms that has directly motivated major regulatory interventions, most notably the European Union’s AI Act. While this legislation introduces a foundational tiered approach to risk management, it operates on a conceptualization of risk that warrants a more profound and granular analysis. This academic exploration, therefore, adopts an epistemological approach, drawing upon the multi-component frameworks used in natural disaster analysis to deconstruct and more thoroughly understand the constituent elements of AI-related risk, offering a more nuanced path toward effective mitigation.

Regulatory Frameworks and the European AI Act

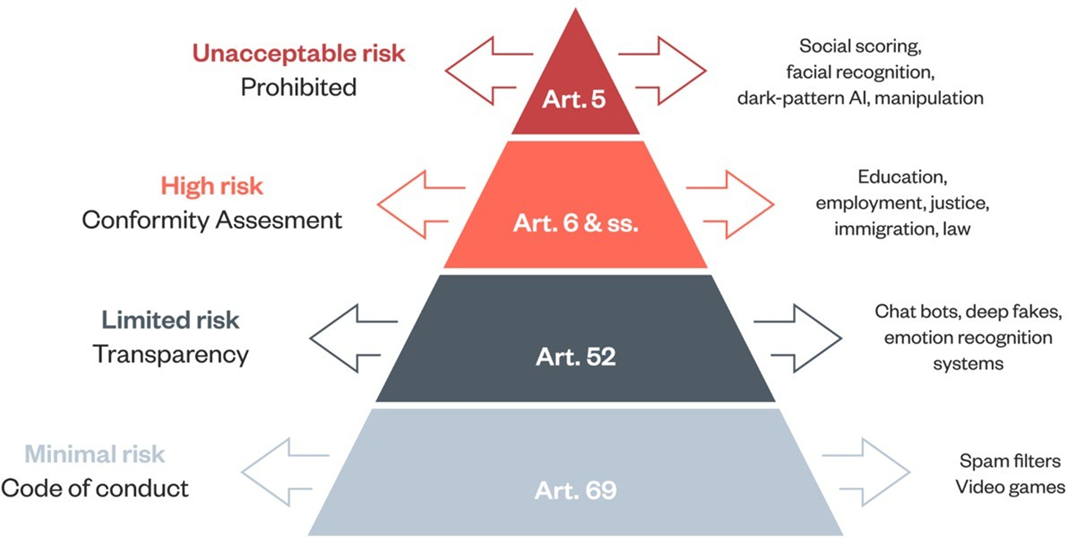

The conventional technical approach to risk assessment is rooted in a definition that considers risk to be a function of two primary variables: the probability of an adverse event occurring and the magnitude of its potential consequences. This classical model, while widely adopted and foundational to regulatory instruments like the EU AI Act, presents considerable challenges when applied to the unique complexities of artificial intelligence. The opacity and emergent behaviors of many advanced AI systems make the reliable calculation of event probability exceedingly difficult, if not impossible. Despite this limitation, the EU AI Act leverages this risk-based philosophy to construct a hierarchical regulatory framework, categorizing AI systems into four distinct tiers, each subject to a different level of legal scrutiny. This structure is designed to apply the most stringent controls to the applications posing the greatest danger to public safety and fundamental rights.
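The classical definition can be written compactly. The notation below is an illustrative convention and is not taken from the AI Act: $p$ stands for the probability of an adverse event and $s$ for the magnitude of its consequences. The product form is one common instantiation of "a function of two variables", assumed here for concreteness.

$$
R = p \times s
$$

Read this way, the difficulty with opaque AI systems is plain: if $p$ cannot be estimated reliably, $R$ cannot be computed, however carefully $s$ is bounded.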

The four-tiered pyramid of the AI Act is structured as follows, moving from the most to the least regulated applications:

| Risk Level | Regulatory Approach | Examples of AI Systems |
|---|---|---|
| Unacceptable Risk | Prohibited | Social scoring by governments, real-time remote biometric identification in public spaces (with narrow exceptions), manipulative AI that exploits vulnerabilities. |
| High Risk | Conformity Assessment & Strict Requirements | AI used in critical infrastructure, education, employment, justice, law enforcement, immigration, and medical devices. |
| Limited Risk | Transparency Obligations | Chatbots, deepfakes, and emotion recognition systems, which must inform users that they are interacting with an AI. |
| Minimal Risk | Voluntary Codes of Conduct | Spam filters, AI-enabled video games. |

While this legislative structure provides a crucial and pragmatic tool for governance, its focus on categorizing risk levels does not inherently analyze the underlying factors—the how and why—that generate these risks. A more robust analytical model is therefore necessary to understand the distinct dimensions contributing to the overall risk profile of an AI system.

Multi-Component Framework for Analyzing Risk

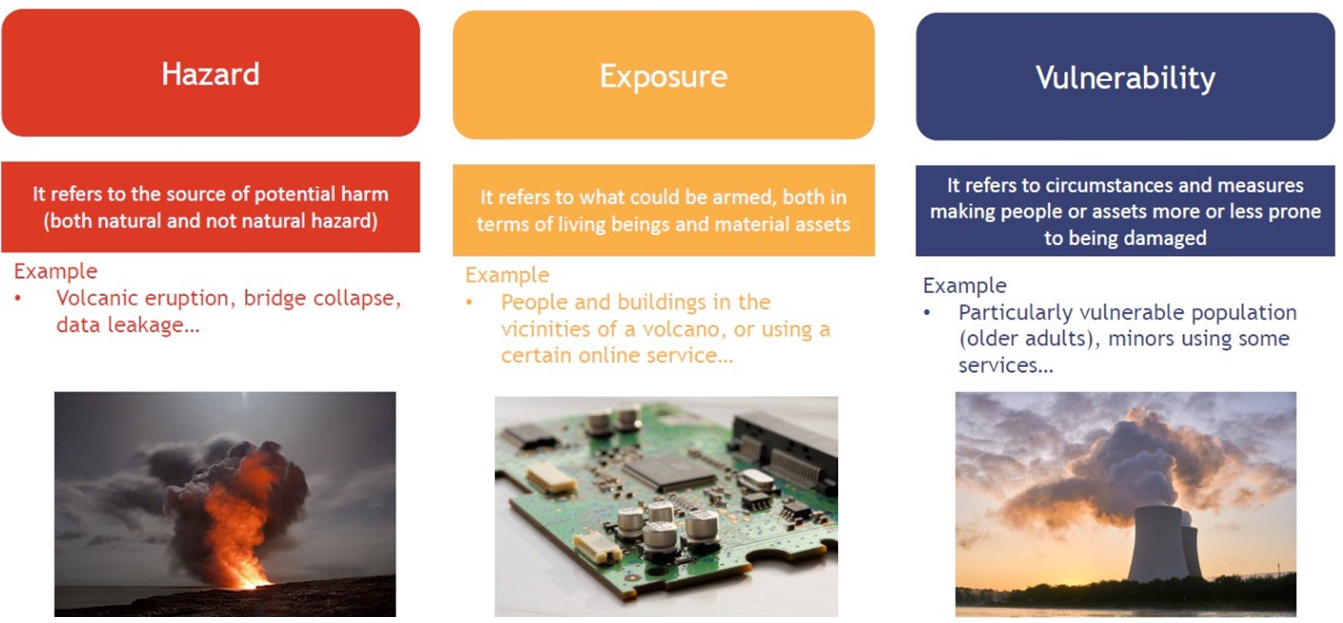

To construct a more robust and comprehensive model for understanding AI-related harms, it is useful to borrow analytical tools from more established fields, such as natural disaster risk analysis. This approach allows for the disaggregation of the abstract concept of risk into three distinct yet interconnected core components: Hazard, Exposure, and Vulnerability.

The first component, Hazard, refers to the intrinsic source of potential harm, encompassing both natural phenomena like a volcanic eruption and technological failures such as a data breach or a systematically biased algorithm. Complementing this is Exposure, which defines the scope of what could be harmed, including the populations, material assets, or critical systems situated in the path of a given hazard. The relationship between these two is crucial, as even a minimal hazard can precipitate a major catastrophe if the level of exposure is sufficiently high. The final component, Vulnerability, describes the inherent susceptibility of the exposed entities to damage. This dimension accounts for the specific circumstances and preexisting conditions (such as a lack of protective infrastructure, socioeconomic disadvantages, or specific demographic characteristics) that render a population or system more or less prone to harm when a hazard materializes.

By analyzing these components separately, risk mitigation becomes a more strategic endeavor. While reducing or eliminating a natural hazard is typically impossible, technological hazards can often be directly addressed through interventions like outright prohibition or market withdrawal. Likewise, overall risk can be managed by reducing exposure, for instance, by limiting a system’s deployment to non-critical areas, or by reducing vulnerability through the implementation of protective measures like enhanced cybersecurity or specialized user training.
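In natural disaster analysis, this decomposition is often summarized as a product of the three components. The multiplicative form below is a common convention assumed here for illustration; the argument only requires that risk depend on all three, with $H$, $E$, and $V$ denoting hazard, exposure, and vulnerability.

$$
R = H \times E \times V
$$

Under this reading, driving any single factor toward zero lowers the overall risk, which is why prohibition (acting on $H$), restricted deployment (acting on $E$), and protective measures (acting on $V$) are all available levers.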

Hazard-Critical Systems

In certain applications, the risk profile is overwhelmingly dominated by the intrinsic hazard of the system itself. These are “hazard-critical” systems, which are deployed in high-stakes contexts where any malfunction, error, or bias can lead to severe and irreversible consequences.

Example

Prime examples include medical AI, where a diagnostic error could result in a fatal outcome; predictive justice systems, where an algorithmic bias can lead to unjust incarceration and the violation of fundamental legal rights; and autonomous weapons systems, where a failure in target identification can result in the loss of innocent lives.

In these cases, the sheer magnitude of the potential harm is the primary driver of the system’s risk profile.

Exposure-Critical Systems

In contrast, other AI systems are better understood as “exposure-critical.” For these systems, the intrinsic hazard of a single operational instance may be relatively low, but the risk becomes critical due to the massive scale of their deployment.

Example

The archetypal example is the recommender system embedded within large online platforms and social networks. An individual content recommendation is not, in isolation, a life-or-death matter. However, when this seemingly benign hazard is amplified across a user base of billions, its potential for large-scale societal influence—through mechanisms like fostering addiction, propagating misinformation, and enabling widespread manipulation—transforms it into a significant systemic risk.

Here, the critical factor is not the severity of an individual event but the cumulative impact driven by immense exposure.

Vulnerability-Critical Systems

A third category of AI systems can be defined as “vulnerability-critical,” where the risk is predominantly a function of the specific population with which the system interacts. This category includes technologies like affective computing and social robotics, which are increasingly deployed in sensitive contexts such as elderly care and special needs education.

The users in these scenarios—older adults, children, or individuals with cognitive impairments—are prototypically vulnerable populations. The primary risk is not necessarily a catastrophic malfunction but the potential for more subtle harms like emotional manipulation, the fostering of unhealthy dependency, or the erosion of human dignity, all of which are significantly amplified by the inherent vulnerability of the users. This multi-faceted analysis demonstrates that a one-dimensional, hazard-focused view of AI risk is profoundly incomplete.

Example

For instance, a deepfake, categorized as “limited risk” under the EU AI Act, can become extremely risky if its exposure is high, as in a political campaign. Similarly, an emotion recognition system can become critical if used on a vulnerable population, such as job applicants, thereby highlighting the necessity of a more granular and context-aware approach to risk assessment.
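The three categories above can be sketched as a simple classification by dominant component. This is a minimal illustration, not a measurement method: the `RiskProfile` class, the 0 to 1 scale, and every score below are hypothetical values chosen only to mirror the examples in the text.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RiskProfile:
    """Hypothetical 0-1 scores for the three risk components."""
    name: str
    hazard: float
    exposure: float
    vulnerability: float

    def dominant_component(self) -> str:
        """Return the component with the highest score (first one wins a tie)."""
        scores = {
            "hazard": self.hazard,
            "exposure": self.exposure,
            "vulnerability": self.vulnerability,
        }
        return max(scores, key=scores.get)

    def label(self) -> str:
        """Name the category used in the text, e.g. 'exposure-critical'."""
        return f"{self.dominant_component()}-critical"


# Illustrative scores only; they are assumptions, not empirical estimates.
PROFILES = [
    RiskProfile("medical diagnosis", hazard=0.9, exposure=0.3, vulnerability=0.5),
    RiskProfile("recommender system", hazard=0.2, exposure=0.95, vulnerability=0.4),
    RiskProfile("care robot", hazard=0.3, exposure=0.2, vulnerability=0.9),
]

# The same system shifts category when its context of use changes.
deepfake = RiskProfile("deepfake, private use", hazard=0.4, exposure=0.1, vulnerability=0.2)
campaign_deepfake = replace(deepfake, name="deepfake, political campaign", exposure=0.9)

for profile in PROFILES + [deepfake, campaign_deepfake]:
    print(f"{profile.name}: {profile.label()}")
```

The deepfake pair illustrates the point of the example above: nothing about the artifact changes between the two profiles, only its exposure, yet the dominant source of risk moves from the hazard to the scale of distribution.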

Modern AI as Experimental Technology

While the multi-component framework of hazard, exposure, and vulnerability provides a necessary analytical structure, its application is significantly complicated by the inherent nature of advanced AI systems, which are frequently characterized by deep and irreducible uncertainty.

To understand this challenge, one must look to the contemporary definition of these technologies. The OECD defines an AI system as

a machine-based system that is capable of influencing the environment by producing an output (predictions, recommendations or decisions) for a given set of objectives. It uses machine and/or human-based data and inputs to

- perceive real and/or virtual environments;

- abstract these perceptions into models through analysis in an automated manner (e.g., with machine learning), or manually; and

- use model inference to formulate options for outcomes.

AI systems are designed to operate with varying levels of autonomy.

Crucially, these systems display varying levels of autonomy and adaptiveness following deployment. A defining and problematic characteristic of many modern systems, particularly those based on deep learning, is their opacity. Often referred to as the “black box” problem, the internal representational states and decision-making processes of these models are not fully intelligible, even to their developers. This lack of interpretability makes predicting their behavior in novel, real-world situations inherently difficult.

Consequently, many state-of-the-art AI systems should be epistemologically categorized as experimental technologies. Unlike the products of established engineering disciplines, whose failure modes are well understood and quantifiable, such technologies carry uncertainties that can be resolved only through use:

“The introduction of such technologies into society comes with large uncertainties, unknowns and indeterminacies that are often only reduced once such technologies are actually introduced into society.”

“Risks and benefits of experimental technologies may not only be hard to estimate and quantify, sometimes they are unknown.”

van de Poel 2016

Therefore, a comprehensive ex-ante (pre-deployment) estimation of their full spectrum of risks and benefits is often impossible. Many risks only manifest as emergent properties once the system interacts with the complexities of the real world. This issue is particularly acute with General-Purpose AI Systems (GPAIS), such as Large Language Models (LLMs). Because these systems are designed to be flexible and applicable to a vast, often unanticipated array of purposes, they are inherently multi-hazard. The combination of potential system errors (such as hallucinations) and malicious human misuse can result in a proliferation of harms ranging from the generation of malware to the automated spread of disinformation. Given this multi-purpose nature, conducting a complete and exhaustive risk assessment prior to deployment is not only difficult but practically impossible.

Ethical Incrementalism in Governance

Given the experimental nature of these technologies and the deep uncertainty surrounding their operational behavior, a paradigm shift in risk management and regulatory governance is required. Rather than attempting the futile task of predicting and mitigating every conceivable hazard ex-ante, a more pragmatic approach involves identifying broad “areas of concern.” These are specific high-stakes domains or contexts of use where the deployment of powerful, opaque AI systems should be strictly limited or subjected to rigorous oversight due to the severity of potential failure. For instance, acknowledging the inherent potential for error in Large Language Models, their direct use in critical medical diagnostic contexts could be designated as a primary area of concern. This strategic shift moves the regulatory focus from the impossible task of eliminating the hazard within the model itself to the manageable task of controlling the context of its deployment.

Effective governance must, therefore, systematically incorporate the concept of uncertainty into its operative framework. This necessitates the adoption of a model of Ethical Incrementalism, which fundamentally rejects a “release-and-forget” approach in favor of a dynamic, iterative process. This model is predicated on the gradual and incremental introduction of AI systems into society. By deploying systems in controlled stages—for instance, within regulatory sandboxes—stakeholders can monitor for emerging societal effects and utilize this feedback to iteratively improve system design before a broader release. Furthermore, because many systemic impacts only become visible at scale, continuous monitoring is essential. This allows regulators to dynamically manage and intervene regarding the exposure component of risk as usage patterns evolve.
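The staged process described above can be sketched as a gated rollout loop. This is a toy model under stated assumptions: the stage names, exposure fractions, harm threshold, and the `toy_monitor` function are all hypothetical, and `observe_harm_rate` stands in for whatever real post-deployment monitoring a regulator or developer would use.

```python
from typing import Callable

# Hypothetical rollout stages: (label, fraction of the target population exposed).
STAGES = [("sandbox", 0.001), ("pilot", 0.01), ("regional", 0.1), ("general", 1.0)]


def staged_rollout(
    observe_harm_rate: Callable[[float], float],
    harm_threshold: float,
) -> list[tuple[str, float, str]]:
    """Advance through STAGES while the observed harm rate stays acceptable.

    observe_harm_rate takes the exposure fraction of a stage and returns the
    harm rate observed at that scale. Expansion stops at the first stage whose
    harm rate exceeds harm_threshold.
    """
    log = []
    for label, exposure in STAGES:
        harm_rate = observe_harm_rate(exposure)
        if harm_rate > harm_threshold:
            # Halt expansion; the system returns to redesign before wider release.
            log.append((label, harm_rate, "halt"))
            break
        log.append((label, harm_rate, "proceed"))
    return log


def toy_monitor(exposure: float) -> float:
    """Toy model in which harm grows with scale and is barely visible early on."""
    return 0.002 + 0.1 * exposure


for entry in staged_rollout(toy_monitor, harm_threshold=0.01):
    print(entry)
```

With these toy numbers the system passes the sandbox and pilot stages and is halted at the regional stage, so the general release is never reached. The design choice worth noting is that the decision variable is exposure, not the model itself: the loop never inspects the system's internals, which matches the shift from eliminating the hazard to controlling the context of deployment.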

Finally, this framework demands a heightened ethical focus on protecting vulnerable subjects and contexts, recognizing that the negative externalities of uncertainty often disproportionately affect those with the least capacity to withstand harm. This staged, adaptive approach aligns with international standards, such as the OECD AI Principles, which call for strict accountability and a “systematic risk management approach to each phase of the AI system lifecycle on an ongoing basis.”

Toward an Adaptive Governance of AI Risk

The conceptualization of AI-related risk is undergoing a necessary and profound evolution. A simplistic, hazard-centric perspective, which treats risk as a static and inherent property of the technology itself, is demonstrably insufficient for capturing the complex, dynamic, and context-dependent ways in which AI systems can generate harm. By adopting a multi-component analysis that systematically disaggregates risk into its constituent parts—hazard, exposure, and vulnerability—a far richer, more granular, and ultimately more actionable understanding emerges. This analytical framework provides a superior diagnostic tool, revealing that the overall risk profile of a system can be amplified just as much by the scale of its deployment or the inherent susceptibility of its user base as by any intrinsic technological flaw.

This framework, however, reaches its epistemological limits when confronted with the most novel, experimental, and general-purpose AI systems. For these technologies, which are characterized by opacity and emergent behaviors, we must transcend the traditional paradigms of risk assessment that presume a degree of predictability that simply does not exist. The profound uncertainty inherent in these systems necessitates a fundamental shift in our approach to governance. This requires the development and implementation of new ethical and regulatory frameworks explicitly designed to operate under conditions of uncertainty. These frameworks must be built upon the principles of Ethical Incrementalism, embracing a more cautious and iterative model for technological deployment.

The core tenets of this approach include a commitment to the gradual and controlled introduction of new systems, the continuous post-deployment monitoring of their societal impacts, and a steadfast institutional commitment to identifying and protecting the most vulnerable populations from emergent harms.

It is only through such a nuanced, dynamic, and adaptive approach that society can hope to navigate the dual imperative of fostering technological innovation while responsibly managing its profound and multifaceted challenges.