TL;DR: Cache Coherence Protocols

Cache coherence ensures that all processors in a multiprocessor system have a consistent view of shared memory. When multiple caches hold copies of the same data, protocols are needed to manage updates and prevent stale data reads. The two primary approaches are snooping and directory-based protocols.

Snooping Protocols

- Best for: Smaller-scale systems with a shared bus.

- Mechanism: Each cache controller “snoops” on a common bus, monitoring all memory transactions. When a write to a shared block occurs, controllers react based on the protocol.

- Key Strategies:

  - Write-Invalidate: A processor wanting to write to a block first broadcasts an invalidation message, forcing all other caches to discard their copies. This is efficient for multiple writes by the same processor. The MSI and MESI protocols are common examples, using states (Modified, Shared, Invalid, and Exclusive for MESI) to track block status.

  - Write-Update: A processor broadcasts the updated data to all other caches, which then update their copies. This reduces read latency but consumes more bus bandwidth.

- Limitation: Relies on a broadcast medium (the bus), which becomes a bottleneck as the number of processors increases.

Directory-Based Protocols

- Best for: Large-scale, scalable systems (e.g., Distributed Shared Memory).

- Mechanism: A centralized or distributed directory maintains the state and sharing information for each memory block. It tracks which processors have a copy of a block and whether that copy is modified.

- How it works: Instead of broadcasting, a processor’s request goes to the block’s “home” directory. The directory then sends point-to-point messages to the specific nodes involved (e.g., to invalidate sharers or fetch data from the owner).

- Key States:

  - Uncached (U): Not cached by any processor.

  - Shared (S): One or more processors have a clean copy.

  - Modified (M): Exactly one processor has a modified copy.

- Key Messages: Communication relies on targeted messages:

  - Read/Write Miss: A processor sends a request to the home directory.

  - Invalidate: The directory tells specific sharers to discard their copies.

  - Fetch and Fetch/Invalidate: The directory requests the latest data from the current owner.

  - Data Value Reply and Data Write Back: Messages used to transfer the actual data block between nodes.

- Advantage: Avoids the broadcast bottleneck of snooping protocols, making it much more scalable.

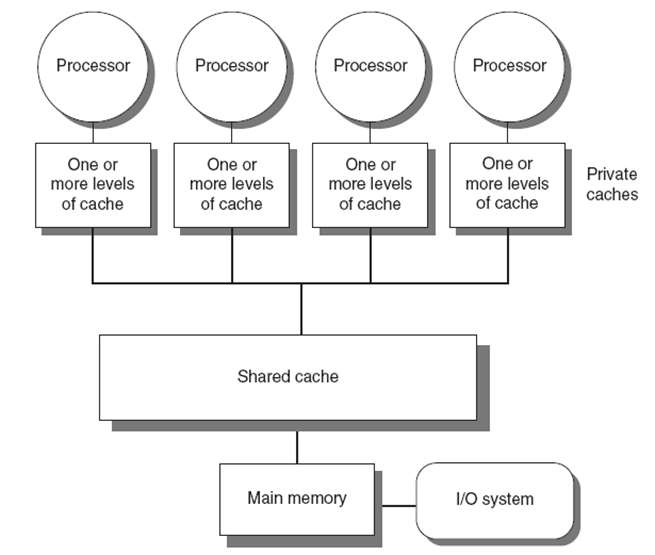

Caches play a pivotal role in the performance and scalability of multiprocessor (MP) systems. By providing localized storage for frequently accessed data, caches reduce average memory access latency and help alleviate contention for shared memory resources. In multiprocessor environments, where several cores may simultaneously request access to the same data, having individual caches allows each core to serve its requests independently, thereby improving throughput and reducing bottlenecks.

However, caching shared data introduces new challenges, particularly the problem of cache coherence. When multiple cores cache the same memory location, the system must ensure that all copies remain consistent with one another.

For example, if one processor modifies a value in its local cache, that change must be propagated or reflected across all other caches that store the same data.

Without coherence mechanisms, stale data could be read, leading to incorrect program behavior.

Two key mechanisms help address this: migration and replication of data items.

- Migration allows data to be moved from a remote memory or cache location to the local cache of a requesting core, providing faster access and reducing interconnect bandwidth usage.

- Replication allows multiple read-only copies of the same data to exist across different caches. This is especially useful when multiple processors need to read the same value, as it minimizes contention and access latency. However, replication introduces consistency overhead when any of the copies are modified, since all other instances must be updated or invalidated.

Cache Coherence Protocols

To enforce coherence in hardware, systems employ cache coherence protocols, which define rules for maintaining consistent views of shared data across caches. The fundamental task of such protocols is to track the sharing state of each cache block and ensure that changes are propagated correctly across the system.

Two major classes of cache coherence protocols exist: snooping protocols and directory-based protocols.

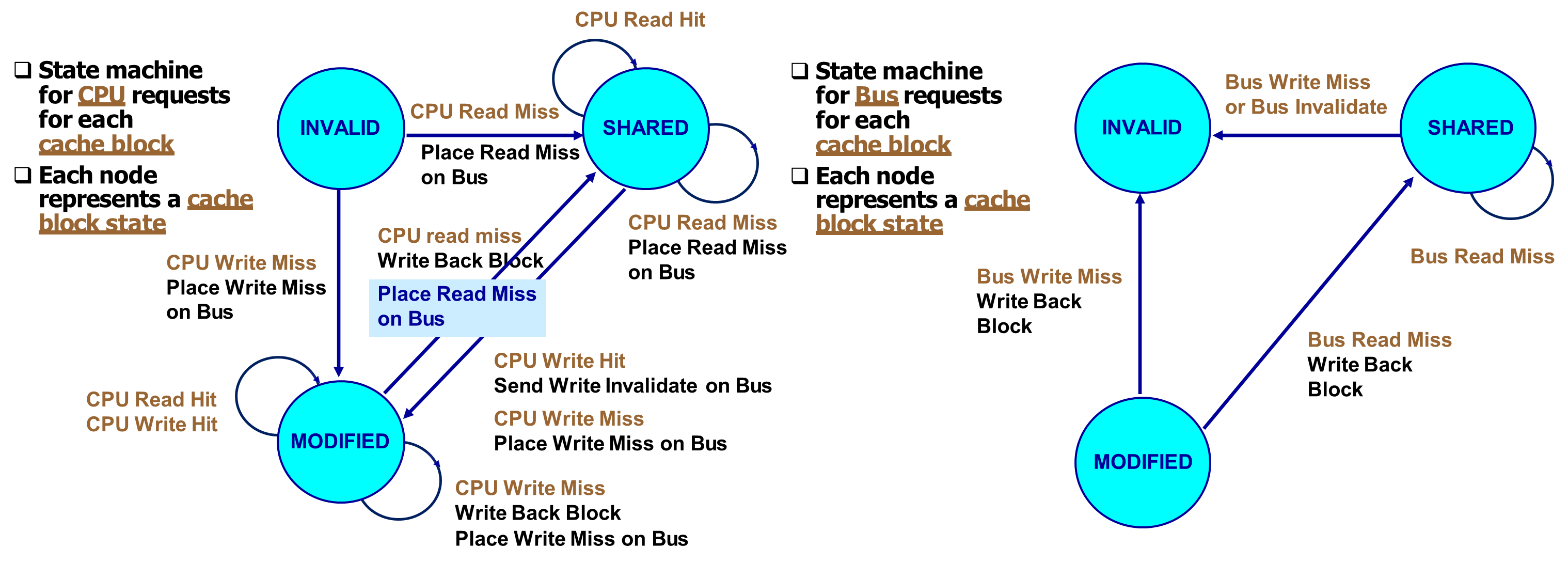

- In snooping protocols, each cache controller monitors a common communication medium—typically a bus or interconnect—to “snoop” on memory transactions issued by other processors. When a write operation is observed, the controller reacts appropriately, either by invalidating its own stale copy or updating it.

- In directory-based protocols, a centralized or distributed directory keeps track of which caches hold copies of each memory block. This directory coordinates updates and invalidations to maintain coherence, allowing for more scalable designs in larger systems.

Snooping Protocol

Memory consistency must be distinguished from memory coherence; the two differ in scope.

- Coherence pertains to individual memory locations, ensuring that the most recent write to a location becomes visible to all processors.

- Consistency governs the behavior of memory operations across multiple addresses, defining the rules by which updates become visible relative to one another.

In strict consistency models, a write must not be considered complete until it is visible to all processors, and the global ordering of writes must be preserved.

For example, if a processor writes to location A and then to location B, any processor that observes the updated value of B must also see the updated value of A.

These guarantees, while simplifying parallel programming, impose considerable demands on processor and memory subsystems. Coherence protocols, such as those based on snooping, are vital in maintaining a consistent view of memory in multiprocessor environments. Snooping-based protocols, commonly used in small-scale symmetric multiprocessors (SMPs), leverage a shared communication medium—typically a snoopy bus.

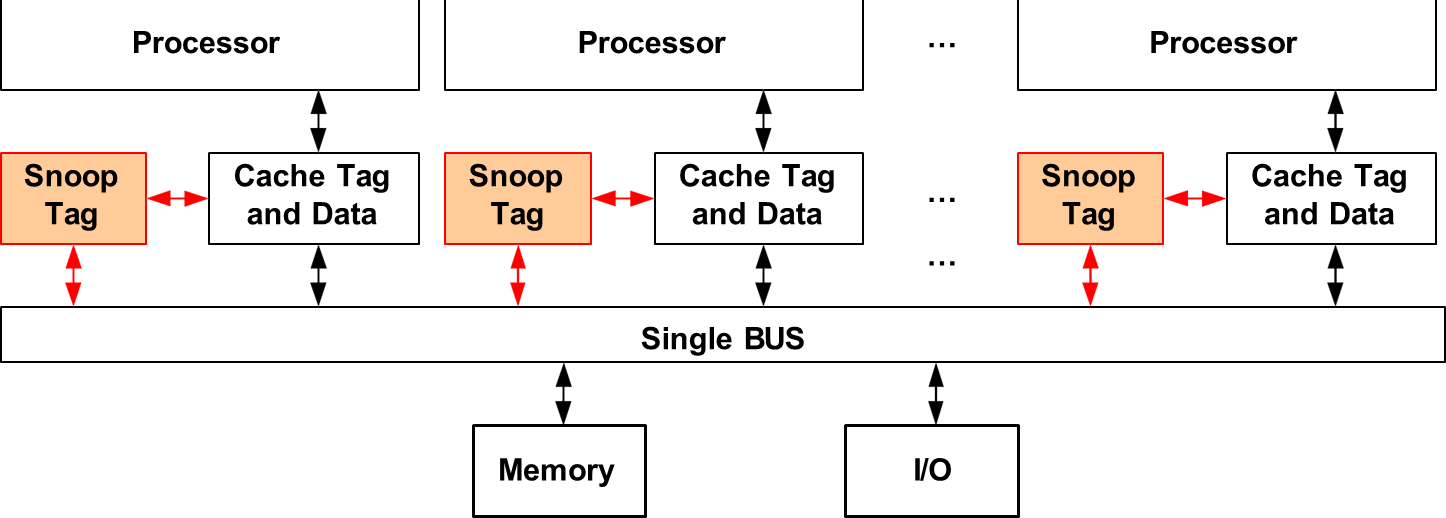

All cache controllers monitor bus transactions and respond accordingly, either by invalidating, updating, or supplying the requested data. Notably, these protocols operate without a centralized directory, relying instead on decentralized metadata and broadcast communication.

Despite their architectural simplicity, snooping protocols introduce challenges: every snooped bus transaction requires a tag lookup, which can interfere with the processor's own accesses to its cache. To mitigate this, systems often duplicate the cache tags (not the data) and give the snooping logic a dedicated port. Write operations follow either a write-invalidate or write-update strategy, each defining distinct coherence behaviors. Together, coherence and consistency mechanisms form the foundation for correctness in parallel systems.

Commercial Trends

Most commercial cache-based multiprocessors favor a write-back cache policy to reduce the volume of memory traffic. Coupled with this, the write-invalidate protocol is typically employed to manage cache coherence efficiently. This combination strikes a balance between performance and scalability, allowing more processors to operate concurrently on a single bus without saturating it with frequent write broadcasts.

One critical aspect of these protocols is write serialization. Since the bus acts as a single arbitration point, it enforces a total order on write operations, ensuring that all processors observe updates in the same sequence. However, bus arbitration itself becomes a performance bottleneck as the system scales.

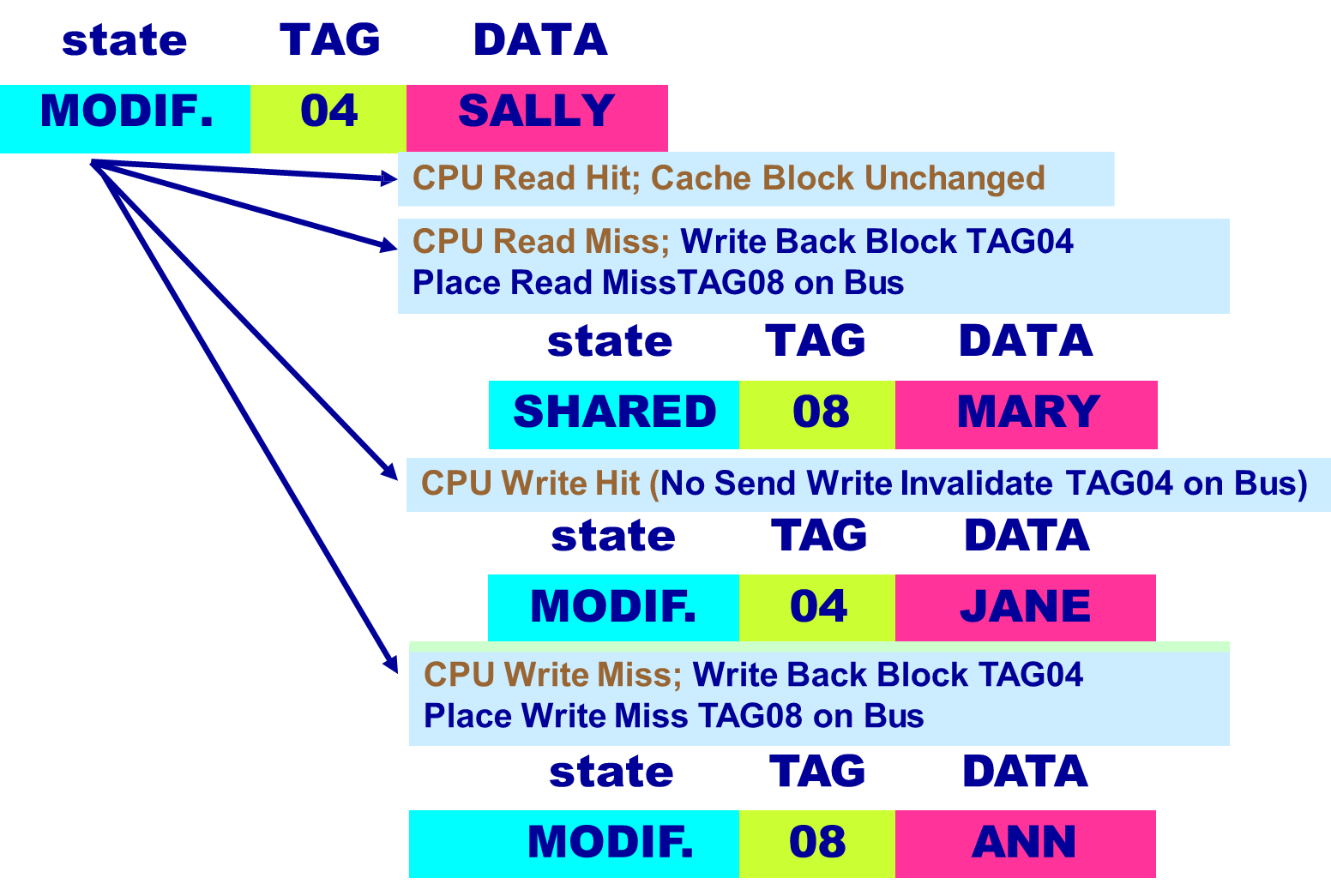

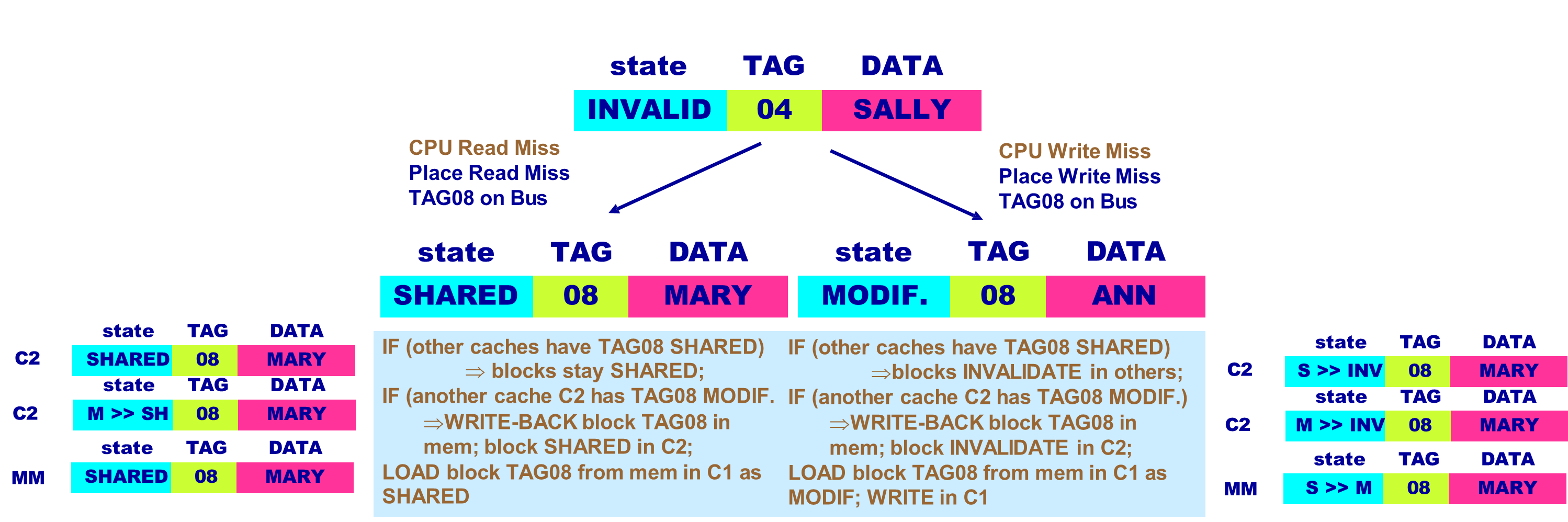

Write-Invalidate Protocol

The write-invalidate approach is based on the principle that a processor intending to modify a shared data block must first invalidate all other copies of that block residing in other caches. When a processor issues a write, it broadcasts an invalidation message across the bus, instructing all other caches to mark their copy of the block as invalid. After ensuring exclusivity, the writing processor proceeds to update its local copy. This mechanism allows multiple readers but only a single writer, thereby maintaining coherence.

Importantly, the bus is used only for the first write operation that invalidates other copies.

Subsequent writes do not generate additional bus traffic, as the writing processor holds the only valid copy. This property is advantageous in reducing bus congestion and resembles the behavior of write-back caches, where updates are accumulated locally before being written back to memory.

In the case of read misses, the snooping protocol behaves differently depending on the memory update policy. Under write-through, the memory always holds the latest version of the data. Under write-back, the protocol must locate the most recent copy of the data by snooping into caches, which may contain updated blocks not yet reflected in main memory.

Write-Update Protocol

In contrast, the write-update protocol requires that every write operation to a shared data block be broadcast to all caches that may contain a copy of that block. Upon receiving the update, each cache replaces its old value with the new one. Unlike the invalidate approach, the update scheme allows multiple caches to hold valid and consistent copies of the block after a write.

While this method reduces the time it takes for updated values to become visible across processors—thus lowering latency—it imposes heavier demands on the bus, as each write must be propagated to all relevant caches.

This behavior is analogous to write-through memory systems, where every modification is immediately sent to the backing memory and all relevant caches. As such, this protocol tends to be less bandwidth-efficient compared to write-invalidate, especially under frequent write conditions.
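The bandwidth trade-off between the two strategies can be illustrated with a toy count of bus transactions. The sketch below is not a model of any real bus: it assumes a single block, counts every miss, invalidation, and update as one transaction of equal cost, and ignores write-backs. The function name `count_bus_transactions` and the trace format are made up for this illustration.

```python
def count_bus_transactions(trace, policy):
    """Count bus transactions for accesses to a single block.

    trace  -- list of (processor_id, op) pairs, where op is "R" or "W"
    policy -- "invalidate" or "update"
    """
    if policy not in ("invalidate", "update"):
        raise ValueError(f"unknown policy: {policy}")
    holders = set()        # caches that currently hold a valid copy
    transactions = 0
    for proc, op in trace:
        if proc not in holders:
            transactions += 1          # miss: the block is fetched over the bus
            holders.add(proc)
            if op == "W" and policy == "invalidate":
                holders = {proc}       # the write miss also invalidates other copies
                continue
        if op == "W" and len(holders) > 1:
            transactions += 1          # invalidation or update broadcast
            if policy == "invalidate":
                holders = {proc}       # all other copies are discarded
    return transactions


# P0 writes five times in a row before P1 reads again.
burst = [(0, "R"), (1, "R")] + [(0, "W")] * 5 + [(1, "R")]
# P1 reads after every single write by P0 (producer/consumer pattern).
ping_pong = [(0, "R"), (1, "R")] + [(0, "W"), (1, "R")] * 5

for name, trace in (("burst", burst), ("ping_pong", ping_pong)):
    print(name,
          count_bus_transactions(trace, "invalidate"),
          count_bus_transactions(trace, "update"))
# prints: burst 4 7
#         ping_pong 12 7
```

In this simplified count, write-invalidate needs fewer transactions when one processor writes repeatedly, while write-update avoids the repeated read misses of a tightly interleaved reader. The count treats all transactions as equal, although an update carries data and an invalidation does not.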

MSI Snooping Protocol

An important example of a snooping protocol is the MSI protocol, which is based on a write-invalidate strategy combined with a write-back cache. In MSI, each cache block can exist in one of three states:

- Modified (M): The cache has the only copy, which has been altered and is not synchronized with memory. This block cannot be shared.

- Shared (S): The cache block is clean and may be shared among multiple caches. The data is consistent with memory.

- Invalid (I): The block contains no valid data.

Each block in memory is accordingly marked as either shared and up-to-date (clean), modified in a single cache (dirty), or uncached.
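As a rough illustration, the MSI states can be written as two lookup tables: one for requests from the local processor and one for messages snooped on the bus. This is a minimal sketch for a single block. The message names (`read_miss`, `write_miss`, `invalidate`) and the helper `access` are assumptions chosen for this example, not names defined by the protocol description above.

```python
from enum import Enum


class State(Enum):
    M = "Modified"
    S = "Shared"
    I = "Invalid"


# (current state, processor operation) -> (next state, bus message or None)
PROCESSOR = {
    (State.I, "read"): (State.S, "read_miss"),
    (State.I, "write"): (State.M, "write_miss"),
    (State.S, "read"): (State.S, None),
    (State.S, "write"): (State.M, "invalidate"),
    (State.M, "read"): (State.M, None),
    (State.M, "write"): (State.M, None),
}

# (current state, snooped message) -> (next state, must write the block back?)
SNOOP = {
    (State.S, "read_miss"): (State.S, False),
    (State.S, "write_miss"): (State.I, False),
    (State.S, "invalidate"): (State.I, False),
    (State.M, "read_miss"): (State.S, True),
    (State.M, "write_miss"): (State.I, True),
}


def access(states, proc, op):
    """Apply one processor access; states[i] is the block state in cache i.

    Returns (bus message or None, number of write-backs triggered).
    """
    next_state, message = PROCESSOR[(states[proc], op)]
    write_backs = 0
    if message is not None:
        for other in range(len(states)):
            if other == proc:
                continue
            # Caches in Invalid (or with no matching entry) ignore the message.
            new_state, wb = SNOOP.get((states[other], message),
                                      (states[other], False))
            states[other] = new_state
            write_backs += int(wb)
    states[proc] = next_state
    return message, write_backs
```

For example, starting from `[State.I, State.I]`, two reads leave both caches in Shared, a write by cache 0 moves it to Modified and cache 1 to Invalid, and a later read by cache 1 forces cache 0 to write the block back and return to Shared.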

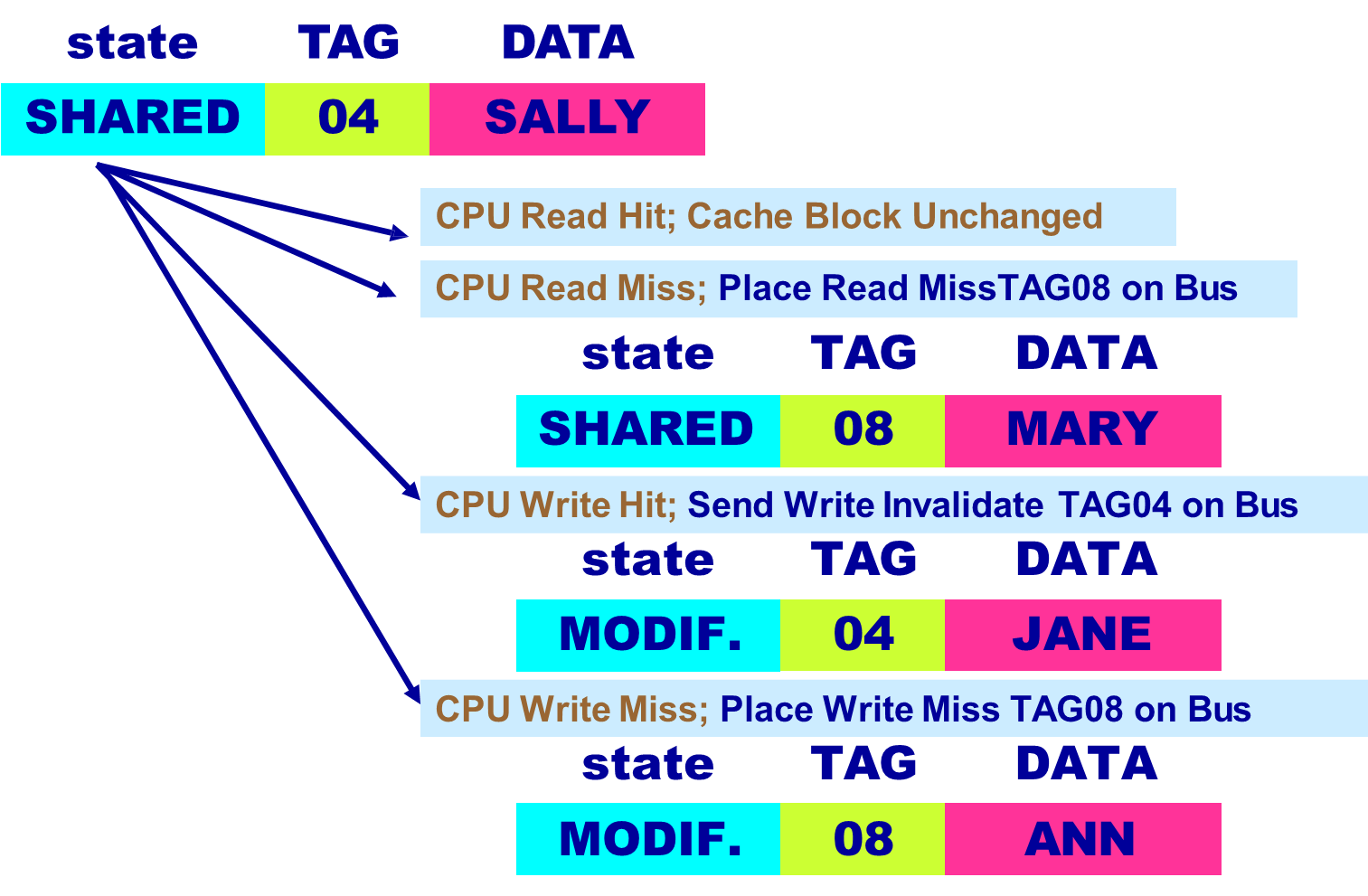

MESI Snooping Protocol

A refinement of the MSI protocol is MESI, which introduces a fourth state to enhance performance: Exclusive (E). The MESI states are defined as:

- Modified (M): The block is dirty, held exclusively, and writeable.

- Exclusive (E): The block is clean, held exclusively by one cache, and has not been modified. It allows write operations without sending an invalidation message.

- Shared (S): The block is clean and may exist in multiple caches.

- Invalid (I): The block is no longer valid.

The introduction of the Exclusive state allows a cache to upgrade a block from Exclusive to Modified silently, without generating any bus traffic, which optimizes read-modify-write sequences on blocks that are not currently shared.

One key advantage of the MESI protocol is that when a processor writes to a block in the Exclusive state, it does not need to issue an invalidation signal on the bus. This optimization reduces unnecessary communication overhead since no other cache holds a copy of that block. However, when a write occurs to a block in the Shared state—where multiple caches may hold copies—the protocol requires all other caches to invalidate their copies before the writing processor updates its local cache. This ensures that only one writable copy exists at a time, maintaining coherence while minimizing bus traffic.

The Invalid state signifies that the cache block is no longer valid and must be fetched from memory or another cache upon the next access. This detailed state management allows MESI to balance performance and coherence overhead efficiently.
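The difference from MSI can be sketched in a few lines. The code below models one block in one cache; the function names `on_processor_op` and `on_snoop`, the message names, and the `others_have_copy` flag (standing in for the bus signal that reports whether another cache holds the block) are assumptions made for this illustration.

```python
from enum import Enum


class Mesi(Enum):
    M = "Modified"
    E = "Exclusive"
    S = "Shared"
    I = "Invalid"


def on_processor_op(state, op, others_have_copy=False):
    """Return (next state, bus message or None) for a local read or write."""
    if op not in ("read", "write"):
        raise ValueError(f"unknown operation: {op}")
    if state is Mesi.I:
        if op == "read":
            # A read miss loads the block as Exclusive only if no one else has it.
            return (Mesi.S if others_have_copy else Mesi.E), "read_miss"
        return Mesi.M, "write_miss"
    if op == "read":
        return state, None            # read hit: no state change, no traffic
    if state is Mesi.S:
        return Mesi.M, "invalidate"   # other copies must be invalidated first
    return Mesi.M, None               # E -> M (silent upgrade) or M -> M


def on_snoop(state, message):
    """Return (next state, must write back?) for a message seen on the bus."""
    if state is Mesi.I:
        return Mesi.I, False
    dirty = state is Mesi.M           # only a Modified block differs from memory
    if message == "read_miss":
        return Mesi.S, dirty          # another reader appears: drop to Shared
    return Mesi.I, dirty              # write_miss or invalidate: give up the copy
```

A read miss with no other sharers followed by a write, `on_processor_op(Mesi.E, "write")`, returns `(Mesi.M, None)`: the write produces no bus message, which is the case MSI cannot avoid.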

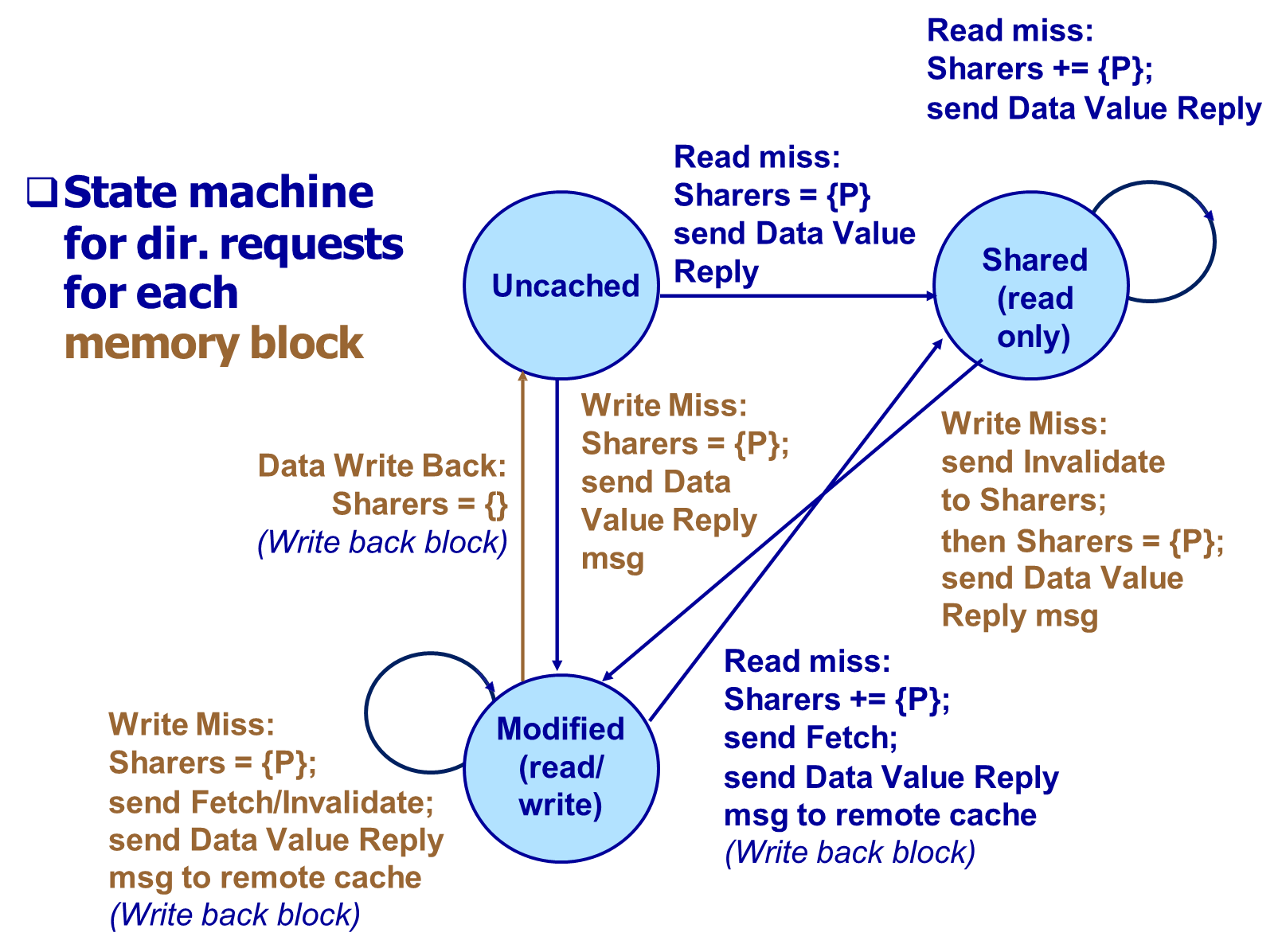

Directory-based Protocol

In directory-based protocols, each memory block is associated with a directory entry that tracks its coherence state and sharer information. The directory is either centralized (as in SMPs) or distributed across memory nodes (as in DSM). Even when the directory is distributed, the state of each block is tracked by a single home node, the node where the memory block physically resides.

Each block can exist in one of three states:

- Uncached (U): The block is not present in any processor’s cache, and the main memory holds the most recent value.

- Shared (S): One or more processors cache a clean (unmodified) copy of the block, and the memory is still up to date.

- Modified (M): Only one processor holds a copy of the block, which it has modified; the main memory is now stale.

The directory entry typically includes a bit vector that identifies which processors cache the block and which processor is the owner in the Modified state.
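A directory entry of this kind can be sketched as a state field plus an integer used as a bit vector. The class and method names below are hypothetical, and the single-letter state codes follow the U/S/M naming used above.

```python
from dataclasses import dataclass


@dataclass
class DirectoryEntry:
    state: str = "U"    # "U" (Uncached), "S" (Shared) or "M" (Modified)
    sharers: int = 0    # bit i is set when processor i caches the block

    def add_sharer(self, p: int) -> None:
        self.sharers |= 1 << p

    def remove_sharer(self, p: int) -> None:
        self.sharers &= ~(1 << p)

    def is_sharer(self, p: int) -> bool:
        return bool((self.sharers >> p) & 1)

    def sharer_list(self) -> list:
        return [p for p in range(self.sharers.bit_length()) if self.is_sharer(p)]

    def owner(self) -> int:
        # In the Modified state exactly one bit is set, and it names the owner.
        if self.state != "M":
            raise ValueError("only a Modified block has an owner")
        return self.sharers.bit_length() - 1
```

With one bit per processor, the entry grows linearly with the number of nodes, which is one reason the bit vector is described as typical rather than universal.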

When a processor initiates a memory operation, the directory at the block’s home node processes the request and updates the block’s state accordingly.

- In the Uncached (U) state, a read miss triggers a data reply from the home memory to the requesting processor, which then becomes a sharer, and the block transitions to the Shared (S) state. A write miss causes the block to transition to the Modified (M) state, with the requester becoming the sole owner.

- In the Shared (S) state, additional read misses add new sharers without changing the state. However, a write miss requires invalidating all other sharers via explicit invalidate messages. The requester becomes the new owner, and the block transitions to the Modified state.

- In the Modified (M) state, a read miss from another processor triggers a Fetch request to the owner, who then sends the updated block back to the home memory (Data Write Back), and both owner and requester become sharers; the block transitions to the Shared state. If the owner is evicting the block, it sends a Data Write Back to home, transitioning the state to Uncached. A write miss to a block in Modified state triggers a Fetch and Invalidate to the old owner, who transfers the block to the requester, who then becomes the new owner.
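The transitions above can be sketched as a small home-node directory for a single block. This is an illustrative simplification: it returns the messages as `(type, source, destination)` tuples instead of sending them, labels the home node with the string `"home"`, and does not model cache-side state. The class and method names are assumptions for this example.

```python
class Directory:
    """Directory entry and transition logic for one block at its home node."""

    def __init__(self):
        self.state = "U"      # "U", "S" or "M"
        self.sharers = set()  # in state "M" this holds exactly the owner

    def read_miss(self, p):
        msgs = []
        if self.state == "M":
            (owner,) = self.sharers
            # The home fetches the latest copy; the owner writes it back.
            msgs.append(("Fetch", "home", owner))
            msgs.append(("Data Write Back", owner, "home"))
        msgs.append(("Data Value Reply", "home", p))
        self.sharers.add(p)   # a previous owner stays on as a sharer
        self.state = "S"
        return msgs

    def write_miss(self, p):
        msgs = []
        if self.state == "S":
            for s in sorted(self.sharers - {p}):
                msgs.append(("Invalidate", "home", s))
        elif self.state == "M":
            (owner,) = self.sharers
            msgs.append(("Fetch/Invalidate", "home", owner))
            msgs.append(("Data Write Back", owner, "home"))
        msgs.append(("Data Value Reply", "home", p))
        self.sharers = {p}    # the requester becomes the sole owner
        self.state = "M"
        return msgs

    def eviction(self, p):
        """The owner replaces the block and writes it back to the home."""
        if self.state != "M" or self.sharers != {p}:
            raise ValueError("only the owner of a Modified block writes back")
        self.sharers = set()
        self.state = "U"
        return [("Data Write Back", p, "home")]
```

Calling `read_miss(2)` on a fresh `Directory` yields a single Data Value Reply and moves the block to Shared; a subsequent `write_miss(0)` yields an Invalidate to node 2 followed by a Data Value Reply to node 0, leaving the block Modified with node 0 as owner.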

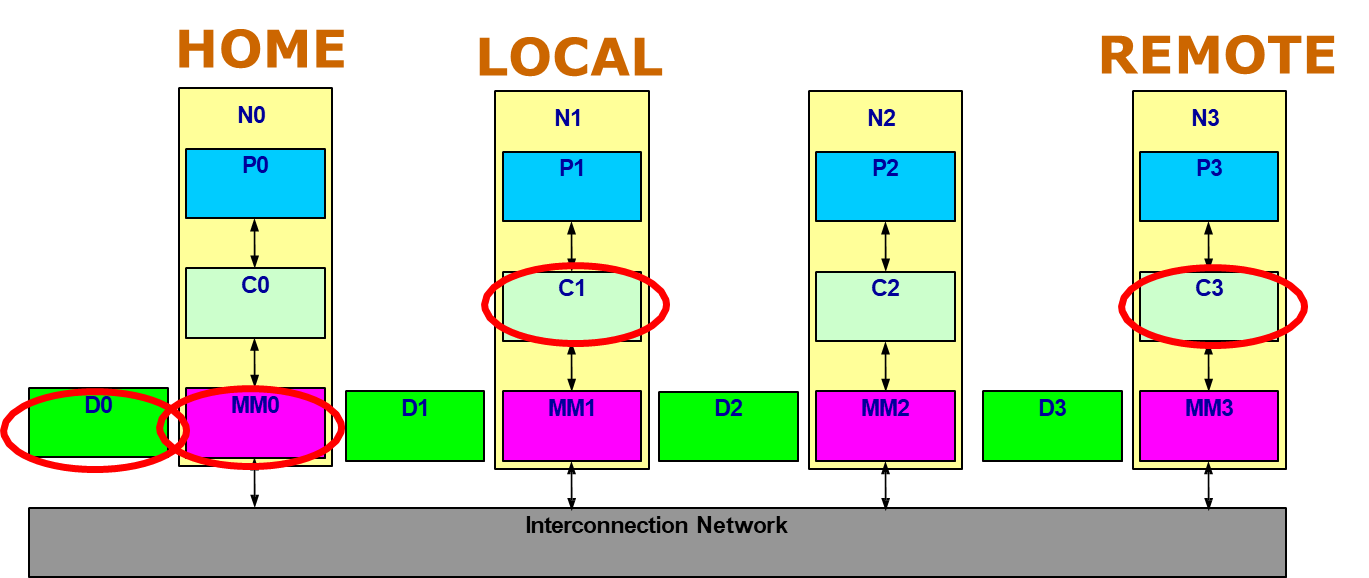

These transactions involve three roles:

- The Home node, which owns the physical memory and the directory.

- The Local node, which initiates the memory request.

- The Remote node, which may cache a copy of the block.

While any node can be both the home and local node simultaneously, the protocol must differentiate between intra-node and inter-node communication, ensuring that coherence is preserved across the system regardless of where requests originate.

Directory Protocol Messages

The directory protocol relies on a set of messages to maintain coherence and facilitate communication between nodes. These messages include:

| Message Type | Source | Destination | Msg Content | Description |
|---|---|---|---|---|
| Read Miss | Local Cache | Home Directory | P, Add | Processor P has a read miss at address Add. The local cache sends this request to the home directory to fetch the data and register P as a read sharer. |
| Data Value Reply | Home Directory | Local Cache | Data | Returns a data value from the Home memory to the requesting Local node. |
| Write Hit | Local Cache | Home Directory | P, Add | Processor P has a write hit at address Add. The local cache sends this message to the home directory to make P the exclusive owner. |
| Write Miss | Local Cache | Home Directory | P, Add | Processor P has a write miss at address Add. The local cache sends this message to the home directory to make P the exclusive owner. In response, the Home memory sends a Data Value Reply to the requesting Local node, and the Home directory sends an Invalidate message to all sharers. |
| Invalidate | Home Directory | Remote Cache | Add | Invalidates a shared copy of the data at address Add in the remote cache. |
| Invalidate | Local Cache | Home Directory | Add | Requests that the home send an Invalidate message to all remote caches that hold the block at address Add. |
| Fetch | Home Directory | Remote Cache | Add | The Home asks the remote cache that owns the modified block at address Add for the most recent copy. The owner sends the data back to its Home directory (through Data Write Back). The block state changes from Modified to Shared in both the remote cache and the Home directory. |
| Fetch/Invalidate | Home Directory | Remote Cache | Add | The Home asks the remote cache that owns the modified block at address Add for the most recent copy. The owner sends the data back to its Home directory (through Data Write Back) and invalidates the block in its cache. The block state in the Home directory stays Modified, but the owner changes. |
| Data Write Back | Remote Cache | Home Directory | Data, Add | Writes back a data value for address Add from the owner's remote cache to the Home memory. A data write-back occurs for two reasons: (1) a block is replaced in a cache and must be written back to its home memory; (2) in reply to a Fetch or Fetch/Invalidate message from the Home. |

Example of Directory-Based Protocol

Consider a directory-based protocol for a distributed shared memory system with four nodes (N0, N1, N2, N3), and consider block B1, whose directory entry is held by N1.

For the following sequence of operations, write the sequence of messages sent among the nodes and the final coherence state of block B1 in the home directory:

- Read Miss on B1 from node N2

  - Home node: N1

  - Local node: N2

  - Remote node: N1

  - Messages:

    - N2 sends a Read Miss to N1.

    - N1 responds with a Data Value Reply to N2.

    - N1 updates its directory entry for B1 to reflect that N2 is now a sharer.

  - Final State: Shared (S), with N2 recorded as a sharer.

- Write Hit on B1 from node N2

  - Home node: N1

  - Local node: N2

  - Remote node: N1, N2

  - Messages:

    - N2 sends a Write Hit to N1.

    - N1 updates its directory entry for B1 to reflect that N2 is now the exclusive owner.

  - Final State: Modified (M), with N2 as the exclusive owner.

- Write Miss on B1 from node N0

  - Home node: N1

  - Local node: N0

  - Remote node: N2

  - Messages:

    - N0 sends a Write Miss to N1.

    - N1 sends a Fetch/Invalidate message to N2, which owns B1 in the Modified state after the preceding write hit.

    - N2 sends the block back to N1 with a Data Write Back and invalidates its copy of B1.

    - N1 responds with a Data Value Reply to N0.

    - N1 updates its directory entry for B1 to reflect that N0 is now the exclusive owner.

  - Final State: Modified (M), with N0 as the exclusive owner.