Definition

Identification is the phase in which the forensic analyst identifies the evidence, the devices, and the data that are relevant to the case. This phase is crucial because it determines the success of the entire investigation. The identification phase is the second phase of the forensic process, following the acquisition phase.

In the identification phase, it is essential to apply the scientific method to the evidence. This involves not only using the right tools, but also ensuring that the logic behind the analysis is clear and that the results can be validated; the analyst should be able to perform the same analysis manually, if needed. Each experiment must also be documented so that another expert can replicate it. In other words, experiments must be repeatable: any other expert performing them on a cloned image must obtain identical results.
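Repeatability starts with showing that the clone another expert works on is identical to the original. One common way to do this is to compare cryptographic hashes of the two images. The sketch below assumes raw image files and uses SHA-256 from Python's standard library. The function names and the file names in the usage line are hypothetical.

```python
import hashlib


def image_digest(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of an image file, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        # Chunked reads keep memory use constant even for multi-gigabyte images.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def same_image(original: str, clone: str) -> bool:
    """True if both files have the same SHA-256 digest (practically, bit-for-bit identical)."""
    # Hypothetical helper: compares the digests of two image files.
    return image_digest(original) == image_digest(clone)
```

A call such as `same_image("evidence.dd", "evidence-copy.dd")` checks a working copy against the original. Recording the digest in the case notes lets any other expert confirm that they start from exactly the same data.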

To achieve this level of repeatability, it is necessary to use open-source and preferably free analysis software that is well-documented and follows a clear logic. Proprietary or restricted tools, intended only for law enforcement use, are not suitable for this purpose as they lack repeatability and require specific environments to function properly.

Identification Toolset

The most important tool in the identification phase is the operating system, and Linux is the best choice for this task. Linux has extensive native file system support: it can handle the most common file systems, such as NTFS, FAT, and EXTx. It also natively supports hot swapping of drives and devices, mounting images, and similar tasks. Moreover, Linux can serve as a virtualization host for several Windows machines running different versions. These guests are networked with the Linux host, and Samba (or another network protocol) shares drives and images between the host and the guests.

When it comes to the identification phase in digital forensics, Windows is not the ideal choice because of its inherent limitations. Windows tends to tamper with drives and modify evidence, for example by updating metadata such as the last access time when a file is opened. Additionally, Windows lacks native support for handling images and hot swapping drives, and it cannot natively mount non-Windows file systems such as EXTx.

To overcome these limitations, a recommended approach is to use Linux as the host operating system and run Windows as a guest in a virtual environment. Drive images are then handled on Linux, mounted read-only, and exported to the Windows guest using Samba. Windows-specific tools such as EnCase or FTK can then be used for the analysis.

However, there may be situations where using Samba is not feasible. In such cases, the image can be mounted as a read-only loop device under Linux, and VMware's “non-persistent” disk mode can be used so that changes made during the session are discarded when the virtual machine is powered off. These measures keep the image intact and prevent unintentional modifications during the analysis.
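The only arithmetic involved in loop-mounting a single partition out of a whole-disk image is converting a sector number into a byte offset. The partition's start sector comes from a tool such as mmls or `fdisk -l`, and mount's `offset=` option expects bytes. The sketch below assumes a raw (dd-style) image and 512-byte sectors. The function name and paths are hypothetical, and the function only builds the command without running it.

```python
def loop_mount_command(image: str, mountpoint: str, start_sector: int,
                       sector_size: int = 512) -> list[str]:
    """Build (but do not run) a read-only loop-mount command for one partition of a raw image."""
    # Hypothetical helper. Partition tables report start positions in sectors,
    # while mount's offset= option wants bytes.
    offset = start_sector * sector_size
    # ro: never write to the image; loop: attach it as a loop device; noexec: don't run binaries from it.
    options = f"ro,loop,noexec,offset={offset}"
    return ["mount", "-o", options, image, mountpoint]


cmd = loop_mount_command("evidence.dd", "/mnt/evidence", start_sector=2048)
print(" ".join(cmd))
# mount -o ro,loop,noexec,offset=1048576 evidence.dd /mnt/evidence
```

On a Linux host the list can be passed to `subprocess.run(cmd, check=True)` with root privileges. For ext3/ext4 images, practitioners often also add the `noload` option so that a dirty journal is not replayed at mount time.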

Recovery of deleted data

One of the most common tasks performed during the identification phase is the recovery of deleted files. In many cases, information or data of interest has been deleted (voluntarily or involuntarily), and the forensic analyst must recover it to proceed with the investigation. Data can be lost in several ways: the file entry can simply be deleted from the file system, the drive can be formatted or repartitioned, or the drive can be physically damaged or develop bad blocks.

File System (Unix)

The Unix filesystem is a logical method of organizing and storing large amounts of information in a way that makes it easy to manage. Here are some key features:

- Hierarchical Structure: The Unix filesystem is a tree-like structure that starts with a single directory called the root directory, denoted by a forward slash (/) character. All other files and directories are “descendants” of root.

- Files and Directories: All data in Unix is organized into files. All files are organized into directories. These directories are organized into a tree-like structure called the filesystem.

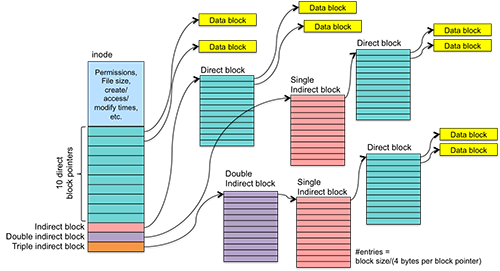

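- Inodes: Each file and directory in Unix is described by an inode, a data structure identified by a number (the inode number) that is unique within its filesystem. The inode holds the file's metadata (owner, permissions, timestamps such as the time of last access, size, etc., but not its name) and pointers to the data blocks that store the file's contents.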

- Hard Links: Multiple names (directory entries) in the filesystem may refer to the same inode. Each such name is called a hard link, and the inode keeps a count of how many links point to it.

- File Types: The original Unix filesystem supported three types of files: ordinary files, directories, and “special files”, also termed device files. BSD and System V each added a file type for interprocess communication: BSD added sockets, and System V added FIFOs (named pipes).

- Symbolic Links: Unix filesystem also supports symbolic links, which are pointers to other files or directories. This allows for flexible organization of files and directories without having to physically move them around.

- Permissions: The Unix filesystem uses a set of permissions to control access to files and directories. Each file and directory has an owner and a group associated with it. Read, write, and execute permissions can be set separately for the owner, the group, and all other users.

Overall, the Unix filesystem is a robust and flexible system that has been used for decades and continues to be the foundation for many modern operating systems.
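Inodes, hard links, and symbolic links can be observed directly with Python's `os.lstat` on a Linux or other Unix host. The sketch below is illustrative only: the function and file names are made up, and everything lives in a temporary directory. It shows that a hard link shares the original's inode and raises its link count, while a symbolic link is a separate inode of its own.

```python
import os
import stat
import tempfile


def link_demo() -> dict:
    """Create a file, a hard link and a symlink in a scratch directory and compare their inodes."""
    # Hypothetical demo; file names are arbitrary.
    with tempfile.TemporaryDirectory() as d:
        original = os.path.join(d, "notes.txt")
        hard = os.path.join(d, "notes-hardlink.txt")
        soft = os.path.join(d, "notes-symlink.txt")
        with open(original, "w") as f:
            f.write("evidence\n")
        os.link(original, hard)     # a second directory entry for the same inode
        os.symlink(original, soft)  # a separate inode that stores the target path
        # lstat() does not follow symlinks, so we see the link itself.
        o, h, s = (os.lstat(p) for p in (original, hard, soft))
        return {
            "hard_link_shares_inode": o.st_ino == h.st_ino,
            "link_count": o.st_nlink,
            "symlink_is_separate_inode": s.st_ino != o.st_ino and stat.S_ISLNK(s.st_mode),
            "permissions": stat.filemode(o.st_mode),  # e.g. "-rw-r--r--", depending on umask
        }


print(link_demo())
```

Note that the file name appears nowhere in the `lstat` result. Names live only in directory entries, which is why one inode can carry several of them.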

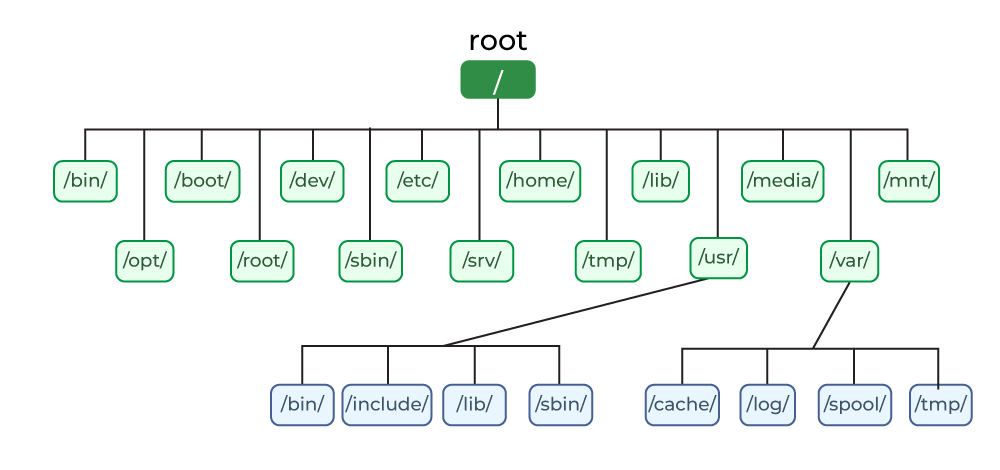

The Linux filesystem is based on the Unix filesystem, with some modifications and enhancements. The root directory is /, and inside it there are directories like /bin, /etc, /home, /lib, /mnt, /opt, /proc, /root, /sbin, /tmp, /usr, /var, and /dev, each one with a specific purpose.

When a file is deleted, its entry in the file system is marked as “deleted” rather than erased. The entry is removed only when the file system structure is rewritten or rebalanced, which can happen at an unpredictable time; until then, metadata about the file can still be found. After a deletion, several scenarios are possible:

- Both the file entry and the metadata are still present, and the file can be recovered.

- The file entry is deleted, but the metadata is still present, and the file can be recovered.

- The file entry is present, but the metadata is deleted, and the file cannot be recovered through the file system structures alone.

- Both the file entry and the metadata are deleted, and the file cannot be recovered through the file system structures alone.

Furthermore, the actual blocks allocated to the file are not immediately overwritten either. They will be overwritten when the file system decides to reuse those blocks for storing new data. Until this happens, the original blocks containing the file’s data can still be retrieved from the disk.

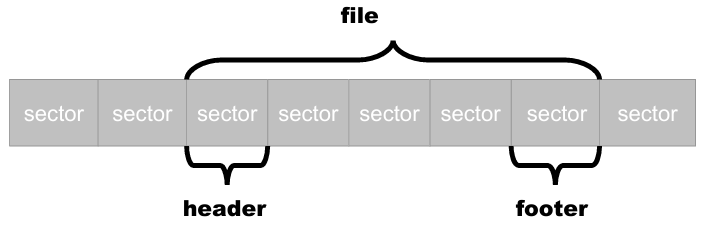

On disk, the space allocated to a file is a set of clusters, and each cluster consists of a set of sectors. Since allocation happens in whole clusters, the file's data usually fills only part of the last cluster. The valid sectors hold the actual data, and the remaining space up to the end of the allocation is the slack space. Fragments of previously deleted data can remain in the slack space, and these fragments can be recovered using a technique called file carving.
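The size of the slack space follows directly from the allocation unit. The sketch below assumes 512-byte sectors and 8 sectors per cluster, which gives 4 KiB clusters (a common but not universal default). The function name is hypothetical.

```python
def allocation(file_size: int, sector_size: int = 512, sectors_per_cluster: int = 8) -> dict:
    """Work out how much of a file's allocation is real data and how much is slack."""
    # Hypothetical helper; the sector and cluster sizes are assumed defaults.
    cluster_size = sector_size * sectors_per_cluster
    clusters = -(-file_size // cluster_size)     # ceiling division: only whole clusters are allocated
    allocated = clusters * cluster_size
    slack = allocated - file_size                # bytes between end of data and end of last cluster
    return {
        "clusters": clusters,
        "allocated_bytes": allocated,
        "slack_bytes": slack,
        "unused_sectors": slack // sector_size,  # slack sectors that hold no file data at all
    }


print(allocation(10_000))
# {'clusters': 3, 'allocated_bytes': 12288, 'slack_bytes': 2288, 'unused_sectors': 4}
```

In this example the file fills 19 sectors completely and 272 bytes of a 20th. The remaining 240 bytes of that sector, plus 4 whole sectors, form the 2,288 bytes of slack that may still hold older data.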

Definition

The file carving technique recovers deleted files from a disk by scanning the entire drive as a single bitstream, locating the headers and footers of interesting file types, and extracting the data in between. If the file is not too fragmented, it can be recovered with this technique. The type of file can be determined from the information stored in its header and footer.
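The header/footer approach fits in a few lines. The sketch below is a simplified illustration that handles JPEG files only and assumes each file is stored contiguously. FF D8 FF (start of image followed by the next marker) and FF D9 (end of image) are the standard JPEG markers. The function name and the 20 MiB size cap are illustrative choices, not taken from any particular tool.

```python
JPEG_HEADER = b"\xff\xd8\xff"  # start-of-image marker followed by the next marker's 0xFF
JPEG_FOOTER = b"\xff\xd9"      # end-of-image marker


def carve(data: bytes, header: bytes = JPEG_HEADER, footer: bytes = JPEG_FOOTER,
          max_size: int = 20 * 1024 * 1024) -> list[tuple[int, bytes]]:
    """Return (offset, candidate_file) for each header...footer run found in a raw bitstream."""
    # Hypothetical helper; assumes contiguous (unfragmented) files.
    found = []
    start = data.find(header)
    while start != -1:
        # Look for the first footer after the header, but only within max_size bytes.
        end = data.find(footer, start + len(header), start + max_size)
        if end == -1:
            # No footer in range: likely truncated or fragmented, so skip this header.
            start = data.find(header, start + 1)
            continue
        end += len(footer)
        found.append((start, data[start:end]))
        start = data.find(header, end)  # resume scanning after the carved file
    return found


raw = b"junk" + b"\xff\xd8\xff\xe0" + b"AAAA" + b"\xff\xd9" + b"more" + b"\xff\xd8\xff" + b"no footer"
for offset, blob in carve(raw):
    print(offset, len(blob))
# 4 10
```

Stopping at the first footer is the simplest policy, but it is not always correct. A JPEG with an embedded EXIF thumbnail contains an inner FF D9, so this sketch would truncate it. Real carvers such as Foremost add format-specific logic and size limits for this reason.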

Fragmentation, encryption, and compression are real issues for file carving. On modern large drives fragmentation is relatively uncommon, and fragmented files are often split into only two fragments. A file carving tool may be able to detect encryption and compression, but they make recovery considerably harder because they hide the recognizable headers and content patterns that carving relies on.
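Byte entropy is one heuristic commonly used to flag such regions, though it is an assumption here and not necessarily what a given carving tool does. Encrypted data, and to a lesser degree compressed data, looks close to random and approaches 8 bits per byte. The sketch below assumes a 4 KiB block size and a 7.5 threshold. Both values are illustrative rather than standard, and the function names are hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(block: bytes) -> float:
    """Entropy in bits per byte: 0.0 for constant data, up to 8.0 for uniformly distributed bytes."""
    if not block:
        return 0.0
    n = len(block)
    # Sum of p * log2(1/p) over the byte values that actually occur.
    return sum((c / n) * math.log2(n / c) for c in Counter(block).values())


def high_entropy_blocks(data: bytes, block_size: int = 4096, threshold: float = 7.5):
    """Yield offsets of blocks that look encrypted or compressed (heuristic only)."""
    # Hypothetical helper; block_size and threshold are illustrative assumptions.
    for off in range(0, len(data), block_size):
        if shannon_entropy(data[off:off + block_size]) >= threshold:
            yield off
```

High entropy alone does not distinguish encryption from compression. JPEG and ZIP data also score high, so flagged blocks are candidates for closer inspection rather than conclusions.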

There are many free software tools that can be used to recover deleted files from a disk. Some of the most common are:

- The Sleuth Kit (TSK) and Autopsy (data recovery under Linux)
- Foremost (file recovery through file carving)
- gpart and TestDisk (partition recovery)
- PhotoRec (file carving; despite its name, it recovers many file types besides photos)

Antiforensic Techniques

Definition

Antiforensics are techniques that aim to confuse the analyst, lead them off track, or defeat the tools and techniques that analysts use.

Antiforensic techniques can be classified into two categories: transient antiforensics and definitive antiforensics. Transient antiforensics aim to create confusion and mislead analysts during the identification phase of an investigation; these techniques can be defeated if they are detected. Definitive antiforensics, on the other hand, are destructive: they are designed to destroy evidence, make it impossible to acquire, or undermine its reliability. Definitive antiforensics pose a greater challenge for forensic analysts because they can permanently hinder the investigation.

During the investigation, antiforensic techniques can exploit two critical failure points. The first is the acquisition phase, where evidence is acquired and cloned; here definitive antiforensics can be used to destroy the evidence or make it impossible to acquire. The second is the identification phase, where evidence is analyzed; here transient antiforensics can be used to confuse the analyst and divert them from the correct line of investigation. Forensic analysts must be aware of these techniques and take appropriate measures to counteract them.

| Technique | Type | Description |
|---|---|---|
| Timeline tampering | Definitive | Modify events by making them appear separated or close together, randomizing them, or moving them completely out of scope. |
| Countering file recovery | Definitive | Secure deletion, wiping unallocated space, encryption, or virtual machine usage. |
| Fileless attacks | Definitive | Inject malware into a process's memory space, giving the attacker control without writing anything to disk. |
| Filesystem insertion and subversion | Transient | Place data where there is no reason to look for it, in particular inside filesystem metadata. |
| Log analysis | Transient | Inject content into the logs to make the analyst's scripts fail, or even to exploit them. |
| Partition table tricks | Transient | Partitions not correctly aligned, multiple extended partitions, or a high number of logical partitions inside an extended partition. |

Timeline Tampering

Timeline tampering is an antiforensic technique that manipulates timestamps to create a false timeline of events. It lets attackers alter the timestamps associated with file modification, access, and change, making these events appear separated, closer together, or completely out of scope.

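Forensic analysis tools typically rely on MAC(E) values to construct timelines:

- Modified
- Accessed
- Changed: on Unix, the inode change time; in NTFS terminology the C usually stands for Created.
- Entry Modified: specific to NTFS, the modification time of the file's MFT entry.

Attackers can deceive forensic investigators by modifying these timestamps. Timestomp targets the NTFS MACE values. On Unix systems, touch sets the access and modification times, and the change time is reset to the current time as a side effect.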
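On Unix-like systems, `os.utime` reproduces what touch does by setting a file's access and modification times directly. The sketch below assumes a Linux host, since on Windows Python's `st_ctime` has historically meant creation time. The helper names are hypothetical. It shows that atime and mtime are simply overwritten, while ctime is reset to the current time by the change itself. This can leave a visible gap between the forged times and the change time.

```python
import os
import tempfile
from datetime import datetime, timezone


def backdate(path: str, when: datetime) -> None:
    """Set a file's access and modification times to `when`, much as `touch -d` would."""
    ts = when.timestamp()
    os.utime(path, (ts, ts))  # (atime, mtime); there is no argument for ctime


def forge_and_inspect(when: datetime) -> tuple[float, float, float]:
    """Backdate a scratch file and return its (atime, mtime, ctime) afterwards."""
    # Hypothetical demo helper operating on a temporary file.
    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        backdate(path, when)
        st = os.stat(path)
        return st.st_atime, st.st_mtime, st.st_ctime
    finally:
        os.remove(path)


atime, mtime, ctime = forge_and_inspect(datetime(2000, 1, 1, tzinfo=timezone.utc))
print(datetime.fromtimestamp(mtime, timezone.utc))  # 2000-01-01 00:00:00+00:00 (the forged date)
print(datetime.fromtimestamp(ctime, timezone.utc))  # the real time at which the change was made
```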

Timeline tampering poses a significant challenge for forensic investigations. Once timestamps are altered, the original values are overwritten, and the changes can be difficult to detect. This manipulation can obscure the true sequence of events, complicating the accurate reconstruction of incident timelines and potentially hindering the overall forensic analysis.

Countering File Recovery

Countering file recovery is an antiforensic technique that aims to prevent the retrieval of deleted files. When files are deleted, their contents may still persist in unallocated sectors on the disk, and several measures can be taken to prevent their recovery.

One approach is secure deletion, which involves using reliable tools to perform thorough data deletion, ensuring that the remnants of the deleted files are overwritten and irretrievable. Another method is wiping unallocated space, which involves overwriting the unused sectors on the disk to eliminate any traces of deleted files.
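Secure deletion tools such as GNU shred work by overwriting the file's bytes in place before unlinking it. The sketch below is a simplified illustration of that idea; the function names and the single-pass default are illustrative assumptions. For analysts it is mainly useful for understanding the limits of the approach.

```python
import os


def overwrite(path: str, passes: int = 1) -> None:
    """Overwrite a file in place with random passes, then a final zero pass, syncing each one."""
    # Hypothetical helper; holds the whole file in memory, which is fine only for a sketch.
    size = os.path.getsize(path)
    with open(path, "r+b") as f:
        for i in range(passes + 1):
            # Random data on every pass except the last, which writes zeros.
            data = os.urandom(size) if i < passes else bytes(size)
            f.seek(0)
            f.write(data)
            f.flush()
            os.fsync(f.fileno())  # push the pass past the page cache to the device


def secure_delete(path: str, passes: int = 1) -> None:
    """Overwrite, then unlink. Only the bytes up to the file's size are touched."""
    overwrite(path, passes)
    os.remove(path)
```

The sketch only touches bytes up to the file's size, so the slack space at the end of the last cluster keeps whatever it held before. Journaling or copy-on-write file systems and SSDs with wear leveling add a further limit. There, the overwrite may land on different physical blocks than the original data, which is why the GNU shred documentation warns that overwriting in place is not guaranteed to be effective on such systems.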

Encryption is another effective technique for countering file recovery: if files are encrypted before deletion, any recovered remnants are unreadable without the decryption key. Virtual machines can also help prevent file recovery. When applications run and file operations take place inside a virtual machine, most remnants are confined to the virtual machine's disk files. These files can then be wiped or destroyed as a whole, so remnants are not left scattered across the host system.

Fileless Attacks

Fileless attacks are definitive antiforensic techniques that inject malware into a process's memory space, giving the attacker control without writing anything to disk. In this way, the attacker can add threads, execute commands, or perform other malicious activities without leaving traces on the disk. As a result, the evidence is lost when the machine is shut down.

The only way to recover this evidence is memory forensics: capturing the machine's memory with a tool like memdump and analyzing it with a framework such as Volatility to find traces of the malware.
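Tools like Volatility analyze a captured memory image, which is beyond a short example. On a live Linux system, one simple triage heuristic is to look in /proc/<pid>/maps for executable memory that is not backed by an ordinary file. This is an assumption here and not Volatility's method. Candidates include anonymous executable mappings, memfd-backed mappings, and mappings of files that have since been deleted. The function names in the sketch below are hypothetical. Legitimate JIT compilers (browsers, the JVM) also create anonymous executable memory, so hits are leads rather than proof.

```python
def suspicious_regions(maps_text: str) -> list[tuple[str, str, str]]:
    """Return (address range, permissions, path) for executable mappings not backed by an ordinary file."""
    # Hypothetical triage heuristic, not a complete memory-forensics method.
    hits = []
    for line in maps_text.splitlines():
        # Format: address perms offset dev inode [pathname]; pathname may be absent.
        fields = line.split(maxsplit=5)
        if len(fields) < 5:
            continue
        addr, perms = fields[0], fields[1]
        path = fields[5].strip() if len(fields) == 6 else ""
        if "x" not in perms:
            continue
        # Anonymous, memfd-backed, or deleted-file mappings that are executable are worth a look.
        if path == "" or path.startswith("/memfd:") or path.endswith("(deleted)"):
            hits.append((addr, perms, path))
    return hits


def scan_process(pid: int) -> list[tuple[str, str, str]]:
    """Read /proc/<pid>/maps on a live Linux system (needs permission to inspect that process)."""
    with open(f"/proc/{pid}/maps") as f:
        return suspicious_regions(f.read())
```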

Filesystem Insertion And Subversion Technologies

Filesystem insertion and subversion technologies are transient antiforensic techniques that place data where there is no reason to look for it, in particular inside filesystem metadata. This hides the data from the forensic analyst and makes it difficult to find.

One of the most common techniques is to place data in the padding and metadata structures of the filesystem that are ignored by forensic tools. By using this technique, the attacker can store up to 1MB of data on a typical filesystem, without being detected by the forensic tools. Other techniques include writing data in bad block inodes, adding a fake EXT3 journal in an EXT2 partition, or using directory inodes to store data (where the attacker can store an unlimited amount of data); even the partition table can be modified to store data that can be recovered only by using specific tools.

Log Analysis

Log analysis is a type of transient antiforensic technique that involves the manipulation of log files to disrupt the functionality of the analyst’s scripts. By injecting misleading or malicious content into log files, attackers can cause the scripts to fail, particularly those that rely on regular expressions for parsing and extracting information from logs.
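Consider an attacker who controls a field that ends up in a log line, such as a username typed at a login prompt. A loosely written pattern can then be steered into extracting the wrong value. The log format, the example addresses, and the function names below are hypothetical. The sketch contrasts a naive pattern with one that anchors on the fixed end of the line and validates the extracted address.

```python
import ipaddress
import re

# Hypothetical log format, loosely modeled on SSH authentication-failure messages.
NAIVE = re.compile(r"Failed password for (\S+) from (\S+)")
STRICT = re.compile(r"^Failed password for (.+) from (\S+) port (\d+) ssh2$")


def parse_naive(line: str):
    """Take the first 'for X from Y' pair: easy to steer with a crafted username."""
    m = NAIVE.search(line)
    return (m.group(1), m.group(2)) if m else None


def parse_strict(line: str):
    """Anchor on the fixed tail of the line, validate the IP, and flag odd usernames."""
    m = STRICT.match(line)
    if not m:
        return None
    user, ip = m.group(1), m.group(2)
    ipaddress.ip_address(ip)  # raises ValueError if the extracted field is not an IP address
    suspicious = " from " in user or not user.isprintable()
    return user, ip, suspicious


# The attacker typed "root from 10.0.0.99" as the username.
line = "Failed password for root from 10.0.0.99 from 203.0.113.5 port 22 ssh2"
print(parse_naive(line))   # ('root', '10.0.0.99')
print(parse_strict(line))  # ('root from 10.0.0.99', '203.0.113.5', True)
```

With the crafted username, the naive parser attributes the failure to an address the attacker chose. The stricter parser recovers the real source and flags the username. Anchoring and validation reduce this class of problem but do not eliminate it.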

The purpose of log analysis as an antiforensic technique is twofold. Firstly, it aims to create confusion and hinder the effectiveness of the analyst’s investigation by introducing false or misleading information into the logs. This can lead the analyst down the wrong path or divert their attention away from the actual events being investigated. Secondly, log analysis can be used as a means of exploiting the scripts and tools used by analysts. By injecting specially crafted content into the logs, attackers can take advantage of vulnerabilities or weaknesses in the scripts, potentially gaining unauthorized access or executing arbitrary code within the analyst’s environment.

It is important for forensic analysts to be aware of the potential for log analysis as an antiforensic technique and take appropriate measures to mitigate its impact. This may include implementing robust log monitoring and analysis processes, using secure and validated log analysis tools, and regularly updating and patching the scripts and systems used for log analysis.

Partition Table Tricks

Partition table tricks are transient antiforensic techniques that involve modifying the partition table of a disk to hide data from forensic analysts.

One technique is creating partitions that are not correctly aligned. While some partition restore tools can read them, they may go unnoticed by forensic analysts. Another method is adding multiple extended partitions to the disk. While Windows and Linux can handle them easily, many forensic tools struggle with this. Additionally, generating a high number of logical partitions within an extended partition can cause forensic tools to crash or fail to read the disk.
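Some of these tricks can be spotted by decoding the partition table directly instead of trusting a tool's summary. The sketch below handles only the four primary entries of a classic MBR. It does not follow the chain of extended boot records, so it cannot count logical partitions. The function names and the 2048/63-sector alignment rule are illustrative assumptions.

```python
import struct

EXTENDED_TYPES = {0x05, 0x0F, 0x85}  # MBR type codes used for extended partitions


def parse_mbr(sector0: bytes) -> list[dict]:
    """Decode the four primary entries of a classic MBR partition table."""
    if len(sector0) < 512 or sector0[510:512] != b"\x55\xaa":
        raise ValueError("missing 0x55AA boot signature")
    entries = []
    for i in range(4):
        off = 0x1BE + 16 * i  # the partition table starts at byte 446
        ptype = sector0[off + 4]
        start, count = struct.unpack_from("<II", sector0, off + 8)  # little-endian LBA start, length
        if ptype != 0:
            entries.append({"slot": i, "type": ptype, "start": start, "sectors": count})
    return entries


def audit(entries: list[dict], alignment: int = 2048) -> list[str]:
    """Flag a few of the oddities described above (hypothetical heuristics, not proof of tampering)."""
    warnings = []
    extended = [e for e in entries if e["type"] in EXTENDED_TYPES]
    if len(extended) > 1:
        warnings.append(f"{len(extended)} extended partitions in the primary table")
    for e in entries:
        # 2048-sector (1 MiB) and 63-sector (legacy track) boundaries are the usual starts.
        if e["start"] % alignment and e["start"] % 63:
            warnings.append(f"slot {e['slot']}: start sector {e['start']} is not aligned")
    ordered = sorted(entries, key=lambda e: e["start"])
    for a, b in zip(ordered, ordered[1:]):
        if a["start"] + a["sectors"] > b["start"]:
            warnings.append(f"slots {a['slot']} and {b['slot']} overlap")
    return warnings
```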