Our exploration of Artificial Intelligence began with agents that solve problems through search. This paradigm involves defining a state space and identifying a sequence of actions that navigate from an initial state to a goal state. The selection of an appropriate search algorithm is contingent upon the problem’s characteristics. This classification can be understood by considering the nature of the solution required and the costs associated with actions.

Some problems require only the identification of a goal state, where the path taken to reach it is irrelevant. These are frequently modeled as Constraint Satisfaction Problems (CSPs), exemplified by the 8-queens problem or complex scheduling. For such problems, algorithms like Hill Climbing or Depth-First Search (DFS) are often employed. In this context, evaluation functions or costs are typically associated with the states themselves, such as a heuristic estimating the number of conflicts in a given configuration. Conversely, many problems require finding a specific path to a solution, where the sequence of actions is the critical output. This is common in robotics path planning or solving the 8-puzzle.

When a path is required, a further distinction is made based on action costs. If actions have varying costs, the objective is typically to find the optimal path, meaning the one with the lowest cumulative cost. Algorithms like *[[2.3 - Informed Search Strategies#a-search|A]]**, Uniform Cost Search, and Best-First Greedy Search are designed for this, as they explicitly incorporate the costs associated with actions (state transitions). If, however, all actions have uniform (or no) cost, the objective simplifies to finding any valid path, often the shortest in terms of the number of steps. Breadth-First Search (BFS), Depth-First Search (DFS), and Iterative Deepening Search (IDS) are standard algorithms for this class of problem.

Parallel to the choice of algorithm is the crucial decision of how to represent a state. We have observed a progression in the expressive power of state representations.

- The simplest form, Atomic States, treats a state as an indivisible entity, permitting only basic equality or inequality comparisons.

- A more sophisticated approach uses Factored States, which represent a state as a collection of variable-value assignments (e.g.,

Location=Arad,Weather=Sunny). This structure is fundamental to CSPs and allows for more detailed analysis of a state’s properties. - We now transition to Structured States, the most expressive representation. Here, a state is described as a complex collection of objects and the explicit relationships between them. Operating on such states requires moving beyond simple comparisons to complex processes like inference and reasoning, which form the foundation of logical agents.

The Logical Agent

A logical agent represents a paradigm shift, designing an agent whose state representation captures the fundamental truths about its world. This type of agent utilizes formal logic to represent its knowledge of the world, its states, and the effects of its actions. It then employs logical reasoning, or inference, to exploit this knowledge and determine its behavior. To achieve this, the agent uses logical sentences, which are well-formed formulas expressed in a formal language such as Propositional Logic (PL) or the more expressive First-Order Logic (FOL).

The Knowledge Base (KB)

The central component of a logical agent is its Knowledge Base (KB).

Definition

A Knowledge Base (KB) is defined as a set of logical sentences that encapsulate the agent’s knowledge.

This architecture embodies a declarative approach to agent design. In contrast to an imperative approach (where behavior is programmed step-by-step), a declarative agent is simply informed, or TELLed, what it needs to know. An internal, general-purpose reasoning mechanism then infers the appropriate course of action.

Two fundamental operations manipulate the KB.

- The

TELLoperation is used to add a new sentence, or piece of knowledge, to the KB. - The

ASKoperation is used to query the KB, determining if a particular sentence is entailed (i.e., logically follows) from the knowledge the KB already contains. ThisASKoperation inherently implies a reasoning or inference process.

The logic programming language Prolog provides a practical illustration of this KB structure.

% Using TELL, we add facts and rules to the KB:

male(yasin).

female(ruba).

male(yusuf).

% rules

person(X) :- female(X). % A person is a female.

person(X) :- male(X). % A person is a male.

mortal(X) :- person(X). % All persons are mortal.

% Using ASK, we can query the KB:

?- mortal(yasin). % Is Yasin mortal?

% Prolog would answer: true.

?- mortal(ziggy). % Is Ziggy mortal?

% Prolog would answer: false (based on the current KB). Facts (e.g., male(yasin)., female(ruba).) and rules (e.g., person(X) :- female(X)., mortal(X) :- person(X).) are added to the KB using the TELL mechanism. Subsequently, the ASK mechanism can be used to pose queries, such as ?- mortal(yasin).. The Prolog engine, acting as the inference mechanism, would evaluate this query against its KB and return true. A query like ?- mortal(ziggy). would return false, as it cannot be proven from the current knowledge.

Architecture and Advantages of Logical Agents

A logical agent functions through a continuous cycle: it perceives its environment, updates its internal knowledge, reasons about the world, and then acts.

function KB-AGENT(percept) returns an action

static: KB, a knowledge base

t, a counter for time, initially 0

TELL(KB, MAKE-PERCEPT-SENTENCE(percept, t))

action ← ASK(KB, MAKE-ACTION-QUERY(t))

TELL(KB, MAKE-ACTION-SENTENCE(action, t))

t ← t + 1

return action

The KB-AGENT function illustrates this loop: at each time step TELLs its KB a new sentence representing its current percept. It then ASKs the KB what action it should take, using a query formulated for time TELLs the KB that it has performed that action, increments the time step, and returns the action.

This architecture reveals a powerful and fundamental design principle: the separation of knowledge from the reasoning mechanism.

| Component | Description | Nature |

|---|---|---|

| Knowledge Base | Contains domain-specific facts and rules about the world. | Domain-Specific |

| Inference Engine | A general-purpose algorithm that can deduce new facts from the KB. | Generic Code |

This separation means a single, robust inference engine can be developed once and then applied to myriad different domains simply by providing a new, domain-specific KB.

This declarative, knowledge-based approach offers several significant advantages over the problem-solving agents discussed previously. It allows for a High-Level Description of the agent, defining it by what it knows rather than the specific implementation of its search algorithm. This enhances modularity and reusability. It also provides an Economy of Representation; the agent only needs to store a core set of facts and rules, as it can derive a vast amount of implicit information through inference rather than requiring every possible fact to be explicitly stored. Finally, this design affords profound Flexibility. A search-based agent is typically configured to answer one specific question (“What is the path from

Representing the World with Formal Logic

To construct a Knowledge Base (KB) capable of reasoning, an agent requires a formal language. Logic provides the syntax and semantics for this language, enabling the precise representation of complex knowledge about the world. This representation is built from fundamental components. The world is considered to be populated by objects, which are the entities of interest (e.g., people, houses, numbers, theoretical concepts). Statements about these objects, known as sentences, are assertions that can be evaluated as either true or false.

Sentences come in two forms.

- Atomic sentences are the most basic, indivisible statements that can be made about the world (e.g., “The engine is getting gas”).

- Complex sentences are then constructed by combining one or more atomic sentences using logical connectives (e.g., AND (

), OR ( ), NOT ( ), IMPLIES ( )).

Within the KB, we distinguish between facts and rules.

- Facts are specific atomic sentences asserted to be true, representing ground-level knowledge (e.g.,

M(Lulu, Fifi), or “Lulu is the mother of Fifi”). - Rules, by contrast, are general statements about how the world works, often expressed as implications, that describe relationships and constraints (e.g., “If

is the mother of , then is a parent of ”).

Example 1: Propositional Logic (PL)

The choice of logical language dictates the expressive power of the KB. Propositional Logic (PL) is a simple logic where the fundamental unit is the proposition, or atomic sentence. Each atomic sentence is treated as an indivisible symbol (e.g.,

A,B,C), and the logic describes how these symbols can be combined.Consider a simple car diagnostic system with the following rules:

- IF the engine is getting gas, AND the engine will turn over, THEN the problem is spark plugs.

- IF the engine does not turn over, AND the lights do not come on, THEN the problem is battery or cables.

- IF the engine does not turn over, AND the lights do not come on, THEN the problem is the starter motor.

- IF there is gas in the fuel tank, AND there is gas in the carburetor, THEN the engine is getting gas.

In PL, we would assign a unique symbol to each atomic proposition:

A: “the engine is getting gas”B: “the engine will turn over”C: “the problem is spark plugs”D: “the lights do not come on”E: “the problem is battery or cables”The rules are then translated into logical sentences:

While useful, PL is fundamentally limited. It has a “flat” view of the world, as it cannot represent or generalize over objects. The symbol

Ahas no internal structure; it simply stands for the entire sentence. We cannot use PL to express a rule like “If any component is faulty, the system is not operational,” as it lacks the machinery to talk about “any component.”

Example 2: First-Order Logic (FOL)

First-Order Logic (FOL), also known as predicate logic, extends PL to overcome these limitations. FOL has a richer ontology, allowing it to explicitly represent objects (constants like

Lulu,Fifi), variables (, ) that range over objects, and predicates (relations that hold between objects, such as for ” is a parent of ” or for ” is the mother of ”). Let’s re-examine a set of statements using the superior expressive power of FOL:

- If

is a parent of , then is older than . - If

is the mother of , then is a parent of . - Lulu is the mother of Fifi.

In FOL, these statements are formalized as:

The key additions here are the quantifiers, such as the universal quantifier

(“for all”). This allows FOL to express general rules that apply to all objects in the domain, a capability entirely absent from PL. Sentence 1, for instance, concisely captures a truth about all parent-child relationships.

The Power of Logical Inference

The true utility of a knowledge-based agent lies not just in its ability to store facts but in its capacity for inference—the ability to derive new knowledge from what is already known. Using the FOL sentences above, an agent can deduce a new fact, O(Lulu, Fifi) (“Lulu is older than Fifi”), which was never explicitly told to it.

This conclusion is reached through a formal, step-by-step deduction process:

- The KB contains the rule

. The agent can apply Universal Instantiation by substituting x=Luluandy=Fifi, creating the specific instance:. - The KB also contains the fact

. By applying the inference rule Modus Ponens to this fact and the instantiated rule from step 1, the agent derives the new fact: . - The KB also contains the rule

. The agent again applies Universal Instantiation with x=Luluandy=Fifito get:. - Finally, the agent applies Modus Ponens to the derived fact

(from step 2) and the instantiated rule from step 3, concluding the new fact: .

This ability to generate new, correct knowledge from existing premises is the foundation of logical reasoning and a cornerstone of intelligent systems.

The Enduring Relevance of Symbolic Reasoning

In the contemporary landscape of AI, often dominated by deep learning and large-scale neural networks, it is reasonable to question the relevance of classical, symbolic AI. However, logical agents are far from a historical artifact. A growing consensus, articulated by leading researchers like Demis Hassabis (DeepMind co-founder and 2024 Nobel laureate), suggests that true, general intelligence will likely require a synthesis of both paradigms: the subsymbolic pattern recognition of deep learning and the structured, symbolic reasoning of classical AI.

Quote

“You can think about deep learning as it currently is today as the equivalent in the brain to our sensory cortices: our visual cortex or auditory cortex.

“But, of course, true intelligence is a lot more than just that, you have to recombine it into higher-level thinking and symbolic reasoning, a lot of the things classical AI tried to deal with in the 80s.”

Demis Hassabis

Hassabis offers a compelling analogy, suggesting that deep learning, in its current form, is analogous to the brain’s sensory cortices (e.g., the visual or auditory cortex). It excels at the “perception layer” of intelligence, processing vast amounts of raw, unstructured data to identify patterns and features. However, intelligence is far more than just perception. It requires higher-level thinking—the ability to abstract these patterns into symbolic concepts, build models of the world, plan, and execute multi-step reasoning. This is precisely the domain of symbolic logic. Logical agents provide the formal framework for this essential higher-level cognition, manipulating the symbolic representations that deep learning systems might help extract from the sensory world.

Fundamentals of Formal Logic

To engineer agents capable of robust logical reasoning, we must first establish the formal structure of the languages they employ.

Definition

Logics are formal languages, meticulously designed for representing information in a way that permits unambiguous conclusions to be drawn through inferential processes.

Any such formal logic, whether it be Propositional or First-Order, is characterized by two essential and distinct components: its syntax and its semantics.

Syntax: The Structure of Sentences

Definition

The syntax of a logic comprises the complete set of rules that meticulously define what constitutes a legally formed sentence.

It is, in effect, the “grammar” of the language, specifying the alphabet of permissible symbols (e.g., constants, variables, connectives, quantifiers) and the formation rules for combining these symbols into legitimate expressions, known as well-formed formulas (WFFs). It is critical to understand that syntax is concerned only with the form and structure of sentences, not with their meaning or their correspondence to any external reality.

Example

For instance, in the familiar language of arithmetic, the string

is a syntactically valid sentence because it adheres to the established formation rules (an expression, followed by a relational operator, followed by another expression). In contrast, the string is syntactically invalid; it is a meaningless jumble of symbols that violates the grammatical rules, even though it uses the same alphabet.

Semantics: The Meaning and Truth of Sentences

Definition

The semantics of a logic, conversely, is what imbues these formal sentences with meaning. It achieves this by defining the truth conditions for every well-formed formula.

Semantics acts as the bridge connecting the abstract symbols and sentences of the language to a conceptual or actual state of affairs. This is formally handled by defining an interpretation or model.

Definition

A model (referred to in the conceptual analogy as a “world”) represents a specific, possible state of affairs.

The semantics of the logic specifies how to evaluate the truth of any given sentence relative to a particular model.

Example

For example, the syntactically valid sentence

does not have a fixed truth value on its own. Its truth is contingent upon the semantic interpretation of the variables and . In a model where the interpretation assigns and , the sentence evaluates to true. However, in a different model where and , the same sentence evaluates to false. Semantics, therefore, provides the formal machinery for determining truth.

A Conceptual Model: Syntaxland vs. Semanticsland

This fundamental distinction between structure and meaning can be visualized.

- Syntaxland is the abstract realm containing all possible strings of symbols that can be generated by the language’s alphabet. The rules of syntax act as a gatekeeper, partitioning this space into two sets: the well-formed formulas (WFFs) and the syntactically invalid strings.

- Semanticsland is a conceptual universe populated by every possible “world,” or model. Each model in this universe represents a complete and distinct description of a possible reality. Semantics is the formal mapping that connects these two domains.

For any given sentence

Propositional Logic (PL)

The simplest and most fundamental member of the classical logic family is Propositional Logic (PL), also known as sentential logic. It provides a formal language for reasoning about propositions. A proposition is formally defined as a declarative statement which must be, by assumption, either true or false. This foundational principle, which admits no third value or ambiguity, is known as the principle of bivalence.

Evaluating Propositional Logic: Pros and Cons

Propositional Logic is a foundational tool, but it’s important to understand its strengths and weaknesses.

Pros Cons Expressive for Logical Combinations: PL allows for the representation of partial, disjunctive, and negated information (e.g., “The key is in room 1 or room 2,” “The agent is not in square [1,1]”), which is difficult to express in many standard data structures or databases. Limited Expressive Power: PL is fundamentally limited because it cannot talk about objects, their properties, or the relations between them in a general way. It treats entire propositions as indivisible atoms. Compositional: The semantics of PL are compositional, meaning the truth of a complex sentence is a direct function of the truth of its sub-parts. This makes reasoning predictable and systematic. Lack of Generalization: It cannot capture general patterns concisely. For example, to state the simple rule “A square in a Wumpus World grid is breezy if an adjacent square contains a pit,” you would have to write a separate, large propositional rule for every single square on the board. This is incredibly cumbersome and fails to represent the underlying general principle. Context-Independent: The meaning of a sentence like is the same regardless of what other sentences exist in the knowledge base. This is a sharp contrast to natural language, where the meaning of “It is big” depends heavily on context.

Syntax of Propositional Logic

The syntax of PL specifies the rules for constructing legally-formed sentences, or well-formed formulas (WFFs). The language is built from two sets of symbols. The first set consists of atomic sentences, which are the most basic building blocks. These are represented by propositional symbols (e.g.,

The second set consists of five logical connectives which are used to recursively build complex sentences from simpler ones. If

| Connective | Symbol | Meaning | Description |

|---|---|---|---|

| Negation | ”not | This sentence is true if | |

| Conjunction | ” | This sentence is true only if both | |

| Disjunction | ” | This sentence is true if at least one of | |

| Implication | ” | This sentence is false only in the specific case where the antecedent ( | |

| Biconditional | ” | This sentence is true only if |

Semantics of Propositional Logic

The semantics of PL define the meaning of its sentences by providing a formal method for determining their truth value. This process relies on two core concepts: models and truth tables. In the context of PL, a model is a specific, complete assignment of a truth value (true or false) to every propositional symbol in the language’s vocabulary.

For example, if a language is defined by the symbols

, one possible model is the assignment .

Because each of the

The meaning of the logical connectives themselves is formally and exhaustively defined by truth tables. These tables specify the truth value of a complex sentence for every possible combination of truth values of its immediate components, thereby serving as the complete semantic definition of the operators.

| 0 | 0 | 1 | 0 | 0 | 1 | 1 |

| 0 | 1 | 1 | 0 | 1 | 1 | 0 |

| 1 | 0 | 0 | 0 | 1 | 0 | 0 |

| 1 | 1 | 0 | 1 | 1 | 1 | 1 |

The evaluation of any complex sentence, given a model, is a recursive, bottom-up process. The truth value of the full sentence is determined by first finding the values of its atomic propositions (as specified by the model) and then progressively applying the truth-table rules for the connectives until the entire expression is resolved to a single truth value.

For instance, given the model

, the sentence is evaluated by first resolving to , which is . Simultaneously, becomes , which is . The full sentence thus simplifies to , which evaluates to .

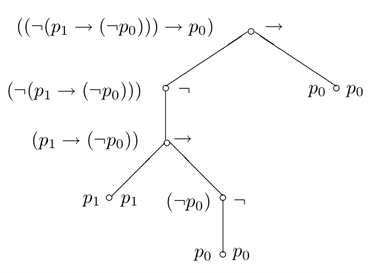

Structural Representation: Parse Trees

Logical sentences are not merely flat strings of text; they possess a definite hierarchical internal structure. This structure is best represented by a parse tree. In this representation, the leaves of the tree are the atomic proposition symbols, while the internal nodes are the logical connectives. The root of the tree corresponds to the main connective of the entire sentence.

Example

For example, the sentence

would be represented as a tree where the root is the main implication . Its right child would be the leaf , and its left child would be the root of the sub-tree for .

This tree structure is the standard computational representation for a formula, as it unambiguously defines the order of operations and is typically implemented as an object with properties like

operatorandarguments.

First-Order Logic (FOL)

The expressive constraints of Propositional Logic (PL), specifically its fundamental inability to generalize statements or refer to individual objects and their properties, render it insufficient for representing complex knowledge in many real-world domains. To surmount these limitations, we advance to First-Order Logic (FOL), also known as First-Order Predicate Calculus. This transition represents a significant shift in ontological commitment—that is, the set of assumptions the logic makes about the nature of reality.

Whereas PL assumes a “flat” world composed solely of atomic facts (propositions), FOL provides a much richer, structured, and relational view. The ontology of FOL assumes the world is populated by three core types of entities.

- Objects: Individual, distinguishable entities, such as people, houses, and colors, or abstract concepts like numbers, dates, and wars.

- Relations: Properties of objects (unary relations like

RedorRound) or the relationships that hold between a set of objects (binary relations likeBrotherOforBiggerThan). A relation can be formally understood as the set of tuples of objects for which that relation is true. - Functions: A specialized kind of relation that uniquely maps one or more objects to a single target object (e.g.,

FatherOforPlus).

This structured ontology allows FOL to represent knowledge with a naturalness and power that PL cannot achieve.

The Building Blocks of First-Order Logic

As with any formal language, FOL is defined by its syntax and semantics. The syntax dictates the rules for constructing valid sentences from a basic vocabulary of symbols. This vocabulary begins with symbols to represent the objects in our ontology. Constant Symbols are used to name specific, individual objects (e.g., KingJohn, Richard, POLIMI, 2). To refer to unspecified or general objects, we use Variables (e.g.,

The relations and functions are named by Predicate Symbols and Function Symbols, respectively. Each of these symbols has a specific arity, which denotes the number of objects (or arguments) it accepts.

For instance,

Personis a unary predicate (arity 1), whileBrotheris a binary predicate (arity 2). Similarly,Sqrtis a unary function (arity 1), mapping one object (a number) to another.

To construct complex statements, the vocabulary includes the five familiar logical connectives from PL: negation (

Syntax of Terms and Sentences

The syntactic rules of FOL use this vocabulary to build two primary types of expressions: terms and sentences.

Definition

A Term is a logical expression that refers to an object.

A term can be a simple Constant Symbol (e.g.,

Definition

A Sentence, by contrast, is an expression that asserts a claim about the world and can be evaluated as true or false.

The most basic form is the Atomic Sentence. An atomic sentence is formed in one of two ways: either by applying a Predicate Symbol to one or more terms (e.g.,

From these atomic building blocks, Complex Sentences are formed recursively by combining atomic sentences using the logical connectives, just as in Propositional Logic.

Example

For example, the sentence

is a complex sentence asserting that if the first relation holds, then the second one must also hold. This ability to construct detailed, structured statements about the properties of objects and the relationships between them is what gives First-Order Logic its significant expressive advantage.

Connecting Language to Worlds

The semantics of First-Order Logic (FOL) provide the formal mechanism for ascribing meaning to its sentences, defining the precise conditions under which a sentence is considered true or false. A key concept in FOL semantics is that a sentence, as a purely syntactic construct, possesses no inherent truth value. Its truth is established only relative to a specific model. A model serves as a formal, mathematical abstraction of a “world” or a particular state of affairs, providing the concrete context needed for semantic evaluation.

Models in First-Order Logic

A model

- The Domain (also called the Universe), denoted

, is a non-empty set containing all the individual objects that are presumed to exist in this specific world. These objects can be concrete entities (like people or houses) or abstract concepts (like numbers or colors). - The second component, the Interpretation

, is a mapping that connects the vocabulary of the formal language (its constant, predicate, and function symbols) to the actual elements within the model’s domain. The interpretation must be defined for every symbol in the language: - For each constant symbol, the interpretation

assigns one specific object from the domain . - For each predicate symbol of arity

, assigns a specific -ary relation on . This relation is formally a set of -tuples of objects from the domain for which the relation holds true. - For each function symbol of arity

, assigns a specific -ary function that maps objects from the domain to a single unique object within that same domain (i.e., ).

- For each constant symbol, the interpretation

Once a model is fully specified, the truth of an atomic sentence can be determined. An atomic sentence, such as

Example: A Model for the World of Richard and John

To illustrate these concepts, let us construct a specific model,

, for a language containing the constant symbols and ; the predicate symbols , , and ; and the function symbol . This model

might be defined with a Domain , a set of abstract objects representing two people, their corresponding legs, and perhaps other items like a crown. The Interpretation

then grounds the language’s symbols within this domain:

This model, visually represented in the provided diagram, establishes a simple world. In this world, there are two objects,

R(🦁) andJ(👑), both of whom are in therelation. Only Jis in therelation. The relation is defined as a mutual relationship holding between them. The function maps each person object to a corresponding leg object. We can now use this model to evaluate the truth of a complex sentence, such as

. The evaluation proceeds by first assessing the antecedent, . The interpretation maps to and to . We then check if the tuple is a member of the relation . According to our model, it is, so the antecedent is true. Next, we evaluate the consequent, . The interpretation maps to and to . We check if the tuple is in the relation. It is, so the consequent is also true. Finally, the entire implication is evaluated based on the truth values of its components: , which is itself true. Therefore, this particular sentence is true in this model. This dependence on the model is the core of FOL semantics. If we were to define a different model,

, where the relation was not mutual (e.g., ), the antecedent would be true, the consequent false, and the entire implication would evaluate to false.

Quantifiers: Expressing Generality in FOL

The true expressive power of First-Order Logic, which elevates it significantly above Propositional Logic, stems from its ability to make general, sweeping statements about collections of objects. This capability is realized through the use of quantifiers. The two fundamental quantifiers are the universal quantifier (

Universal Quantification (

Definition

The universal quantifier, denoted by

(an inverted ‘A’ to signify “All”), is employed to assert that a particular sentence or property holds true for every object within the model’s domain. Its syntax is

Semantically, a sentence

is true in a given model if and only if the sentence evaluates to true for every possible object in the domain that the variable can be bound to.

Consider the statement, “Everyone at POLIMI is smart.” To express this in FOL, we would use predicates such as

This sentence is read, “For every object

A frequent and critical error in logical formulation is to use conjunction (

Existential Quantification (

Definition

The existential quantifier, denoted by

, is used to assert that a statement is true for at least one object within the domain. Its syntax is

Semantically, the sentence

is true in a model if and only if there exists at least one object in the domain for which evaluates to true.

To formalize the statement, “Someone at POLIMI is smart,” we would write:

This reads, “There exists at least one object

The corresponding common mistake with

Properties of Quantifiers

Quantifiers exhibit several important properties that govern their manipulation.

When quantifiers of the same type are consecutive, their order is immaterial;

is logically equivalent to , and is equivalent to .

However, the order of mixed quantifiers is critical and fundamentally changes the meaning of a sentence.

Example

Consider the predicate

for ” loves .” The sentence asserts “There exists a single person who loves everyone.” In contrast, asserts “Everyone is loved by at least one person.” The first implies a single for all ; the second allows for a different for each .

Furthermore, quantifiers exhibit a duality relationship, analogous to De Morgan’s laws for

Equality

The equality symbol (

Equality is indispensable for defining concepts that require distinguishing between individuals.

Example

For example, the

relationship can be precisely defined by stating that two individuals, and , are siblings if and only if they share the same parents but are not the same person. This is captured formally as: This complex sentence effectively uses equality to enforce that

and are distinct individuals and that their mother and father are also distinct from each other, while sharing the same parent-child relationship with both and .

Logical Inference and Entailment

Possessing a formal language for knowledge representation, such as Propositional or First-Order Logic, is only the first step. To create an intelligent agent, we require a mechanism for reasoning with this knowledge. The central concept that formalizes the intuitive idea of drawing a valid conclusion is entailment.

Definition

Entailment is a semantic relationship, defined as follows: a sentence

is entailed by a sentence , written , if and only if is true in every model (or “possible world”) in which is true. In simpler terms, follows logically from ; ‘s truth guarantees ‘s truth.

This concept extends directly to a Knowledge Base (KB), which is a set of sentences. For the purpose of semantics, a KB can be treated as a single, large sentence formed by the conjunction (the ANDing) of all its individual sentences. Therefore, the expression

Inference Procedures: From Semantics to Syntax

A logical agent cannot practically check all possible worlds to determine entailment. It requires a concrete, finite, and computational procedure to determine what its KB entails. This procedure is known as an inference procedure or proof procedure.

Definition

An inference procedure is a syntactic algorithm that can derive new sentences from an existing set of sentences based on formal rules. If an algorithm

can derive a sentence from a knowledge base , we write this using the “turnstile” symbol: , which is read as “KB derives using procedure .”

The goal is for this syntactic derivation (

The classic Modus Ponens rule, which states that from

The ultimate goal of logical AI is thus to develop inference procedures that are both sound and complete, while also being computationally efficient.

Entailment, Validity, and Satisfiability

The core concept of entailment is deeply interconnected with two other fundamental properties of logical sentences: validity and satisfiability. These connections provide the theoretical foundation for creating practical inference algorithms.

Definition

A sentence is valid, also known as a tautology, if it is true in all possible models. Examples include

trueitself,, or the logical structure of Modus Ponens, .

The Deduction Theorem establishes a direct link between entailment and validity:

holds if and only if the single sentence is valid.

This theorem suggests that we can prove an entailment by proving the validity of a corresponding implication.

On the other hand,

Definition

a sentence is satisfiable if it is true in at least one model.

Most sentences we encounter, such as

A sentence is unsatisfiable if it is false in all possible models, with

being the canonical example.

This concept leads to the single most important principle for automated reasoning: the Refutation Theorem (or Contradiction Theorem). This theorem states that

This Refutation Theorem is profoundly significant because it provides the operational basis for proof by contradiction. Instead of the difficult task of proving that

Building a Knowledge Base

Having a powerful language like First-Order Logic is only the first step. The process of building a knowledge-based agent involves translating real-world, often informal, knowledge into the precise syntax of a formal logic. This disciplined process is known as Knowledge Engineering.

Knowledge engineering is an iterative methodology for eliciting, structuring, and representing knowledge in a knowledge base. The goal is to create a KB that is a correct, useful, and efficient model of the problem domain.

The process can be broken down into seven key steps:

- Identify the Task: Before writing any logic, the engineer must deeply understand the problem. What is the agent supposed to do? What are its inputs (percepts, queries) and desired outputs (actions, answers)? Defining the scope and objectives is a critical first step that guides all subsequent decisions.

- Assemble the Relevant Knowledge: This is the knowledge acquisition phase. The engineer gathers all relevant information about the domain. This might involve interviewing human experts, reading manuals and textbooks, observing real-world phenomena, or analyzing data. The goal is to collect the raw material—facts, rules, relationships, and processes—that will eventually populate the KB.

- Decide on a Vocabulary:

This is a crucial modeling step. The engineer must choose the fundamental building blocks of the representation: the predicates, functions, and constants.

- Constants: What are the important individual objects in the domain? (e.g.,

, , ). - Predicates: What are the key properties of objects and the relationships between them? (e.g.,

, ). - Functions: Are there relationships where one object corresponds to exactly one other object? (e.g.,

).

- Constants: What are the important individual objects in the domain? (e.g.,

- Encode General Knowledge about the Domain:

Here, the engineer writes the general rules that govern the domain. These are the axioms that are always true. This step involves creating the universally quantified sentences (the

rules) that capture the underlying principles of the world model (e.g., ). - Encode a Description of the Specific Problem Instance:

With the general rules in place, the engineer adds the specific facts that describe the current situation or problem. This involves writing the ground atomic sentences (the facts) that are true for this particular instance (e.g.,

). - Pose Queries to the Inference Procedure:

Once the KB is populated, it can be used for reasoning. The agent (or engineer) can

ASKquestions of the KB. The inference procedure (e.g., a theorem prover) will use the facts and rules to derive answers. This step tests whether the KB can produce the desired outputs. - Debug the Knowledge Base: Knowledge bases are rarely perfect on the first try. Debugging involves identifying and fixing errors. An error might be a logical contradiction, a rule that is too strong or too weak, or a missing piece of knowledge. This is often done by posing queries and examining the results. If a query returns an unexpected answer (or no answer), the engineer traces the inference process back through the rules and facts to find the source of the error. This is an iterative process of refinement.

An Exercise in Knowledge Representation

We can now apply the formal principles of knowledge engineering to a concrete problem, illustrating the process of translating natural language into both First-Order Logic and Propositional Logic.

Part 1: The Problem Statement

Our task is to build a formal knowledge base from the following natural language statements:

- A1. Those who like opera, like both music and theatre.

- A2. Alice likes music, but she does not like theatre.

- A3. Bob likes exactly the same things that Alice likes.

- A4. Bob does not like opera.

Part 2: Representation in First-Order Logic (FOL)

The knowledge engineering process begins by defining the vocabulary (the ontology) of our world. For this problem, we can identify a set of specific constants:

(for Alice), (for Bob), (for Music), (for Opera), and (for Theatre). We also need to represent the relationship between these objects, for which we define a single binary predicate: , which we interpret as “person likes thing .” With this vocabulary, we can encode each statement. The first statement, A1, is a general rule. The phrase “Those who” implies a universal statement over all people, so we introduce a variable

and the universal quantifier . The structure is a material implication ( ), asserting that if a person likes opera, then that same person must like music and theatre. This is formalized as:

The second statement, A2, is a set of specific facts about the constant

. It is a straightforward conjunction ( ) of a positive atomic sentence and a negated atomic sentence:

The third statement, A3, expresses a general rule of equivalence between Bob and Alice. The phrase “for all things” necessitates universal quantification over the domain of things, so we introduce a variable

. The assertion that Bob likes exactly the same things as Alice is stronger than a simple implication; it requires that for any given thing , Bob likes it if and only if Alice likes it. This is captured by the biconditional ( ). The resulting FOL sentence is:

Finally, statement A4 is a simple, specific fact about the constant

. It is represented as a negated ground atomic sentence:

Part 3: Translation to Propositional Logic (PL)

The translation from the expressive, quantified sentences of FOL to the simpler, ground sentences of PL is a process known as propositionalization. This translation is algorithmically possible only under the critical assumption that the domain of discourse is finite and known. In this problem, this condition is met: the domain of people is

, and the domain of things to be liked is . The process involves two steps. First is instantiation, where variables in quantified sentences are replaced by the constants from their respective domains. A universally quantified sentence (

) becomes a large conjunction of the ground instances ( ). An existentially quantified sentence ( ) would similarly become a large disjunction. Second, each unique ground atomic sentence (e.g., ) is mapped to a unique propositional symbol (e.g., ). Applying this to our FOL knowledge base, we begin with A1:

. Here, the variable ranges over the domain of people, . We thus instantiate the rule for and and conjoin the results. This yields . Mapping these ground atoms to propositional symbols gives the final PL sentence:

Next, we translate A3:

. In this case, the variable ranges over the domain of things, . The universal quantifier must therefore be unrolled into a conjunction of three instantiations, one for each thing. This gives . Mapping this to PL symbols results in:

The remaining sentences, A2 and A4, are already ground (they contain no variables), so they require only the second step of symbol mapping.

becomes , and becomes . This exercise highlights the significant trade-offs between these logics. First-Order Logic provides a powerful, concise, and general representation that captures the underlying abstract structure of the rules. Propositional Logic, while computationally simpler in some respects, requires enumerating every possible instance. This leads to a much larger, more verbose knowledge base that obscures the general principles (like A1 and A3) by burying them in a sea of specific, ground propositions.