️️️The Turing Machine is an historical model of a “computer”, simple and conceptually important under many aspects. We consider it as an automaton; then we will derive from it some important, universal properties of automatic computation. For now we consider the

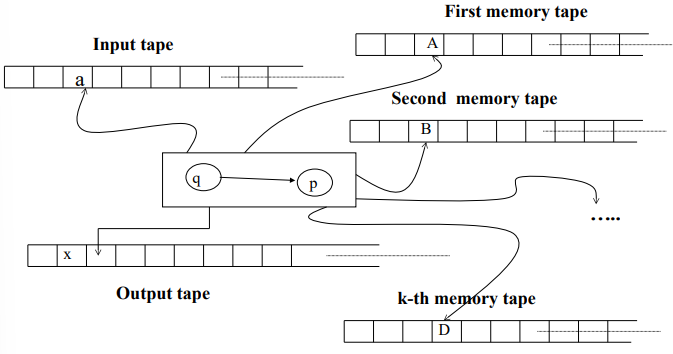

k-tape Turing Machine

The

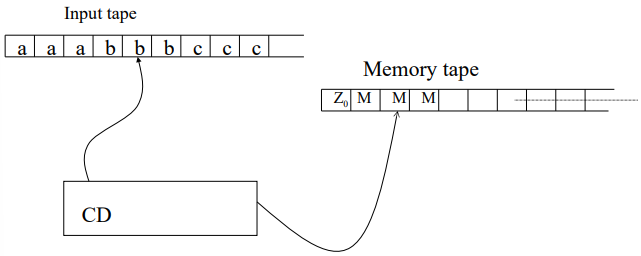

Historically, Turing Machines have been depicted with infinite sequences of cells labeled as

Each of the

Furthermore, the

The Movements of the Turing Machine

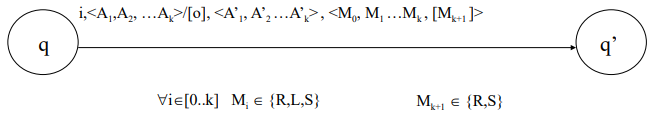

The movement of a Turing Machine, specifically in the context of a

- State Transition: The control device transitions from one state

to another state . This represents the internal computation logic that determines the next step based on the machine’s current configuration. - Writing on Memory Tapes: For each memory tape

(where ), the machine writes a new symbol in place of the symbol that was just read. This action updates the memory content of the machine. - Writing on the Output Tape: If required, the machine can also write a symbol on the output tape. This symbol is typically used to store results or intermediate outputs of the computation.

- Moving the Heads: The machine moves its reading and writing heads across the tapes. Specifically:

- The memory tape heads (one for each of the

tapes) and the input tape head can move one position to the right (denoted as ), one position to the left (denoted as ), or stay in the current position (denoted as ). - The output tape head, however, can only move right or stay in place, as output typically follows a sequential pattern.

- The memory tape heads (one for each of the

Thus, the machine’s configuration evolves through a combination of state transitions, writing symbols, and head movements. This can be formalized using a transition function,

Definition

The formal structure of this transition is:

In this expression:

represents the set of states of the control device. denotes the input alphabet. is the memory alphabet (symbols written on the memory tapes). is the output alphabet (symbols written on the output tape). The function

describes the machine’s transition by determining the new state, the symbols to be written on the memory tapes, and the directions in which the heads will move.

When the Turing Machine begins its computation, the system is initialized as follows:

- The memory tapes contain a special starting symbol

followed by an infinite sequence of blank symbols. - The output tape is initially blank.

- The heads of all tapes (input, memory, and output) are positioned at the 0th cell.

- The control device is set to its initial state

. - The input string

is placed on the input tape starting from the 0th position, followed by blanks.

The machine halts when it reaches an accepting state, denoted by

Formalization of Transitions (for k = 1)

To simplify, consider a 1-tape Turing Machine.

Definition

The transition between configurations is formalized as:

In this notation:

are the current and next states. is the current input symbol being read. represent the portions of the input tape to the left and right of the read symbol. represent the portions of the memory tape to the left and right of the current symbol. is the symbol currently being read on the memory tape. The transition function

for this 1-tape machine takes the current state , the input symbol , and the memory symbol and produces a new state , a new memory symbol , and movement instructions and for the input and memory heads, respectively.

- If

, the input head stays in place, and and remain unchanged. - If

, the input head moves right, appending to , and if is empty, it is replaced with a blank symbol. - If

, the input head moves left, and the tape contents are adjusted accordingly. The movement

for the memory head follows similar rules, adjusting the position of the head on the memory tape.

- If

, the memory symbol remains unchanged, and the tape contents are updated accordingly. - If

, the memory symbol is replaced by , and if the right portion of the tape is empty, it is filled with a blank symbol. - If

, the memory symbol is replaced by , and the tape contents are adjusted accordingly.

Language of a Turing Machine

In formal language theory, a Turing Machine defines a language by determining whether a string is accepted by the machine. A string

Given the initial configuration:

where:

is the initial state, is the input string, and is the special symbol on the memory tape that marks the beginning of the computation

The machine processes the input, transitioning through a series of configurations until it reaches the final configuration

where:

is an accepting state from the set of final states , represents the processed input on the input tape, represents the contents of the memory tape at the time the machine halts.

The symbol

Thus, the language of the Turing Machine

A string

- Halt in a Non-Accepting State: The TM halts in a state

, meaning the machine has reached a state that is not part of the set of final accepting states. - Non-Halting (Infinite Loop): The TM never halts, which is an important case in the theory of computation. If the machine continues indefinitely without reaching a halting configuration, the input is not accepted. This case highlights one of the significant challenges in computation—deciding whether a machine will halt on a given input.

Similarity to Pushdown Automaton

The Turing Machine shares similarities with the Pushdown Automaton (PDA), specifically in the case where the PDA may not accept an input due to a “non-stopping run,” meaning the PDA does not halt. However, a critical question in theoretical computer science is whether there exists a loop-free Turing Machine, a machine that always halts regardless of the input. This leads into deeper exploration of decidability and the halting problem, which proves that no general algorithm can determine whether a Turing Machine halts for every possible input.

Example: Turing Machine for

Consider a Turing Machine that accepts the language

, where the machine must process an equal number of as,bs, andcs in that order. The machine operates as follows:

- It starts in an initial state

and scans the input tape for an a.- Once it finds an

a, it marks it and moves to state, where it scans for a corresponding b.- After finding a

b, it marks it and moves to state, where it scans for a corresponding c.- After processing a

c, it moves to state, checks for more input, and repeats the process if necessary. - Finally, when all

as,bs, andcs are processed, the machine moves to the accepting state.

The machine’s behavior can be visualized with the following state transitions:

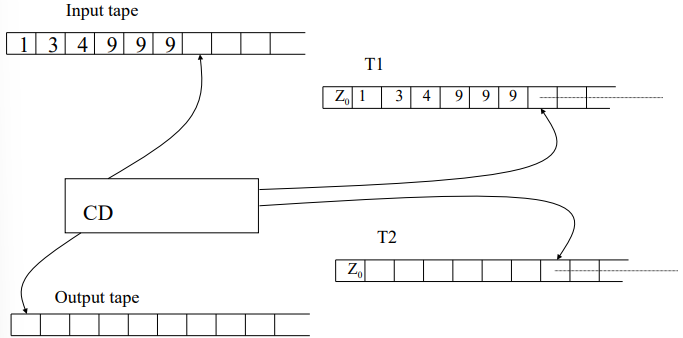

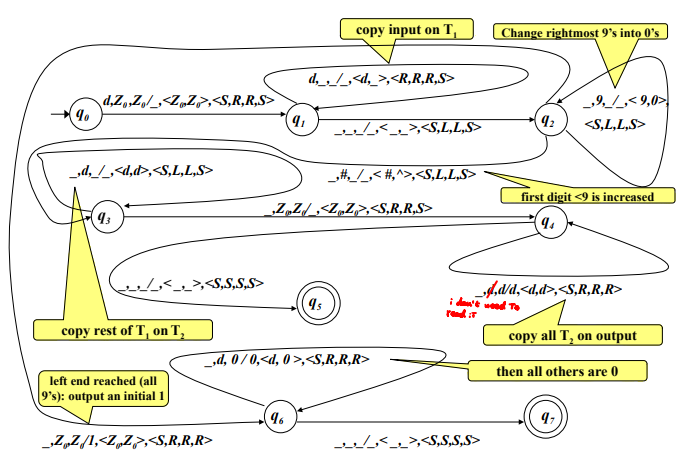

Example: Computing the Successor of a Number

Another example of a Turing Machine’s computational power is a machine that computes the successor of a number

, encoded in decimal digits. The machine uses two memory tapes ( and ) to perform the computation:

- The machine copies the digits of

from the input onto tape , to the right of the special symbol . Simultaneously, the head on tape moves to the corresponding position. - The machine then scans the digits on

from right to left, changing the digits as needed. For example, digits equal to 9 are turned into 0, and the first digit not equal to 9 is incremented by 1. All digits to the left of this first non-9 digit remain unchanged. - The updated number is copied from

to the output tape.

The key symbols used in this process are:

Symbol Meaning d Any decimal digit _ Blank # Any digit not equal to 9 ^ Successor of a digit denoted as # (in the same transition)

Closure Properties of Turing Machines

The closure properties of Turing Machines are an important aspect of understanding the computational power of these abstract machines. These properties describe the behavior of Turing Machines under various operations on languages, such as intersection, union, and concatenation.

For intersection, denoted by

This means that, given two Turing Machines

and , there exists another Turing Machine that accepts the language that is the intersection of the languages accepted by and .

Intuitively, a Turing Machine can simulate two other TMs either “in series” or “in parallel,” depending on the specific construction of the simulation. For example, a Turing Machine could interleave the steps of

Similarly, Turing Machines are closed under union (

Complementation of Turing Machines

However, when it comes to complementation, the situation is more complex. The complement of a language

If there existed a class of loop-free Turing Machines, i.e., machines that always halt regardless of the input, complementation would be straightforward. In this case, one could simply define the set of halting states and partition them into accepting and non-accepting states. By ensuring that halting states are disjoint from non-halting states, it would be possible to construct a Turing Machine that complements any language. However, since Turing Machines may enter non-terminating computations (i.e., infinite loops), it is impossible to define a general method to complement a language without addressing the issue of non-halting computations. This highlights one of the central limitations of Turing Machines.

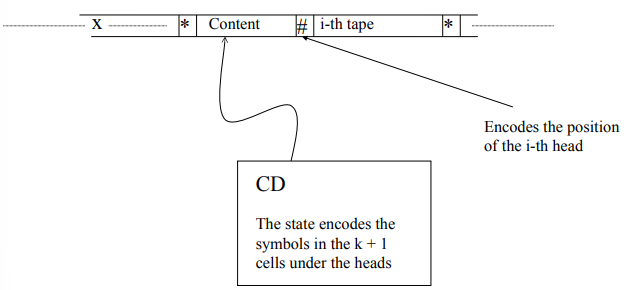

Universal Turing Machine

A Universal Turing Machine (UTM) is a special type of Turing Machine that can simulate any other Turing Machine. A key concept in the theory of computation, the UTM essentially embodies the idea of a general-purpose computer capable of running any algorithm provided as input. In contrast to specific Turing Machines designed to solve particular problems, the Universal Turing Machine takes as input a description of another Turing Machine

The UTM can be described as a single-tape Turing Machine, where the single tape serves multiple roles:

- Input: The machine receives the encoding of both the target machine

and the input to simulate. - Memory: The same tape is used to store intermediate results and configurations during the computation.

- Output: Once the computation is complete, the result of the simulation is also written on the same tape.

It is important to note that a single-tape Turing Machine is distinct from a Turing Machine with only one memory tape.

In the single-tape UTM, the input, memory, and output all occupy the same physical tape, while a standard multi-tape TM may have separate tapes for input, memory, and output.

Despite this distinction, all versions of Turing Machines are equivalent in terms of their computational power. In other words, regardless of how many tapes a Turing Machine uses, it can solve the same class of problems.

Turing Machines and Von Neumann Architecture

One of the fascinating aspects of the Universal Turing Machine is its relation to more “realistic” computing models, such as the Von Neumann architecture, which is the foundation for most modern computers. While the Turing Machine is an abstract model of computation, the Von Neumann machine is a concrete model of actual physical computers. Despite these differences, a Turing Machine can simulate a Von Neumann machine.

The primary distinction between the two models lies in the way they access memory:

- A Turing Machine accesses memory sequentially, moving its head one cell at a time.

- A Von Neumann machine, on the other hand, accesses memory in a random-access manner, allowing it to directly retrieve any memory location without sequential traversal.

This difference in memory access does not affect the computational power of the two models; both are capable of solving the same class of problems (i.e., both are Turing complete). However, it does influence the efficiency of computation. In particular, the time and space complexity of certain algorithms may differ significantly between a Turing Machine and a Von Neumann machine due to the different memory access methods. While Turing Machines are theoretically powerful, the sequential nature of memory access can lead to inefficiencies, especially for problems that benefit from random-access memory.

In the context of complexity theory, this distinction between memory access methods becomes important, as it affects the amount of time and memory required to solve a given problem. Therefore, while Turing Machines provide a fundamental model for understanding computation, the practical implications of computational complexity must consider factors such as memory access patterns and machine architecture.