Explainable AI (XAI)

Definition

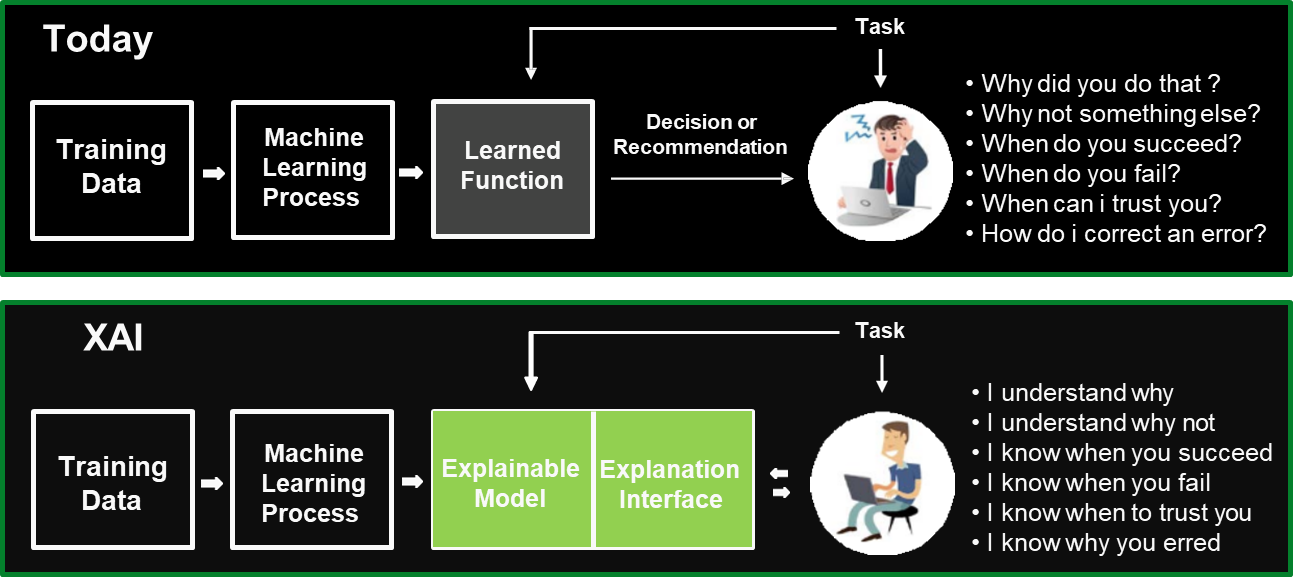

Explainable AI (XAI) is a field of research focused on developing techniques that enhance the interpretability of machine learning (ML) models. The goal of XAI is to provide insights into how AI systems make decisions, allowing humans to understand, trust, and validate model predictions.

Unlike traditional AI models, which often operate as “black boxes” where decision-making processes are opaque, XAI aims to offer transparency by explaining the reasoning behind predictions in human-understandable terms.

The core objectives of XAI include:

- Interpreting model predictions to understand why a specific decision was made.

- Providing human-comprehensible explanations that allow users to trust AI systems.

- Identifying biases and vulnerabilities to ensure fairness and accountability in AI-driven decisions.

By improving interpretability, XAI makes AI models more transparent, thereby increasing their trustworthiness and facilitating their adoption in critical applications such as healthcare, finance, and legal decision-making.

As machine learning models become more complex to achieve higher accuracy, they are increasingly treated as black-box systems whose internal workings remain largely unknown. This lack of transparency raises several concerns, particularly in domains where AI decisions impact human lives.

One of the main issues with black-box AI models is the presence of biases. If an AI system makes decisions that systematically favor or disadvantage certain groups, it can result in unethical and unfair outcomes. Ensuring accountability in AI-driven decision-making is crucial, especially when the system is used for applications that involve high-stakes decisions, such as criminal justice, hiring processes, or credit approval.
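One simple way to check for the kind of systematic favoring described above is to compare the rate of positive decisions across groups. The sketch below is illustrative only: the function names, the group labels, and the toy decisions are assumptions, and the gap it computes (often called the demographic parity difference) is just one of several fairness measures.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Return the fraction of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())

# Toy data (hypothetical): 1 = approved, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates, gap)
```

A gap near zero means both groups are approved at similar rates. A large gap does not prove discrimination on its own, but it flags a model for closer inspection.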

Moreover, explainability helps detect model vulnerabilities, enabling researchers and practitioners to identify and mitigate errors so that AI models operate reliably and fairly. By integrating explainability into AI development pipelines, organizations can debug, validate, and improve models while maintaining compliance with regulatory frameworks such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA).

Post-hoc Methods for AI Interpretability

Post-hoc interpretability techniques provide explanations after a model has been trained, offering insights into its decision-making process without altering its structure. These methods are particularly useful for models with nonlinear relationships or high data complexity, such as deep neural networks.

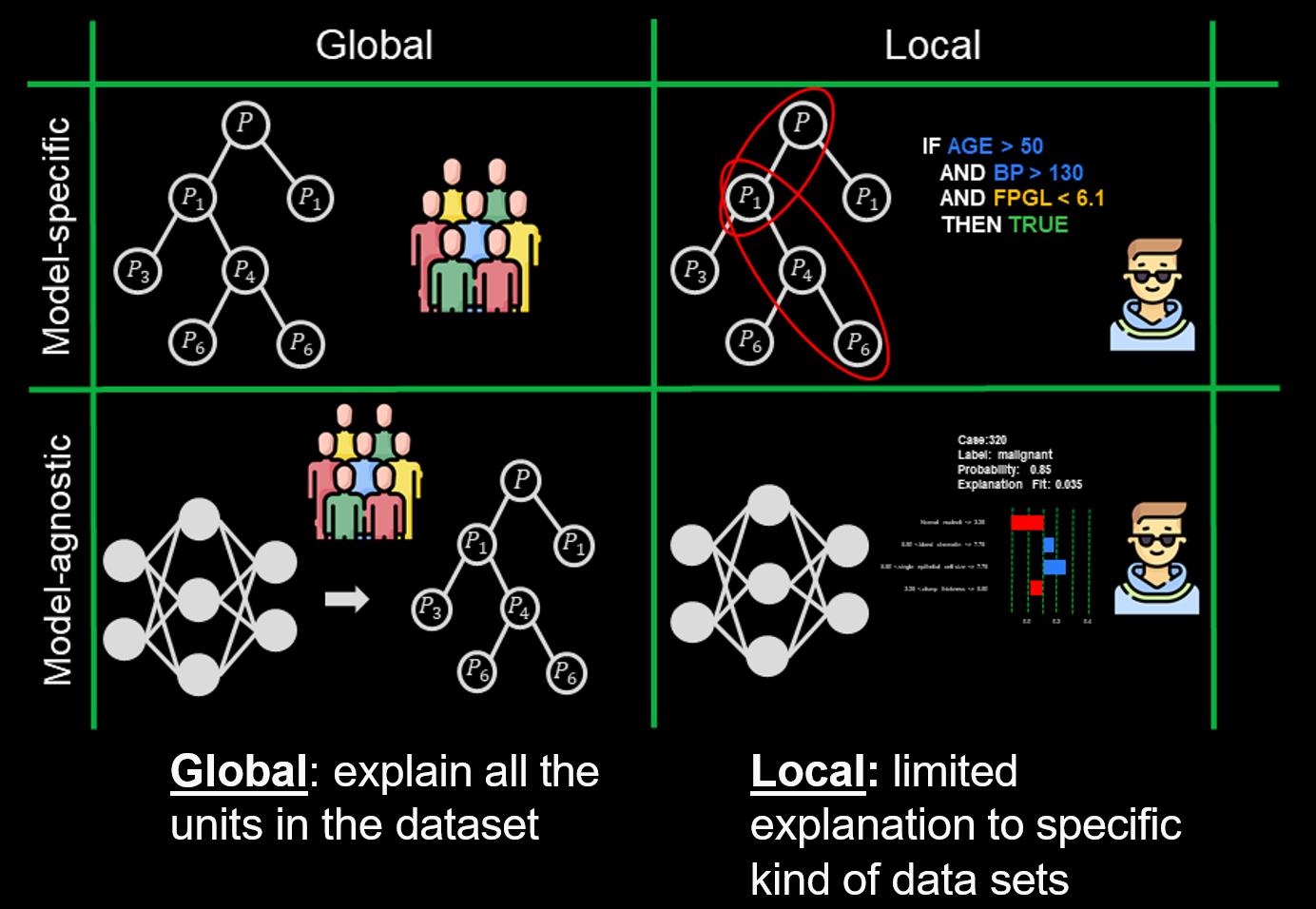

There are two major approaches to post-hoc explainability:

- Model-specific methods: These techniques work within the constraints of a particular learning algorithm and model structure, ensuring explanations align with the internal workings of the model.

- Model-agnostic methods: These approaches apply general analysis to compare model inputs with predictions, making them applicable to a wide range of AI models.

Several post-hoc methods are widely used to improve AI interpretability. These techniques help provide insights into how a model arrives at its predictions, making AI systems more transparent and accountable.

- Feature Importance Analysis: This technique assigns an importance value to each input feature based on its contribution to the model’s prediction. By evaluating the influence of different variables, researchers can identify which features are most critical in determining an outcome.

- Surrogate Models: A surrogate model is a simpler, interpretable model (such as a decision tree) that approximates the behavior of a complex black-box model. By analyzing the surrogate model, users can gain insights into the broader decision-making patterns of the original AI system.

- Rule-based Explanations: These explanations express decision-making logic using if-then rules that describe how specific input conditions lead to particular outputs. This method is particularly useful for decision trees and rule-based classifiers, offering a transparent way to understand model behavior.

- Saliency Maps: Saliency maps highlight the most important input features (such as pixels in an image or words in a text) that influence a model’s prediction. These maps are commonly used in computer vision to show which parts of an image an AI model considers when making a classification.
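As an illustration of feature importance analysis, the sketch below uses permutation importance, one common model-agnostic variant: shuffle one feature at a time and measure how much the prediction error grows. The `black_box_predict` function is a hypothetical stand-in for a trained model, and the data is synthetic; neither comes from a specific library.

```python
import random

def black_box_predict(row):
    # Hypothetical stand-in for a trained model: depends strongly on
    # feature 0, weakly on feature 2, and not at all on feature 1.
    return 3.0 * row[0] + 0.5 * row[2]

def mean_squared_error(predict, rows, targets):
    return sum((predict(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(predict, rows, targets, n_features, seed=0):
    """Increase in error when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = mean_squared_error(predict, rows, targets)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the target
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mean_squared_error(predict, shuffled, targets) - baseline)
    return importances

# Synthetic data: 200 rows, 3 features, targets produced by the model itself.
data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
targets = [black_box_predict(r) for r in rows]

importances = permutation_importance(black_box_predict, rows, targets, n_features=3)
print(importances)
```

Because the stand-in model ignores feature 1, shuffling it leaves the error unchanged, while shuffling feature 0 increases the error the most. The same procedure works for any model that exposes a prediction function.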

The Benefits of Explainable AI

Incorporating XAI techniques into the AI development pipeline brings several advantages:

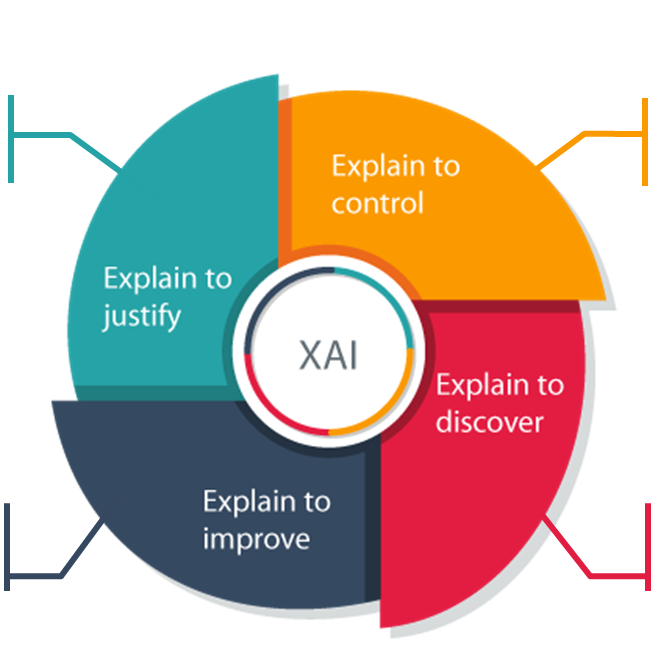

- Justification of System Behavior: By explaining AI decisions, XAI helps users detect hidden biases, vulnerabilities, and flaws in models. This is particularly valuable in domains where ethical and regulatory concerns are paramount.

- Performance Improvement: Explainability facilitates debugging and verification, allowing developers to refine AI models systematically and adopt structured engineering approaches.

- Increased Control and Compliance: Organizations can supervise AI models, ensuring they comply with regulations such as GDPR while making their usage more transparent.

- Validation of AI Reasoning: XAI enables researchers to compare an AI system’s decision-making process against human expectations, improving the reliability and credibility of the model.

Responsible AI

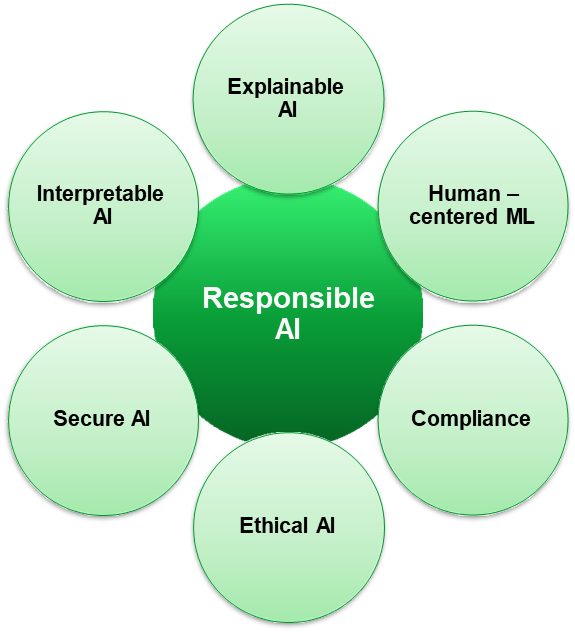

Explainable AI is an essential component of Responsible AI, which aims to ensure that AI systems operate fairly, securely, and ethically. Responsible AI encompasses multiple sub-disciplines, each addressing different aspects of trust and accountability in machine learning models.

- Explainable AI (XAI): Focuses on making AI decisions understandable and interpretable.

- Interpretable AI: Ensures that AI models remain transparent and human-comprehensible.

- Secure AI: Protects AI systems from cyberattacks and adversarial manipulations that could alter predictions.

- Ethical AI: Prevents bias and discrimination in AI models to ensure fairness across diverse user groups.

- Human-centered AI: Encourages human-AI collaboration rather than full automation, ensuring that AI assists decision-making rather than replacing human judgment.

- Compliance-Aware AI: Ensures that AI systems adhere to legal and regulatory standards, such as GDPR and CCPA, safeguarding user privacy and data protection.

Generative AI

Definition

Generative Artificial Intelligence (AI) is a specialized branch of AI focused on developing models that can generate new data samples resembling those found in a given training dataset.

Unlike traditional discriminative models that categorize or classify data, generative models learn the underlying structure and distribution of the data, enabling them to create entirely new instances. These models have demonstrated remarkable capabilities in producing realistic images, text, audio, and even videos, mimicking human creativity by extrapolating patterns from training examples.

The core idea behind generative AI is to model the probability distribution of a dataset in such a way that it can generate new samples that follow the same statistical properties as the original data.
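The simplest possible instance of this idea is a one-dimensional Gaussian: estimate its mean and standard deviation from training data, then draw new values from the fitted distribution. The sketch below is a deliberately tiny illustration with made-up training values; real generative models learn far richer distributions, but the fit-then-sample pattern is the same.

```python
import random
import statistics

def fit_gaussian(samples):
    """Estimate the parameters of a Gaussian from training data."""
    return statistics.mean(samples), statistics.stdev(samples)

def generate(mean, std, n, seed=0):
    """Draw n new samples from the fitted distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]

# Hypothetical training data, e.g. measurements clustered around 5.0.
training_data = [4.8, 5.1, 5.0, 4.9, 5.3, 5.2, 4.7, 5.0]

mean, std = fit_gaussian(training_data)
new_samples = generate(mean, std, n=1000)
print(round(mean, 3), round(std, 3), new_samples[:3])
```

The generated values are not copies of the training data, yet their mean and spread match it closely, which is what "following the same statistical properties" means in practice.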

This capability has led to a wide range of applications, from artistic content creation to scientific advancements in areas such as drug discovery and medical imaging.

Ethics and Social Implications

- Misinformation and Fake Content: Generative AI can create realistic synthetic content, raising concerns about misinformation, deepfakes, and media manipulation. Robust detection methods and ethical guidelines are needed to mitigate these risks.

- Privacy Concerns: AI models trained on large datasets may inadvertently reproduce sensitive information, highlighting the need for data security measures and privacy-preserving techniques.

- Bias and Fairness: Generative AI can perpetuate biases present in training data, leading to unfair outcomes. Addressing bias requires careful dataset curation and fairness-aware training techniques.

- Intellectual Property and Copyright: The rise of generative AI introduces legal questions about IP rights and copyright, particularly when AI-generated content resembles existing works.

- Security Risks and Cyber Threats: Generative AI can be misused to mount cyberattacks, for example by generating convincing phishing emails or deepfake videos. Stronger security protocols are needed to detect and mitigate these threats.

- Identity Theft and Fraud: AI-generated realistic text, voices, and images can be exploited for identity theft and fraud. Advancements in verification techniques are necessary to ensure digital identity integrity.

- Regulatory and Legal Challenges: The rapid advancement of AI technology has outpaced regulatory frameworks, creating legal uncertainties. Policymakers are working to establish AI regulations and achieve global consensus.

- Impact on Creative Industries: Generative AI can disrupt traditional creative industries by automating tasks, potentially displacing jobs. Integrating AI into creative workflows should enhance human creativity rather than replace it.

Types of Generative Models

Generative AI models come in various forms, each with unique architectures and mechanisms to learn and generate data. Some of the most prominent types include:

- Variational Autoencoders (VAEs): These models use an encoder-decoder structure to learn a compressed representation of the data and then reconstruct it, generating new samples by sampling from a learned latent space. VAEs are particularly useful in applications such as image synthesis and anomaly detection.

- Generative Adversarial Networks (GANs): GANs consist of two neural networks, the generator and the discriminator, that compete against each other in a zero-sum game. The generator creates new data samples, while the discriminator attempts to distinguish between real and generated samples. This adversarial process leads to the creation of highly realistic synthetic data, which has been widely used in image and video generation.

- Autoregressive Models: These models generate data sequentially, predicting one element at a time based on previously generated elements. PixelCNN, for example, is used for image generation, while WaveNet has been instrumental in producing high-quality synthetic speech.

Each of these models has its strengths and weaknesses, depending on the specific application and computational requirements. While GANs excel in high-fidelity image generation, VAEs provide better control over the generated output, and autoregressive models perform well in sequential data generation, such as text and speech synthesis.
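To make the autoregressive idea concrete, the sketch below trains a character-level bigram model: it counts which character follows which, then generates text one character at a time, each conditioned on the previous one. This is a toy under stated assumptions (a one-sentence corpus, a context of a single character); models such as PixelCNN or WaveNet condition on much longer histories with neural networks.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, seed=0):
    """Generate text one character at a time from the bigram counts."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # no observed continuation: stop early
            break
        chars, weights = zip(*followers.items())
        out.append(rng.choices(chars, weights=weights)[0])
    return "".join(out)

corpus = "the cat sat on the mat and the cat ate the rat"
model = train_bigram(corpus)
sample = generate(model, start="t", length=20)
print(sample)
```

Every adjacent pair of characters in the output was seen in the corpus, but the sequence as a whole is new.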

Applications of Generative AI

Image Generation and Editing

One of the most prominent applications of Generative AI is in the creation and manipulation of images. Generative Adversarial Networks (GANs), for example, are capable of producing highly realistic images, such as human faces, landscapes, and artwork. These models can also perform image-to-image translation, enabling tasks such as style transfer, where an image adopts the artistic features of another, and colorization, where black-and-white images are transformed into colored versions. Beyond artistic applications, AI-powered image editing tools can remove objects, enhance image resolution, and generate missing portions of an image.

Text Generation and Summarization

Language models like GPT (Generative Pre-trained Transformer) have revolutionized the field of natural language processing (NLP) by enabling machines to generate coherent and contextually relevant text. These models can be used for a variety of tasks, including automated text generation, conversational AI, creative story writing, and text summarization, where they condense lengthy documents into concise overviews while preserving the key information. Such applications are widely used in journalism, content creation, and customer support automation.

Content Creation and Augmentation

Generative AI has expanded the possibilities for creative industries by enabling the automated generation of music, videos, and artwork. AI-driven tools can compose original melodies, generate entire paintings in the style of famous artists, and even create deepfake videos that superimpose one person’s face onto another with realistic results. Additionally, these models are useful for content augmentation, where they generate alternative variations of existing content or intelligently fill in missing sections to complete an incomplete composition.

Data Synthesis and Simulation

In many industries, acquiring high-quality and diverse data for training machine learning models is a significant challenge. Generative AI models can produce synthetic data that closely resembles real-world datasets, making them valuable for data augmentation, privacy-preserving synthetic data generation, and realistic simulations. This has important applications in healthcare, where AI-generated medical images help train diagnostic models without compromising patient privacy, and in autonomous driving, where simulated driving scenarios improve self-driving car algorithms without real-world risks. Additionally, generative models assist in financial forecasting and risk assessment by simulating potential market conditions.

Crafting Prompts

Interacting effectively with AI systems, especially large language models like GPT, requires well-structured prompts. Prompt engineering is the process of designing clear and precise inputs that guide the AI toward generating useful and relevant responses. Well-crafted prompts can significantly improve the accuracy, relevance, and creativity of AI-generated content.

Best Practices for Prompt Engineering

Be Specific

Clearly define the scope of your request to obtain focused and relevant responses. Instead of a vague prompt like “Tell me about dogs,” a more specific alternative would be “Describe the temperament and physical characteristics of Golden Retrievers.”

Provide Context

Including background information or relevant details helps the AI generate better responses. For instance, if you need historical information about a specific event, mentioning relevant dates or figures can improve the quality of the answer.

Ask Open-Ended Questions

To encourage detailed and insightful responses, frame prompts as open-ended questions. Instead of “Is climate change real?” try “What are the key scientific arguments supporting climate change?”

Use Keywords for Guidance

Incorporate key terms related to your topic to steer the AI toward the desired content. If discussing machine learning, mentioning terms like neural networks, training data, overfitting, etc., will help generate a more informed response.

Avoid Ambiguity

Clarity is essential when crafting prompts. Avoid overly broad or ambiguous questions that may lead to generic or misleading answers. For example, “Tell me about technology” is too broad, while “Explain how quantum computing differs from classical computing” is much more precise.

Encourage Engagement

Designing prompts that encourage AI to provide suggestions, opinions, or advice can create a more dynamic interaction. For example, instead of “List some business ideas,” a more engaging approach could be “Based on current market trends, what innovative startup ideas could be successful?”

Iterate and Refine

If the AI’s initial response is not satisfactory, refine your prompt by adding more details or restructuring your question. Experimenting with different phrasing, perspectives, or formats can lead to improved responses.

Provide Feedback to AI

If your interaction with an AI system allows for iterative feedback, take advantage of it by guiding the model toward more accurate or insightful answers. If the response lacks depth, a follow-up prompt like “Can you elaborate on that point?” can encourage a more detailed reply.

Mastering prompt engineering is crucial for effectively leveraging AI’s capabilities in research, content creation, and problem-solving. A well-designed prompt ensures that AI-generated content aligns with user expectations, making interactions more productive and insightful.
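Several of these practices (being specific, providing context, using keywords) can be captured in a small prompt template. The helper below is a hypothetical sketch, not part of any library: the function name, its parameters, and the field labels are assumptions chosen for illustration.

```python
def build_prompt(task, context=None, keywords=None, output_format=None):
    """Assemble a structured prompt from optional building blocks."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Task: {task}")
    if keywords:
        parts.append("Cover these terms: " + ", ".join(keywords))
    if output_format:
        parts.append(f"Format: {output_format}")
    return "\n".join(parts)

prompt = build_prompt(
    task="Explain how quantum computing differs from classical computing.",
    context="The reader is a first-year computer science student.",
    keywords=["qubit", "superposition", "entanglement"],
    output_format="Three short paragraphs.",
)
print(prompt)
```

Keeping the pieces separate makes iteration easier: to refine a prompt, change one field and compare the responses.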

Large Language Models (LLMs)

One of the most impactful applications of generative AI is in natural language processing, where Large Language Models (LLMs) have revolutionized text-based interactions.

Definition

A Large Language Model (LLM) is a type of artificial intelligence model designed to understand and generate human-like text based on the input it receives. These models are trained on vast amounts of text data, typically sourced from the internet or other large corpora, to learn the intricate patterns and structures of human language.

These models are typically built on the transformer architecture, which underlies OpenAI’s GPT series among others. Transformers use self-attention mechanisms to process all tokens of a sequence in parallel, capturing contextual relationships between words more effectively than recurrent neural networks (RNNs), including long short-term memory (LSTM) variants, which process tokens one at a time.
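The core of self-attention is scaled dot-product attention: each token's query is compared with every token's key, the scores are normalized with a softmax, and the result weights a sum over the values. The sketch below is a minimal pure-Python version under a simplifying assumption: the token vectors are used directly as queries, keys, and values, whereas a real transformer first applies learned projection matrices and uses multiple attention heads.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token embeddings (hypothetical values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1 and shows how much one token attends to every other token. Because no row depends on another, all rows can be computed in parallel, which is the property that distinguishes transformers from sequential RNNs.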

Once trained, an LLM can perform a wide range of tasks, including:

- Text Generation: Producing human-like text based on prompts, useful in content creation, storytelling, and automated report writing.

- Text Completion and Summarization: Completing incomplete sentences or summarizing long documents efficiently.

- Translation and Multilingual Understanding: Translating text between languages while preserving meaning and nuance.

- Question Answering and Chatbots: Powering AI-driven virtual assistants capable of understanding and responding to human queries.

- Sentiment Analysis and Text Classification: Analyzing emotions in customer reviews, social media posts, or emails to extract insights.

The ability of LLMs to generate coherent and contextually relevant text has led to their widespread adoption in various industries, including marketing, customer service, and academic research. However, challenges remain in ensuring factual accuracy, avoiding biases, and preventing AI-generated misinformation.

Multimodal Generative AI

Definition

Multimodal generative AI refers to artificial intelligence systems that can process and generate content across multiple types of data, such as text, images, and audio, simultaneously. Unlike traditional AI models that specialize in a single modality, multimodal generative AI integrates information from different sources to create richer, more context-aware outputs.

These systems rely on advanced deep learning architectures, such as transformers and diffusion models, to establish relationships between different data types and generate cohesive content.

One of the key advantages of multimodal AI is its ability to achieve cross-modal understanding, meaning it can interpret and relate data across different modalities. For instance, a multimodal model can generate a descriptive caption for an image, convert a textual prompt into a corresponding visual representation, or even create an audio narration based on a given text and image. This capability significantly enhances applications in creative content generation, accessibility tools, human-computer interaction, and automated media production.

To develop multimodal AI systems, large-scale datasets containing diverse and well-annotated examples across multiple modalities are required. These datasets often include paired data, such as images with corresponding text descriptions or video content with synchronized subtitles and audio transcripts. The training process involves learning the complex relationships between different data types, enabling the AI model to understand and generate meaningful cross-modal content. Modern architectures such as CLIP (Contrastive Language-Image Pretraining), DALL-E, and Flamingo exemplify the success of multimodal AI in bridging text and vision-based tasks.

Example

The implementation of multimodal generative AI has vast implications across various industries. In healthcare, it can aid in medical imaging analysis by integrating textual medical reports with diagnostic images. In education, it facilitates interactive learning experiences by generating educational videos and explanatory diagrams from textual material. In entertainment, it can be used to generate animated content, compose music based on textual descriptions, and create immersive virtual experiences.

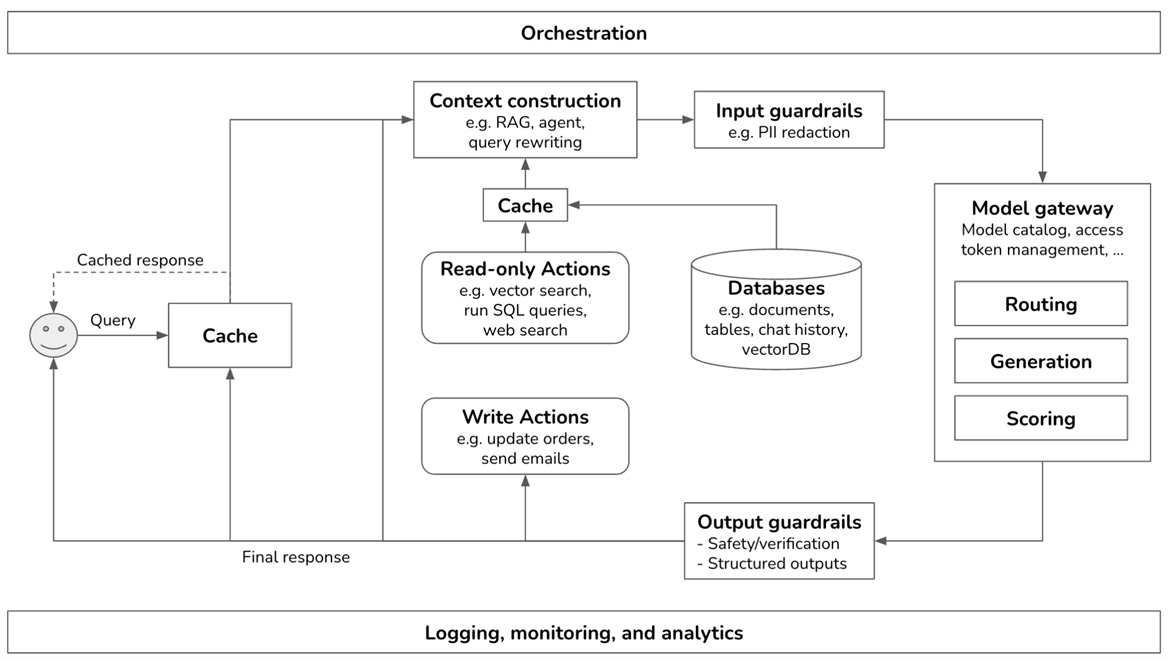

Platforms and Logical Modules

A Generative AI (GenAI) platform is a comprehensive framework designed to support the development, deployment, and management of generative AI models. These platforms integrate multiple technical components and logical modules to ensure efficient AI model operation, security, scalability, and compliance with enterprise requirements.

One critical component of a GenAI platform is cost monitoring, which helps manage and optimize computational resources. Training and deploying large-scale generative models require significant GPU or TPU power, making cost analysis essential for balancing performance and expenditure. Advanced platforms use automated resource scaling, cost tracking dashboards, and model efficiency optimizations to control expenses.

Security and observability play a vital role in maintaining the integrity of AI operations. Generative models are susceptible to data leakage, adversarial attacks, and misuse, necessitating robust security frameworks. Observability tools provide real-time monitoring of model performance, error analysis, and anomaly detection to ensure reliable operation. AI platforms often integrate zero-trust security models, role-based access control (RBAC), and encryption techniques to protect sensitive data.

The vector database (Vector DB) layer is another essential component, especially in retrieval-augmented generation (RAG) systems. Unlike traditional databases that store structured data, vector databases store embeddings: numerical vector representations of unstructured data such as text and images. This enables fast and efficient similarity searches, allowing generative AI models to retrieve relevant contextual information dynamically. Tools such as FAISS (Facebook AI Similarity Search), an open-source similarity-search library, and Pinecone, a managed vector database service, are widely used in vector search implementations.
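The similarity search at the heart of a vector database can be sketched as a brute-force cosine-similarity ranking. The example below is an illustration only: the three-dimensional embeddings and document ids are made up, and it does not use the FAISS or Pinecone APIs, which rely on specialized index structures to avoid comparing the query against every stored vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Return the ids of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]

# Hypothetical embeddings; a real system would obtain them from an embedding model.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "privacy-notice": [0.0, 0.2, 0.9],
}

results = top_k([0.8, 0.3, 0.0], index, k=2)
print(results)
```

The query vector points mostly in the same direction as the "refund-policy" embedding, so that document ranks first.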

Feedback mechanisms are crucial for continuous model improvement. AI platforms integrate human-in-the-loop (HITL) systems, reinforcement learning from human feedback (RLHF), and automated feedback collection methods to refine model outputs. This iterative approach ensures that AI-generated content aligns with user expectations and ethical standards.

Two key capabilities of modern GenAI platforms are Retrieval-Augmented Generation (RAG) and AI agents. RAG enhances text generation by retrieving relevant external knowledge before generating responses, significantly improving the accuracy and contextual relevance of AI outputs. AI agents can leverage multimodal inputs and reinforcement learning to perform complex tasks, such as automated report generation, conversational AI interactions, and autonomous decision-making in business processes.

Guardrails are essential for preventing undesired or harmful AI behaviors. These include content filtering systems, bias detection algorithms, and ethical AI constraints to ensure that generative models produce fair, safe, and appropriate outputs. Implementing guardrails is especially important in regulated industries such as finance, healthcare, and legal services, where AI-generated content must comply with strict governance policies.
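A very simple output guardrail can be sketched as a filter that runs on generated text before it reaches the user. The example below is a toy under stated assumptions: the blocked-term list, the email pattern, and the returned dictionary shape are all hypothetical, and production guardrails typically combine such rules with trained classifiers and policy engines.

```python
import re

BLOCKED_TERMS = {"password", "ssn"}  # hypothetical policy list
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def apply_guardrails(text):
    """Block text containing a forbidden term; otherwise redact email addresses."""
    lowered = text.lower()
    for term in sorted(BLOCKED_TERMS):
        if re.search(rf"\b{re.escape(term)}\b", lowered):
            return {"allowed": False, "text": None, "reason": f"blocked term: {term}"}
    redacted = EMAIL_PATTERN.sub("[REDACTED EMAIL]", text)
    return {"allowed": True, "text": redacted, "reason": None}

print(apply_guardrails("Contact jane.doe@example.com for help"))
print(apply_guardrails("What is the admin password?"))
```

The first call passes with the address redacted, and the second is rejected with a reason that can be logged for audit purposes.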

The LLM (Large Language Model) gateway and catalog serve as the access point for managing different generative models within an enterprise. The gateway enables API-based interaction with various LLMs, allowing businesses to deploy multiple models for different use cases. The catalog maintains metadata, versioning, and model lineage, ensuring traceability and reproducibility of AI outputs.

Overall, a well-designed GenAI platform integrates these logical components to provide a scalable, secure, and efficient AI environment for enterprises and researchers. By leveraging multimodal AI capabilities, retrieval-augmented generation, and robust security measures, organizations can harness the full potential of generative AI while mitigating risks and ensuring responsible AI deployment.

Technical Considerations

Building and deploying generative AI models requires careful consideration of several technical aspects:

- Data Preprocessing: Generative models require large and high-quality datasets to learn meaningful patterns. Preprocessing steps such as normalization, augmentation, and noise reduction play a crucial role in model performance.

- Model Selection and Training: Choosing the appropriate generative architecture depends on the task. GANs, for example, require adversarial training, while VAEs involve probabilistic inference. Training deep generative models is computationally expensive and often requires GPUs or TPUs.

- Hyperparameter Tuning: Parameters such as learning rates, latent space dimensionality, and network architecture must be carefully tuned to achieve high-quality outputs. Techniques like gradient clipping, batch normalization, and adversarial training stabilization help improve model performance.

- Evaluation Metrics: Assessing the quality of generated data is challenging, as traditional accuracy metrics do not apply. Instead, metrics such as Inception Score (IS) and Fréchet Inception Distance (FID) are used to estimate the realism and diversity of generated samples, while the Structural Similarity Index (SSIM) measures how closely a generated image matches a reference image.
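For reference, the Fréchet Inception Distance mentioned above is usually written as follows. The symbols are introduced here for illustration: $\mu_r, \Sigma_r$ denote the mean and covariance of Inception-network features computed on real images, and $\mu_g, \Sigma_g$ the same statistics for generated images.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
$$

Lower values indicate that the two feature distributions are closer. The score is zero when the means and covariances coincide.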

Generative AI in the Enterprise

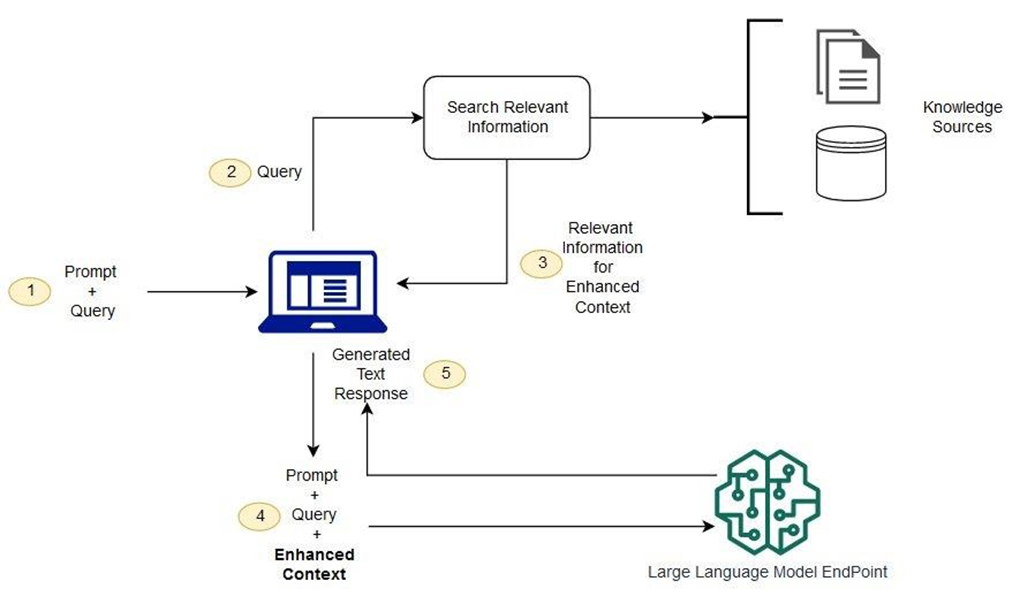

Retrieval-Augmented Generation (RAG) is an advanced natural language processing (NLP) technique that enhances generative models by integrating external knowledge retrieval. Instead of relying solely on pre-trained model knowledge, RAG retrieves relevant information from a document repository, database, or API before generating a response. This improves the accuracy, coherence, and factual consistency of AI-generated content.

In a RAG-based system, the process follows two main steps:

- Retrieval Phase – The AI system searches a large corpus of text, retrieving relevant passages related to the input query.

- Generation Phase – The generative model (such as GPT) incorporates the retrieved information to generate a more informed and contextually accurate response.
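The two phases above can be sketched end to end in a few lines. Everything in this example is a simplifying assumption: retrieval is plain word overlap rather than embedding search, the documents are made up, and `generate` is a hypothetical placeholder where a real system would call a language model.

```python
def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    for mark in "?.,":
        text = text.replace(mark, " ")
    return set(text.lower().split())

def retrieve(query, documents, k=1):
    """Retrieval phase: rank documents by word overlap with the query."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]

def build_augmented_prompt(query, passages):
    """Combine the retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"

def generate(prompt):
    # Hypothetical stand-in for the generation phase (an LLM call).
    return f"[model output for a prompt of {len(prompt)} characters]"

documents = [
    "Refunds are issued within 14 days of a returned purchase.",
    "Standard shipping takes 3 to 5 business days.",
    "Support is available by email on weekdays.",
]

query = "How many days does a refund take?"
passages = retrieve(query, documents, k=1)
prompt = build_augmented_prompt(query, passages)
answer = generate(prompt)
print(prompt)
```

The model only sees the passages that retrieval selected, which is why the quality of the retrieval step largely determines the factual accuracy of the final answer.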

By using RAG, businesses can leverage internal knowledge bases—such as customer support documents, legal contracts, or company manuals—to provide more relevant and organization-specific AI responses. This approach is particularly useful for industries requiring high levels of factual accuracy, such as legal, healthcare, and finance.

RAG in AWS

AWS provides enterprise-grade tools for implementing RAG-based AI solutions. By combining Amazon Kendra (a powerful enterprise search engine) with Amazon Bedrock, businesses can deploy AI models that retrieve and generate content with enhanced contextual awareness. AWS services enable organizations to integrate secure, scalable, and compliant AI-driven retrieval mechanisms, ensuring that AI-generated responses remain accurate, reliable, and tailored to specific business needs.

Common enterprise applications of RAG in AWS include:

- Automated Customer Support – Enhancing AI chatbots with accurate, up-to-date company knowledge.

- Legal and Compliance Document Analysis – Retrieving relevant legal clauses or compliance policies for AI-driven legal assistance.

- Healthcare Knowledge Management – Providing AI-generated medical responses based on verified scientific literature and clinical guidelines.

- Business Intelligence and Reporting – AI-assisted data retrieval for decision-making in finance, marketing, and operations.

The future of enterprise AI lies in combining generative capabilities with structured retrieval systems like RAG, ensuring that AI-powered solutions remain factually accurate, domain-specific, and adaptable to dynamic business needs.