What is Artificial Intelligence?

Artificial Intelligence (AI) is a comprehensive and rapidly evolving field of study concerned not only with understanding the principles of intelligence but also with the engineering of intelligent entities.

Definition

At its core, AI is the science of building machines that can perceive their environment, reason about their knowledge, and compute how to act effectively and safely, particularly in novel situations.

The pursuit of AI can be broadly framed by two fundamental perspectives, which mirror the historical distinction between the mind as an abstract process and the brain as a physical object:

- Modeling the Mind: This approach concentrates on the symbolic and reasoning aspects of intelligence. It seeks to model the high-level cognitive processes of conscious thought, problem-solving, and logical deduction.

- Modeling the Brain: This approach draws inspiration from neuroscience, attempting to replicate intelligence by simulating the underlying physical structures and electrochemical processes of the biological brain, such as the network of neurons.

Given these different motivations, there is no single, universally accepted definition of AI; various definitions have been proposed over the years, depending on the field of interest. These definitions are often categorized along two dimensions: the first distinguishes thought processes and reasoning from behavior and action, while the second contrasts fidelity to human performance with adherence to an ideal standard of rational performance. This framework yields four distinct approaches to AI, each with its own methodologies and goals.

| | Human Performance | Rational Performance |
|---|---|---|
| Thinking | Systems that think as humans | Systems that think rationally |
| Acting | Systems that act as humans | Systems that act rationally |

Systems that Think as Humans

This approach, known as the cognitive modeling approach, aims to construct systems that emulate human thought processes. To achieve this, one must first understand how humans think, a central question in the interdisciplinary field of cognitive science.

Researchers in this area employ several methods, including introspection (the attempt to observe one’s own mental processes), the observation of subjects in psychological experiments, and the analysis of brain activity through imaging techniques.

The ultimate goal is to create a sufficiently precise theory of the mind that can be expressed as a computer program.

A system is considered successful if its input-output behavior and the timing of its reasoning steps correspond to those of a human.

A classic example of this is the General Problem Solver (GPS) by Newell and Simon, which was designed to solve problems in a way that explicitly mirrored the protocols of human problem-solvers.

Systems that Think Rationally

This approach is centered on the “laws of thought,” striving to build systems that engage in “correct” or irrefutable reasoning. Rooted in a logicist tradition that dates back to the philosopher Aristotle and his syllogisms, this perspective defines intelligence as adherence to formal logical principles.

Definition

A system is considered rational if it thinks in accordance with a set of logical rules that guarantee correct conclusions from correct premises.

This view treats rationality as an ideal, mathematically defined standard. While powerful for problems that can be described with certainty, this approach’s reliance on formal logic presents challenges in the real world, which is inherently uncertain. Rational thought alone is often insufficient to produce intelligent behavior in complex environments.
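The idea of rules that guarantee correct conclusions from correct premises can be illustrated with a very small sketch. It is not a full logic engine: facts and rules are plain strings, the only inference rule is modus ponens, and the names `forward_chain`, `facts`, and `rules` are chosen here purely for illustration.

```python
def forward_chain(facts, rules):
    """Repeatedly apply modus ponens until no new fact can be derived.

    facts: an iterable of propositions assumed to be true.
    rules: a list of (premises, conclusion) pairs, read as
           "if all premises hold, then the conclusion holds".
    """
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if set(premises) <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


# Aristotle's classic syllogism, written propositionally for simplicity.
facts = {"socrates_is_human"}
rules = [
    (("socrates_is_human",), "socrates_is_mortal"),
    (("socrates_is_mortal", "socrates_is_greek"), "socrates_is_a_mortal_greek"),
]
conclusions = forward_chain(facts, rules)
```

The second rule never fires because one of its premises is not known to be true. This also hints at the limitation noted above: the procedure has no way to handle a premise that is merely probable.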

Systems that Act as Humans

This perspective defines intelligence through action and is most famously embodied by the Turing test approach. Proposed by Alan Turing in 1950, this paradigm sidesteps the philosophical debate about consciousness and “thinking” by focusing on performance.

Definition

A machine is deemed intelligent if it can interact with a human interrogator and produce responses that are indistinguishable from those of another human.

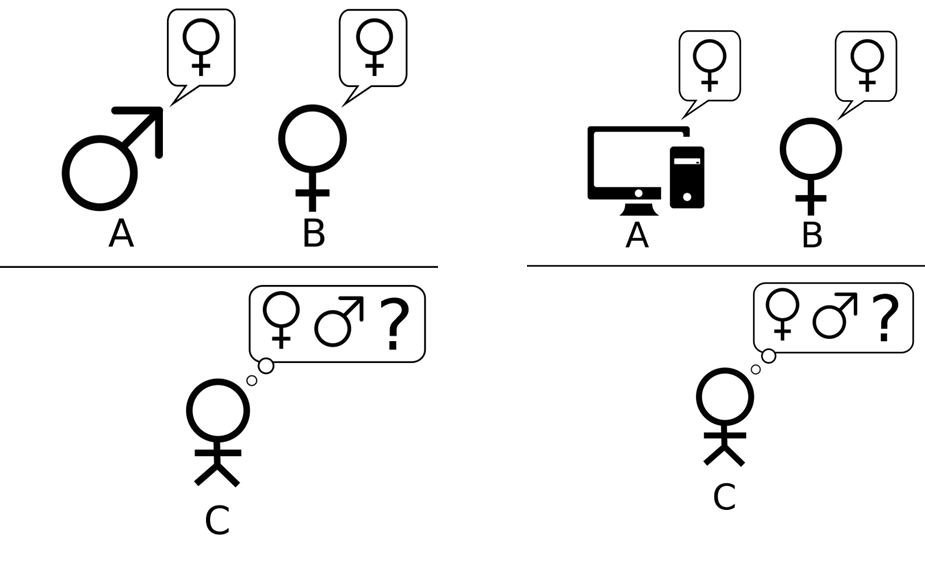

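The Original Imitation Game was conceived as a thought experiment by Alan Turing. In this setup, an interrogator (C) exchanges written messages with a man (A) and a woman (B), neither of whom the interrogator can see, and tries to determine which is which.

Building on this idea, Turing proposed the Turing Test as a criterion for machine intelligence. Here, the man (A) is replaced by a machine, and the question becomes whether the interrogator decides wrongly as often as when the game is played between a man and a woman.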

The objective is not necessarily to replicate human thought, but to produce an external behavior that is convincingly human-like.

Systems that Act Rationally

This approach, known as the rational agent approach, has become the standard model in modern AI research. It focuses on the design and construction of agents—entities that perceive and act—that operate to achieve the best possible outcome, or the best expected outcome when faced with uncertainty.

Definition

A Rational Agent acts purposefully to achieve its goals, given its beliefs and knowledge.

This paradigm is more general than the “laws of thought” approach, as correct inference is just one of several mechanisms for achieving rationality. It is also more amenable to scientific development than human-centric approaches because the standard of rationality is mathematically well-defined and can be engineered. This perspective has proven to be the most successful and pervasive, forming the foundation of modern AI applications.

The Birth and Scope of Artificial Intelligence

The formal establishment of Artificial Intelligence as an academic discipline is widely attributed to a workshop held at Dartmouth College during the summer of 1956. Organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, the event brought together the foremost researchers in the emerging fields of automata theory, neural nets, and intelligence simulation. The workshop’s proposal, written by McCarthy together with his co-organizers in 1955, articulated the field’s foundational premise, which remains its guiding principle:

Quote

The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.

McCarthy, 1955

This conjecture established the ambitious vision for AI: to create machines capable of performing any intellectual task that a human can.

The Research Area of AI

AI is a vast, interdisciplinary research area that encompasses numerous subfields and overlaps with many other scientific and engineering disciplines. Its internal structure can be understood as a hierarchy of progressively more specialized concepts.

At the highest level is Artificial Intelligence (AI), the overarching field dedicated to creating intelligent machines. A significant subfield of AI is Machine Learning (ML), which focuses on developing systems that can learn and improve their performance from data and experience without explicit programming. Within machine learning, Neural Networks (NN) represent a class of models inspired by the structure and function of the human brain, using interconnected nodes, or artificial neurons, to process information. A powerful and currently dominant subset of neural networks is Deep Learning (DL), which utilizes networks with many layers (deep architectures) to learn intricate patterns and representations from vast amounts of data. More recently, Generative AI has emerged from deep learning, focusing on models designed to create new, original content, such as images, music, and text. The most prominent examples of this today are Large Language Models (LLMs), which are specialized generative models trained on massive text corpora to understand, generate, and interact using human language.

AI’s methods and goals connect it to a wide range of other disciplines:

- Data Science and Data Mining: These fields are deeply intertwined with AI, particularly Machine Learning. Data science applies scientific methods to extract knowledge from data, while data mining focuses on discovering novel patterns in large datasets. The rise of “big data” has further blurred the lines, as AI techniques are essential for analysis at this scale.

- Robotics: This field is concerned with the design, construction, and operation of robots. The subfield of Autonomous Robotics exists at the intersection of AI and robotics, focusing on creating machines that can operate independently in the physical world. AI provides the “brain”—the perception, planning, and decision-making capabilities—for the robot’s physical body.

- Optimization: This mathematical field is dedicated to finding the best possible solution from a set of alternatives under given constraints. Many AI problems, from planning a route to training a neural network, are fundamentally optimization problems. The fields of operations research and control theory have contributed significantly to the notion of rational agents in AI.

Rational Agents

The Agent-Environment Paradigm

In the study of Artificial Intelligence, a foundational concept is the agent-environment paradigm. This framework provides a unified way to understand and design intelligent systems.

Definition

An agent is defined as any entity that perceives its environment through sensors and acts upon that environment through actuators. The environment is the world in which the agent operates, which can be a physical reality, such as the road network for a self-driving car, or a virtual one, like a simulated stock market for an automated trading program.

The agent receives sensory inputs, or percepts, and in response, performs actions that can alter the state of the environment.

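The behavior of an agent is mathematically described by its agent function, which maps any given sequence of percepts to a specific action. If we denote the action at time $t$ by $a_t$ and the percepts received up to that time by $p_1, \dots, p_t$, the agent function $f$ gives $a_t = f(p_1, \dots, p_t)$.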

This function is an abstract representation of the agent’s complete behavior. The central challenge in AI is to design agent functions that lead to desirable outcomes. This leads to the concept of rationality, which provides a formal way to define what it means for an agent to “do the right thing.”

Definition

A rational agent is one that, for every possible percept sequence, chooses an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and any built-in knowledge the agent possesses.
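A minimal sketch may make these two definitions more concrete. It assumes that the agent's knowledge is available as a table of possible outcomes with probabilities. The helper names (`run_agent`, `rational_action`, `outcome_model`) and all numbers are illustrative assumptions, not part of any standard library.

```python
def run_agent(agent_function, percept_stream):
    """Feed percepts one at a time; the agent function sees the whole history."""
    history = []
    actions = []
    for percept in percept_stream:
        history.append(percept)
        actions.append(agent_function(tuple(history)))
    return actions


def rational_action(outcome_model, performance):
    """Return the action whose expected performance is highest.

    outcome_model maps each action to a list of (probability, outcome) pairs;
    performance maps an outcome to a number.
    """
    def expected_performance(action):
        return sum(prob * performance(outcome)
                   for prob, outcome in outcome_model[action])
    return max(outcome_model, key=expected_performance)


# A toy agent function that only looks at the latest percept.
def toy_agent(percepts):
    return "Suck" if percepts[-1] == "dirty" else "NoOp"

actions = run_agent(toy_agent, ["dirty", "clean"])

# Hypothetical numbers: sucking usually, but not always, cleans the square.
outcome_model = {
    "Suck": [(0.9, "clean"), (0.1, "dirty")],
    "NoOp": [(1.0, "dirty")],
}
score = {"clean": 1.0, "dirty": 0.0}
best = rational_action(outcome_model, score.get)
```

Note that rationality here is about expected performance: `Suck` is the rational choice even though it fails in one case out of ten.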

Characterizing Agents and Environments

To design a rational agent effectively, one must first develop a comprehensive specification of its task environment. This is systematically achieved using the PEAS framework, which involves defining the Performance measure, Environment, Actuators, and Sensors.

Examples of agent types and their PEAS descriptions

| Agent Type | Performance Measure | Environment | Actuators | Sensors |
|---|---|---|---|---|
| Medical diagnosis system | Healthy patient, reduced costs | Patient, hospital, staff | Display of questions, tests, diagnoses | Touchscreen/voice entry of symptoms |
| Satellite image analysis | Correct categorization of objects | Orbiting satellite, downlink, weather | Display of scene categorization | High-resolution digital camera |
| Part-picking robot | Percentage of parts in correct bins | Conveyor belt with parts; bins | Jointed arm and hand | Camera, tactile sensors |
| Refinery controller | Purity, yield, safety | Refinery, raw materials, operators | Valves, pumps, heaters, stirrers | Temperature, pressure, flow sensors |
| Interactive English tutor | Student’s score on test | Set of students, testing agency | Display of exercises, feedback | Keyboard entry, voice |
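One way to keep a PEAS description explicit during design is to record it as a small data structure. The sketch below is only an illustration: the `PEAS` class and its `is_complete` check are hypothetical names, and the example values are taken from the part-picking robot entry above.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PEAS:
    """A task-environment specification: Performance, Environment, Actuators, Sensors."""
    performance: Tuple[str, ...]
    environment: Tuple[str, ...]
    actuators: Tuple[str, ...]
    sensors: Tuple[str, ...]

    def is_complete(self) -> bool:
        # A specification is usable only if every component has at least one entry.
        return all((self.performance, self.environment, self.actuators, self.sensors))


part_picker = PEAS(
    performance=("percentage of parts in correct bins",),
    environment=("conveyor belt with parts", "bins"),
    actuators=("jointed arm and hand",),
    sensors=("camera", "tactile sensors"),
)

# An under-specified description: no performance measure has been chosen yet.
draft = PEAS(performance=(), environment=("refinery",),
             actuators=("valves",), sensors=("temperature",))
```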

Task environments can be classified along several key dimensions, which profoundly influence the design of the agent.

Property

- Observable: An environment is fully observable if the agent’s sensors provide access to the complete state of the environment at each point in time. In a partially observable environment, the agent cannot see the full state, perhaps due to noisy sensors or occluded parts of the world.

- Agents: In a single-agent environment, the agent operates by itself. A multi-agent environment features other agents who may be cooperative or competitive, fundamentally changing the decision-making process.

- Determinism: An environment is deterministic if the next state is perfectly predictable given the current state and the agent’s action. In a stochastic environment, outcomes are probabilistic, while a non-deterministic environment has unpredictable outcomes without specified probabilities.

- Temporality: In an episodic environment, the agent’s experience is divided into independent episodes, where the action in one episode does not affect the next. In a sequential environment, the current choice has long-term consequences.

- Change: A static environment does not change while the agent is deliberating. A dynamic environment can change independently of the agent, requiring it to act in a timely manner.

- Data Type: The state of the environment, time, and the agent’s percepts and actions can be discrete (finite set of values) or continuous (a range of values).

- Knowledge: In a known environment, the agent understands the “rules of the game”—the outcomes of its actions. In an unknown environment, the agent must learn how the world works.

Example

- For example, a game of chess is a fully observable, multi-agent, deterministic, sequential, and discrete environment.

- In contrast, an automated taxi driver operates in a partially observable, multi-agent, stochastic, sequential, dynamic, and continuous environment, which represents one of the most challenging cases for AI.

Examples of task environments and their characteristics.

| Task Environment | Observable | Agents | Deterministic | Episodic | Static | Discrete |
|---|---|---|---|---|---|---|
| Crossword puzzle | Fully | Single | Deterministic | Sequential | Static | Discrete |
| Chess with a clock | Fully | Multi | Deterministic | Sequential | Semi | Discrete |
| Poker | Partially | Multi | Stochastic | Sequential | Static | Discrete |
| Backgammon | Fully | Multi | Stochastic | Sequential | Static | Discrete |
| Taxi driving | Partially | Multi | Stochastic | Sequential | Dynamic | Continuous |
| Medical diagnosis | Partially | Single | Stochastic | Sequential | Dynamic | Continuous |
| Image analysis | Fully | Single | Deterministic | Episodic | Semi | Continuous |
| Part-picking robot | Partially | Single | Stochastic | Episodic | Dynamic | Continuous |
| Refinery controller | Partially | Single | Stochastic | Sequential | Dynamic | Continuous |
| English tutor | Partially | Multi | Stochastic | Sequential | Dynamic | Discrete |
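The classification above can be encoded as a handful of boolean properties, which makes it easy to see at a glance why some environments are harder than others. This is a simplified sketch: the class and method names are assumptions made for illustration, and a plain boolean cannot express intermediate values such as "Semi".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskEnvironment:
    name: str
    fully_observable: bool
    single_agent: bool
    deterministic: bool
    episodic: bool
    static: bool
    discrete: bool

    def hard_dimensions(self):
        """List the dimensions on which this environment takes the harder value."""
        harder_label = {
            "fully_observable": "partially observable",
            "single_agent": "multi-agent",
            "deterministic": "stochastic",
            "episodic": "sequential",
            "static": "dynamic",
            "discrete": "continuous",
        }
        return [label for attr, label in harder_label.items()
                if not getattr(self, attr)]


crossword = TaskEnvironment("Crossword puzzle", True, True, True, False, True, True)
taxi = TaskEnvironment("Taxi driving", False, False, False, False, False, False)
```

Under this encoding the crossword puzzle is hard on one dimension only (it is sequential), whereas taxi driving takes the harder value on all six.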

Performance Measure

The performance measure is the objective criterion for judging the success of an agent’s behavior. It must be carefully defined by the agent’s designer, because the agent will rationally optimize for this measure even if doing so leads to unintended consequences. A well-designed performance measure focuses on what one wants to achieve in the environment, rather than on how one thinks the agent should behave.
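The difference between measuring outcomes and measuring behavior can be shown with a toy two-square cleaning world. Everything in this sketch is an illustrative assumption: the two trajectories are invented, and the `Dump` action is hypothetical, included only to show how a behavior-based measure can be gamed.

```python
def dirt_sucked(trajectory):
    """Behavior-based measure: count how often the agent performed Suck."""
    return sum(action == "Suck" for action, _ in trajectory)


def clean_square_steps(trajectory):
    """Outcome-based measure: one point per clean square per time step."""
    return sum(status == "Clean"
               for _, state in trajectory
               for status in state.values())


# Each entry is (action, state of the world after the action).
honest = [
    ("Suck",  {"A": "Clean", "B": "Dirty"}),
    ("Right", {"A": "Clean", "B": "Dirty"}),
    ("Suck",  {"A": "Clean", "B": "Clean"}),
    ("NoOp",  {"A": "Clean", "B": "Clean"}),
    ("NoOp",  {"A": "Clean", "B": "Clean"}),
]
gaming = [
    ("Suck", {"A": "Clean", "B": "Dirty"}),
    ("Dump", {"A": "Dirty", "B": "Dirty"}),  # hypothetical action: put the dirt back
    ("Suck", {"A": "Clean", "B": "Dirty"}),
    ("Dump", {"A": "Dirty", "B": "Dirty"}),
    ("Suck", {"A": "Clean", "B": "Dirty"}),
]
```

Under the behavior-based measure the gaming agent scores higher (3 versus 2), while under the outcome-based measure the honest agent scores higher (8 versus 3).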

Agent Programs

While the agent function is an abstract mapping, the agent program is the concrete implementation that runs on the agent’s physical hardware, or architecture. The central challenge for AI is to create compact and efficient agent programs that produce rational behavior without resorting to impossibly large lookup tables.

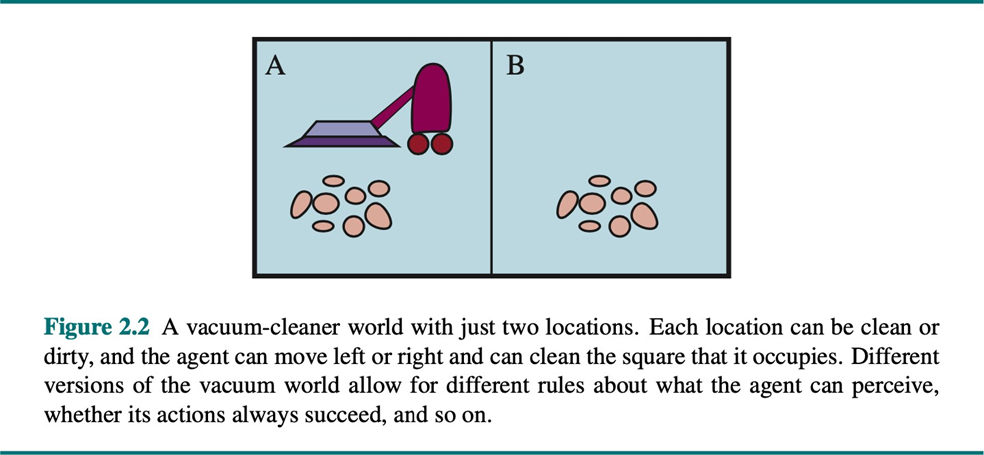

The vacuum-cleaner world, a simple environment with two locations (A and B), each of which can be clean or dirty.

Types of Agent Programs

- Table-Driven Agent: This is the most straightforward implementation of an agent function. It uses a pre-computed lookup table that stores the appropriate action for every possible sequence of percepts. While conceptually simple, this approach is practically infeasible for all but the most trivial environments due to the astronomical number of possible percept sequences, which leads to a table of unmanageable size.

    function TABLE-DRIVEN-AGENT(percept) returns an action
        persistent: percepts, a sequence, initially empty
                    table, a table of actions, indexed by percept sequences

        append percept to the end of percepts
        action <- LOOKUP(percepts, table)
        return action

- Simple Reflex Agent Program: This agent acts based solely on the current percept, ignoring the history of previous percepts. It uses a set of condition-action rules (if-then rules) to map the current state directly to an action. For the vacuum world, this is a highly efficient approach.

    function REFLEX-VACUUM-AGENT([location, status]) returns an action
        if status = Dirty then return Suck
        else if location = A then return Right
        else if location = B then return Left
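The two agent programs above can be compared directly in a short sketch. It assumes the vacuum world has four possible percepts (two locations, two statuses). For illustration only, it fills the lookup table so that it agrees with the reflex rules. The helper names `build_table` and `make_table_driven_agent` are hypothetical.

```python
from itertools import product

LOCATIONS = ("A", "B")
STATUSES = ("Clean", "Dirty")
PERCEPTS = tuple(product(LOCATIONS, STATUSES))  # 4 possible percepts


def reflex_vacuum_agent(percept):
    """Condition-action rules that look only at the current percept."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def build_table(max_length):
    """Store an action for every percept sequence of length 1..max_length."""
    table = {}
    for length in range(1, max_length + 1):
        for sequence in product(PERCEPTS, repeat=length):
            # Assumption: the designer wants the same behavior as the reflex rules.
            table[sequence] = reflex_vacuum_agent(sequence[-1])
    return table


def make_table_driven_agent(table):
    percepts = []  # persistent percept history

    def agent(percept):
        percepts.append(percept)
        return table[tuple(percepts)]  # LOOKUP(percepts, table)

    return agent


table = build_table(max_length=3)
table_agent = make_table_driven_agent(table)
stream = [("A", "Dirty"), ("A", "Clean"), ("B", "Dirty")]
table_actions = [table_agent(p) for p in stream]
reflex_actions = [reflex_vacuum_agent(p) for p in stream]
```

Both agents produce the same actions here, but the table already needs 4 + 16 + 64 = 84 entries to cover just three time steps, and it grows by a factor of four with every additional step. The reflex program stays three rules long.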

The program we choose must be appropriate for the agent’s architecture (the physical hardware it runs on), because we don’t want to build a supercomputer to run a simple vacuum cleaner!

The key challenge is to write a smallish program that produces rational behavior, rather than relying on an enormous lookup table.

Agent Architectures

During the early days of AI, researchers explored various architectures for building intelligent agents. These architectures can be broadly categorized into four types, each with increasing levels of complexity and capability:

- Simple Reflex Agents: act on the current percept alone, using a set of condition-action rules.

- Model-Based Reflex Agents: maintain an internal state, updated using models of how the world works, to keep track of aspects of the world that are not currently observable.

- Goal-Based Agents: reason about the consequences of action sequences and choose one that leads to a desirable goal state.

- Utility-Based Agents: choose the action that maximizes expected utility, which lets them act under uncertainty and handle trade-offs between conflicting objectives.

Simple Reflex Agents

These agents make decisions based on the current state of the world as perceived by their sensors. Their internal logic consists of a set of condition-action rules.

    function SIMPLE-REFLEX-AGENT(percept) returns an action
        persistent: rules, a set of condition-action rules

        state <- INTERPRET-INPUT(percept)
        rule <- RULE-MATCH(state, rules)
        action <- rule.ACTION
        return action
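The pseudocode above might be sketched in Python as follows. The percept strings, the feature names, and the three rules are invented for illustration, and `interpret_input` stands in for whatever sensor processing a real agent would need.

```python
from typing import Callable, Dict, List, NamedTuple

State = Dict[str, bool]


class Rule(NamedTuple):
    condition: Callable[[State], bool]
    action: str


def interpret_input(percept: str) -> State:
    """Hypothetical sensor interpretation: raw reading -> named boolean features."""
    return {"obstacle": percept == "bump", "dirt": percept == "dirt"}


# Rules are checked in order; the last one is a catch-all default.
RULES: List[Rule] = [
    Rule(lambda s: s["obstacle"], "turn"),
    Rule(lambda s: s["dirt"], "suck"),
    Rule(lambda s: True, "forward"),
]


def rule_match(state: State, rules: List[Rule]) -> Rule:
    """Return the first rule whose condition holds in the given state."""
    return next(rule for rule in rules if rule.condition(state))


def simple_reflex_agent(percept: str, rules: List[Rule] = RULES) -> str:
    state = interpret_input(percept)
    rule = rule_match(state, rules)
    return rule.action
```

Because the function keeps no memory between calls, the same percept always yields the same action, regardless of what happened before.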

Example

- For example, an early Roomba vacuum might operate on the simple rule, “if an obstacle is detected, change direction.”

- Similarly, the web service IFTTT (If This Then That) allows users to create simple rules like, “IF I am tagged in a photo on social media, THEN save the photo to my cloud storage.”

However, these agents are limited because they cannot handle partial observability; if the environment requires memory of past events to make a correct decision, a simple reflex agent will fail.

Model-Based Reflex Agents (Reflex Agents with State)

To overcome the limitations of simple reflex agents, a model-based agent maintains an internal state to keep track of the aspects of the world it cannot currently perceive. This state is updated over time using two models:

- a transition model, which describes how the world evolves independently and as a result of the agent’s actions,

- and a sensor model, which describes how the state of the world is reflected in the agent’s percepts.

This architecture allows the agent to function effectively in partially observable environments.

    function MODEL-BASED-REFLEX-AGENT(percept) returns an action
        persistent: state, the agent's current conception of the world state
                    transition_model, a description of how the next state depends on state and action
                    sensor_model, a description of how the world state is reflected in percepts
                    rules, a set of condition-action rules
                    action, the most recent action

        state <- UPDATE-STATE(state, action, percept, transition_model, sensor_model)
        rule <- RULE-MATCH(state, rules)
        action <- rule.ACTION
        return action
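As a rough illustration, the sketch below applies this scheme to the two-square vacuum world. It makes several assumptions: the sensors are noiseless, the transition model is the single fact that `Suck` cleans the current square, and a `NoOp` action exists. The class name and its attributes are hypothetical.

```python
class ModelBasedVacuumAgent:
    def __init__(self):
        # Internal state: believed status of each square (None = never observed).
        self.believed = {"A": None, "B": None}
        self.location = None
        self.action = None  # most recent action

    def update_state(self, percept):
        location, status = percept
        # Transition model: after Suck, the square the agent was on is clean.
        if self.action == "Suck" and self.location is not None:
            self.believed[self.location] = "Clean"
        # Sensor model (assumed noiseless): the percept shows the true local status.
        self.location = location
        self.believed[location] = status

    def __call__(self, percept):
        self.update_state(percept)
        if self.believed[self.location] == "Dirty":
            self.action = "Suck"
        elif all(status == "Clean" for status in self.believed.values()):
            self.action = "NoOp"  # everything is known to be clean: stop moving
        else:
            self.action = "Right" if self.location == "A" else "Left"
        return self.action


agent = ModelBasedVacuumAgent()
percepts = [("A", "Dirty"), ("A", "Clean"), ("B", "Clean"), ("B", "Clean")]
actions = [agent(p) for p in percepts]
```

The remembered status of square A is what allows the agent to stop once B is seen to be clean. A simple reflex agent in the same situation would keep shuttling between the two squares.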

Example

For instance, modern Roomba vacuums use SLAM (Simultaneous Localization and Mapping) to build an internal map of a room (the state) as they clean, allowing them to navigate intelligently and ensure complete coverage even without seeing the entire room at once.

Goal-Based Agents

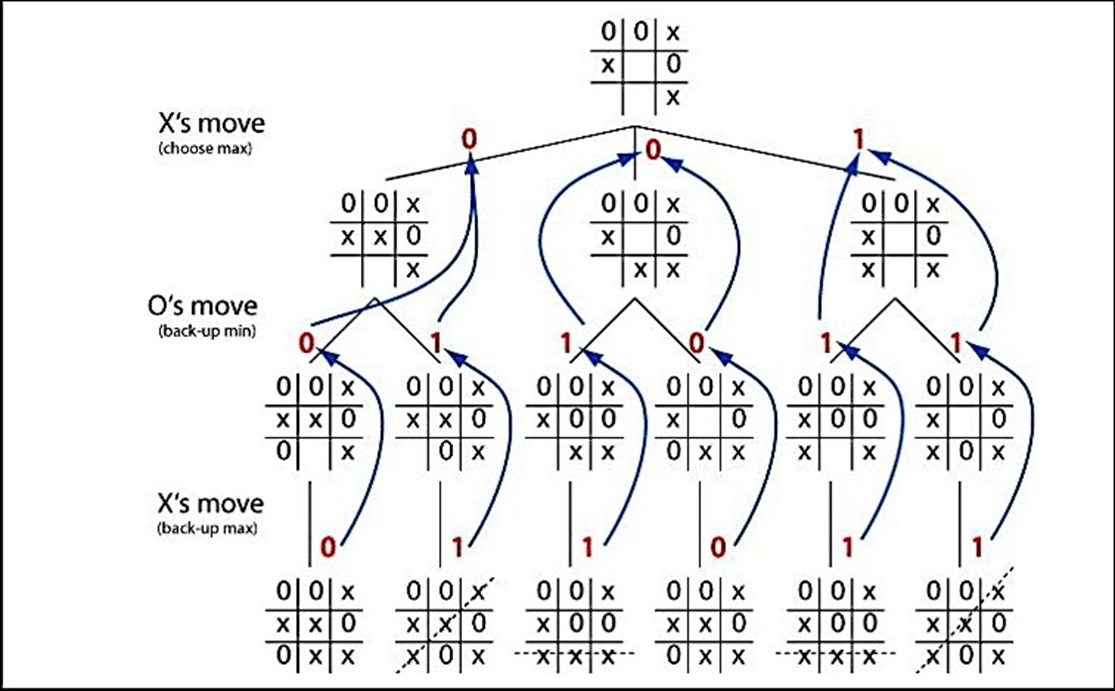

While knowing the current state is useful, rational behavior often requires having a goal. Goal-based agents are capable of considering the future by reasoning about the consequences of their actions. They use their model of the world to project how different action sequences will change the environment, and then choose a sequence that leads to a desirable goal state. This often involves search and planning algorithms.

Example

An AI playing a game like Tic-Tac-Toe, for example, does not simply react to the opponent’s last move; it explores a tree of possible future moves to find a path that leads to a winning configuration.
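The same idea of projecting action sequences forward can be sketched in a setting smaller than Tic-Tac-Toe. The example below assumes a two-square vacuum world with a deterministic transition model, uses breadth-first search as the planner, and takes "both squares clean" as the goal. The state encoding and function names are illustrative choices.

```python
from collections import deque


def successors(state):
    """Transition model: yield (action, next_state) for state = (location, status_a, status_b)."""
    location, status_a, status_b = state
    yield "Left", ("A", status_a, status_b)
    yield "Right", ("B", status_a, status_b)
    if location == "A":
        yield "Suck", (location, "Clean", status_b)
    else:
        yield "Suck", (location, status_a, "Clean")


def is_goal(state):
    _, status_a, status_b = state
    return status_a == "Clean" and status_b == "Clean"


def plan(start):
    """Breadth-first search for a shortest action sequence reaching a goal state."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if is_goal(state):
            return actions
        for action, next_state in successors(state):
            if next_state not in visited:
                visited.add(next_state)
                frontier.append((next_state, actions + [action]))
    return None  # no goal state is reachable


actions = plan(("A", "Dirty", "Dirty"))
```

Unlike a reflex agent, this agent commits to a whole sequence (`Suck`, `Right`, `Suck`) because it has simulated where each action leads before acting.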

Utility-Based Agents

In many complex environments, achieving a goal is not sufficient; some paths to the goal are better than others. A utility function provides a more nuanced way to guide behavior by mapping a state or sequence of states to a real number that represents a degree of “happiness” or desirability. A utility-based agent chooses the action that maximizes the expected utility, allowing it to make rational decisions in the face of uncertainty and to handle trade-offs between conflicting objectives.

For example, a self-driving car's goal is to reach a destination, but a utility function allows it to weigh factors like travel time, safety, fuel consumption, and passenger comfort to select the optimal route, not just any valid route. This ability to balance multiple preferences is essential for generating high-quality, intelligent behavior.
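A small numerical sketch shows how such a trade-off could be computed. All routes, probabilities, costs, and weights below are invented for illustration, and the utility is assumed to be a simple negative weighted sum of costs, which is only one of many possible forms.

```python
def utility(outcome, weights):
    """Negative weighted cost: lower time, fuel, and risk mean higher utility."""
    return -sum(weights[key] * outcome[key] for key in weights)


def expected_utility(lottery, weights):
    """lottery is a list of (probability, outcome) pairs for one route."""
    return sum(prob * utility(outcome, weights) for prob, outcome in lottery)


def best_route(routes, weights):
    return max(routes, key=lambda name: expected_utility(routes[name], weights))


# Hypothetical routes: the highway is usually fast but may hit a traffic jam.
routes = {
    "highway": [
        (0.8, {"minutes": 20, "fuel_litres": 3.0, "risk": 1.0}),
        (0.2, {"minutes": 50, "fuel_litres": 4.0, "risk": 1.0}),
    ],
    "back_roads": [
        (1.0, {"minutes": 30, "fuel_litres": 2.0, "risk": 2.0}),
    ],
}
weights = {"minutes": 1.0, "fuel_litres": 2.0, "risk": 5.0}

choice = best_route(routes, weights)
highway_eu = expected_utility(routes["highway"], weights)
back_roads_eu = expected_utility(routes["back_roads"], weights)
```

Both routes reach the destination, so a purely goal-based agent could not distinguish them. With these weights the highway has the higher expected utility (about -37.4 versus -44.0), and changing the weights or the jam probability can reverse the choice.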